Today, industry and the internet are becoming ever more closely connected, and intelligent manufacturing has become the next major trend in industry. In both China's Made in China 2025 plan and Germany's Industry 4.0 plan, robots are an essential part of building a strong manufacturing country. Having started out as simple, centrally controlled production tools, robots are now developing toward multiple sensing abilities, autonomous decision-making, efficient communication, and intelligent collaboration with humans. At the same time, their workspaces and ranges of motion keep expanding, and they are increasingly entering the unstructured environments of human production and daily life. Human-robot integration will be a fundamental characteristic of the next generation of robots: humans and robots coexist in the same natural space and coordinate closely with each other. While human safety is ensured, robots can autonomously improve their skills and interact naturally with people. For workers, robots are no longer just production tools but assistants, which is why collaborative robots are often called "cobots."

The main problems with traditional industrial robots are as follows:

① Traditional robots are expensive to deploy. Industrial robots designed for repetitive tasks rely on high repeatability (repeat positioning accuracy) and a fixed external environment. Configuring, programming, and maintaining them, and designing that fixed environment around them, all entail significant costs.

② Traditional robots cannot meet the needs of small and medium-sized enterprises (SMEs). Traditional industrial robots are designed primarily for large-scale manufacturers such as automakers, whose products are standardized and whose production lines run unchanged for long periods, so reprogramming or redeployment is rarely needed. SMEs, by contrast, typically work with small batches, customized products, and short production cycles, which demand robots that can be deployed quickly and operated easily.

③ Traditional robots cannot meet the emerging demands of the collaborative market. Traditional industrial robots are built to complete tasks with high precision at high speed; safety when sharing a workspace with humans was not a primary design concern, and fences were typically used to keep people and robots apart. However, as labor costs rise, industries that previously made little use of robots, such as 3C (computers, communications, and consumer electronics), medical, and food, have also begun seeking automation solutions. Work in these industries involves handling a wide variety of products and demands high flexibility and dexterity from operators, which traditional robots cannot provide. Collaborative robots have emerged in response, and their adoption is growing. They work together with humans: people handle the tasks that require flexibility and dexterity, while robots take over repetitive work. Their built-in safety features allow this collaboration without fences between humans and robots.

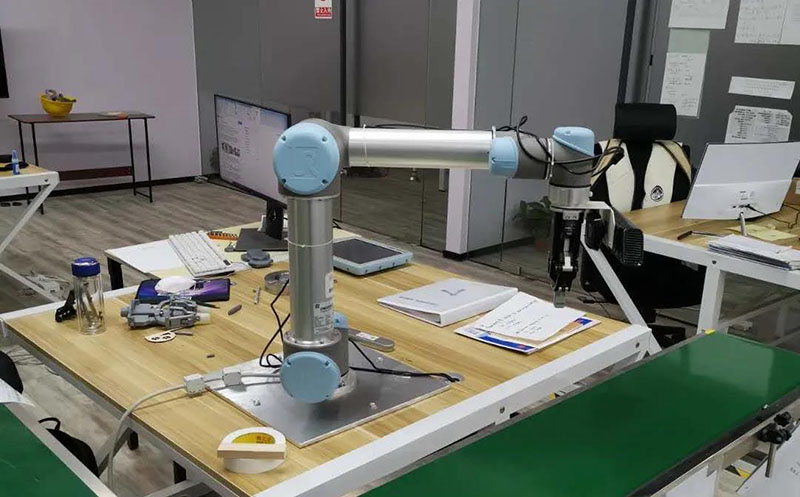

Thanks to their safety, ease of use, and openness, collaborative robots have a wide range of applications in both industrial and non-industrial fields. They can meet the miniaturization and precision requirements of the emerging small electronics industry, performing tasks such as material handling and product inspection, and they also suit commercial (non-industrial) scenarios such as order picking in warehouse logistics, surgical assistance in medicine, and massage, working alongside humans in a shared environment.

For a collaborative robot to carry out its functions, its motion trajectory must be planned. At present, robot trajectory planning poses a high professional barrier for ordinary users, which limits the large-scale adoption of collaborative robots. Teaching-learning (also known as learning from demonstration) offers a simple, direct way to plan trajectories: the robot "observes" a human demonstrating the motion and then applies suitable machine learning methods to model and learn it, acquiring the ability to complete the corresponding task. This significantly reduces the cost for users of learning and operating the robot.

Teaching-learning involves several stages. Before learning, each robotic system must choose a suitable data acquisition method to record the teaching data. During learning, because humans and robots differ in size and motion capabilities, some systems must resolve the human-robot correspondence problem in the teaching data. Next, modeling the teaching data to obtain a motion model that adapts to different scenarios is the core of teaching-learning. Finally, the method must use the learned model to generate motion trajectories adapted to new task scenarios.

Throughout this process, acquiring high-precision motion data is crucial. Methods for obtaining teaching-learning data fall into two major categories. The first is the mapping method, which captures human motion with motion capture systems, optical sensors, data gloves, and other sensors, and then maps the data onto robot actions. This approach is straightforward and intuitive, yields smooth motion trajectories, and is generally used for robots whose structure resembles the human body. The second is the non-mapping method, in which the operator either moves the robot arm directly by hand (haptic teaching) or drives it with a teaching pendant or joystick (remote-control teaching) while the joint motion is recorded. This approach removes the need to map between the demonstrator and the robot, but the resulting trajectories are less smooth.
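To make the mapping step concrete, here is a minimal sketch of one simple way to map captured hand positions into a robot's base frame. It assumes the motion capture frame has already been calibrated to the robot base as a rotation `rotation` and translation `translation`, and that a uniform `scale` factor plus per-axis workspace limits stand in for the human-robot correspondence problem. All of these names and values are hypothetical, and robots that differ strongly from the human body usually need joint-level retargeting instead.

```python
from typing import List, Sequence

Vec3 = List[float]


def map_to_robot(p_mocap: Sequence[float],
                 rotation: Sequence[Sequence[float]],
                 translation: Sequence[float],
                 scale: float) -> Vec3:
    """Map one motion-capture point into the robot base frame.

    Computes rotation @ (scale * p_mocap) + translation. The calibration
    (rotation, translation) and the scale factor are hypothetical inputs.
    """
    scaled = [scale * c for c in p_mocap]
    return [sum(rotation[i][j] * scaled[j] for j in range(3)) + translation[i]
            for i in range(3)]


def clamp_to_workspace(p: Sequence[float],
                       lower: Sequence[float],
                       upper: Sequence[float]) -> Vec3:
    """Clip each coordinate to an assumed axis-aligned robot workspace."""
    return [min(max(c, lo), hi) for c, lo, hi in zip(p, lower, upper)]


def map_trajectory(points, rotation, translation, scale, lower, upper):
    """Map and clip a whole demonstrated trajectory, frame by frame."""
    return [clamp_to_workspace(map_to_robot(p, rotation, translation, scale),
                               lower, upper)
            for p in points]
```

For example, with an identity rotation, `translation = [0.5, 0.0, 0.2]`, and `scale = 0.8`, the captured point `[0.1, 0.2, 0.3]` maps to approximately `[0.58, 0.16, 0.44]`. Keeping calibration and scaling as separate, explicit inputs makes it easy to recalibrate when the cameras or the robot are moved.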

When collaborative robots must reproduce human-like motion with high fidelity, teaching-learning usually relies on the mapping method, which captures human motion directly; capturing body movements in this way provides more accurate teaching-learning data.

For instance, some industries demand high precision from collaborative robots, such as the assembly of precision components or surgical assistance in medicine. These applications require accurate modeling of human motion, together with suitable machine learning methods and execution strategies that enable the robot to complete the specified tasks, and therefore depend on high-precision human motion trajectory data.

The NOKOV optical 3D motion capture system offers sub-millimeter accuracy and low latency, and it is widely used to acquire teaching-learning data for collaborative robots. An optical motion capture system consists mainly of hardware, namely infrared motion capture cameras, and a computer software system. During capture, multiple cameras emit infrared light and continuously take high-speed images of the markers. The markers' movement trajectories are computed from this image sequence: the image data are transmitted to the computer, which processes the grayscale images to obtain the markers' spatial coordinates and other high-precision data.
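The processing chain described above (markers imaged by several infrared cameras, grayscale images turned into 3D coordinates) can be illustrated with a minimal two-camera sketch. It assumes calibrated pinhole cameras with hypothetical intrinsics `fx, fy, cx, cy`, a known camera-to-world rotation and camera center, and one marker per image. It locates the marker as the intensity-weighted centroid of bright pixels and triangulates with the textbook midpoint method. This is not NOKOV's algorithm, which the text does not describe; production systems typically fuse many cameras with least-squares solutions.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def marker_centroid(image: Sequence[Sequence[int]],
                    threshold: int) -> Optional[Tuple[float, float]]:
    """Intensity-weighted centroid (u, v) = (column, row) of pixels >= threshold."""
    total = su = sv = 0.0
    for v, row in enumerate(image):
        for u, value in enumerate(row):
            if value >= threshold:
                total += value
                su += u * value
                sv += v * value
    if total == 0:
        return None  # no marker visible in this camera
    return su / total, sv / total


def pixel_to_ray(u, v, fx, fy, cx, cy, rotation, center):
    """Back-project pixel (u, v) through a pinhole camera into a world-frame ray.

    rotation is the (hypothetical) camera-to-world rotation, center the camera center.
    """
    d_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)
    d_world = tuple(sum(rotation[i][j] * d_cam[j] for j in range(3)) for i in range(3))
    return tuple(center), d_world


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(p1, d1, p2, d2) -> Vec3:
    """Midpoint of the shortest segment between rays p1 + s*d1 and p2 + t*d2."""
    w0 = [a - b for a, b in zip(p1, p2)]
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    s = (b * e - c * d) / denom  # closest-point parameter on ray 1
    t = (a * e - b * d) / denom  # closest-point parameter on ray 2
    q1 = [pi + s * di for pi, di in zip(p1, d1)]
    q2 = [pi + t * di for pi, di in zip(p2, d2)]
    return tuple((x + y) / 2 for x, y in zip(q1, q2))
```

Per frame, one would call `marker_centroid` on each camera image, convert each centroid into a ray with `pixel_to_ray`, and pass two rays to `triangulate_midpoint`; repeating this over the image sequence yields the marker's 3D trajectory. The midpoint method is used here only because it needs no linear-algebra library.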

NOKOV has established in-depth cooperation with many universities, providing high-precision motion capture for collaborative robot research across various industries.

A team led by Professor Lou Yunjiang of Harbin Institute of Technology proposed a teaching-learning method for collaborative robots assembling 3C parts. The method uses a motion capture system covering a 1 m × 1 m area, with six NOKOV infrared optical motion capture cameras sampling at 340 fps. The cameras record the movement of infrared-reflective markers attached to the operator's hands, yielding the 3D positions of the hands and fingers. The optical motion capture system works in a typical industrial environment without interfering with the operator's normal assembly work, and it requires neither excessive space nor a dense arrangement of equipment. After the teaching data are acquired, a series of preprocessing techniques extracts smooth assembly trajectories from multiple demonstrations. Finally, a policy learning algorithm derives the strategy the robot uses to regenerate the assembly trajectories, enabling it to perform the assembly task from random starting positions and orientations.
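The text does not specify the team's preprocessing steps, so the following is only a generic sketch of how several demonstrations might be merged into one smooth reference trajectory: each demonstration is resampled to a common length on normalized time, smoothed with a centered moving average, and averaged sample by sample. The function names, the window size, and the use of plain averaging (rather than, say, dynamic time warping or a probabilistic model) are assumptions. For scale, at 340 fps consecutive frames are about 1/340 s ≈ 2.94 ms apart.

```python
from typing import List, Sequence


def resample(traj: Sequence[float], n: int) -> List[float]:
    """Linearly interpolate a 1-D trajectory onto n evenly spaced normalized-time samples."""
    if n < 2 or len(traj) < 2:
        raise ValueError("need at least two input and two output samples")
    last = len(traj) - 1
    out = []
    for k in range(n):
        pos = k * last / (n - 1)       # fractional index into the original samples
        i = min(int(pos), last - 1)    # left neighbor, kept inside the array
        frac = pos - i
        out.append(traj[i] * (1 - frac) + traj[i + 1] * frac)
    return out


def moving_average(traj: Sequence[float], window: int) -> List[float]:
    """Centered moving average; the window shrinks near the ends."""
    half = window // 2
    out = []
    for i in range(len(traj)):
        seg = traj[max(0, i - half):min(len(traj), i + half + 1)]
        out.append(sum(seg) / len(seg))
    return out


def mean_demonstration(demos: Sequence[Sequence[float]], n: int,
                       window: int = 5) -> List[float]:
    """Resample, smooth, then average several demonstrations of one coordinate."""
    aligned = [moving_average(resample(d, n), window) for d in demos]
    return [sum(vals) / len(vals) for vals in zip(*aligned)]
```

Applied to each of the x, y, and z coordinates of a hand marker, `mean_demonstration` yields one reference trajectory per axis. Plain averaging only works when the demonstrations have similar timing; if they do not, a time-alignment step such as dynamic time warping would normally come first.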

A team led by Professor Yang Dewei at Chongqing University of Posts and Telecommunications proposed a demonstration-based modeling method for medical robot suturing, which models the uniform suturing technique through demonstration, decomposition, and modeling.

The uniform suturing modeling method consists of the following four steps:

① A doctor demonstrates a suturing operation.

② The demonstrated suturing operation is decomposed into action primitives.

③ A parametric model is established for the initial and final states of the suturing action primitives.

④ The Dynamic Movement Primitives (DMP) method is used to model the dynamic sub-processes of the suturing actions. The parametric models of the primitives' dynamic processes, together with their start and end state parameters, make up a suturing skill library.
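Step ④ names the DMP method; as an illustration, here is a minimal one-dimensional discrete DMP in the commonly used Ijspeert-style formulation, learned from a single demonstration by locally weighted regression and replayed with explicit Euler integration. The gains (`alpha_z = 25`, `beta_z = alpha_z / 4`, `alpha_x = 3`), the 30 Gaussian basis functions, and the width heuristic are assumptions, not values from the study. In this formulation the start state `y0` and goal `g` are explicit parameters, which is one natural place for start and end state parameters like those in step ③ to enter; a 3-D suturing primitive would use one such DMP per coordinate plus a separate treatment of orientation.

```python
import math
from typing import List, Optional, Sequence


def _derivative(values: Sequence[float], dt: float) -> List[float]:
    """Central differences in the interior, one-sided differences at the ends."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        out.append((values[hi] - values[lo]) / ((hi - lo) * dt))
    return out


class DMP1D:
    """Minimal one-dimensional discrete DMP.

    Transformation system:  tau * dz/dt = alpha_z * (beta_z * (g - y) - z) + f(x)
                            tau * dy/dt = z
    Canonical system:       x(t) = exp(-alpha_x * t / tau)
    Forcing term:           f(x) = sum_i psi_i(x) w_i / sum_i psi_i(x) * x * (g - y0)
    """

    def __init__(self, n_bfs: int = 30, alpha_z: float = 25.0, alpha_x: float = 3.0):
        # beta_z = alpha_z / 4 makes the spring-damper critically damped.
        self.alpha_z, self.beta_z, self.alpha_x = alpha_z, alpha_z / 4.0, alpha_x
        # Gaussian centers spaced evenly in time, expressed in the phase variable x.
        self.c = [math.exp(-alpha_x * i / (n_bfs - 1)) for i in range(n_bfs)]
        gaps = [self.c[i] - self.c[i + 1] for i in range(n_bfs - 1)]
        gaps.append(gaps[-1])
        self.h = [1.0 / gap ** 2 for gap in gaps]  # width heuristic: one kernel per gap
        self.w = [0.0] * n_bfs
        self.y0, self.goal, self.tau = 0.0, 1.0, 1.0

    def _psi(self, x: float) -> List[float]:
        return [math.exp(-h * (x - c) ** 2) for h, c in zip(self.h, self.c)]

    def fit(self, y_demo: Sequence[float], dt: float) -> None:
        """Learn the weights from one demonstration via locally weighted regression."""
        n = len(y_demo)
        self.y0, self.goal, self.tau = y_demo[0], y_demo[-1], (n - 1) * dt
        yd = _derivative(y_demo, dt)
        ydd = _derivative(yd, dt)
        xs = [math.exp(-self.alpha_x * i * dt / self.tau) for i in range(n)]
        # Forcing term implied by the demonstration (using z = tau * dy/dt).
        f_target = [self.tau ** 2 * a
                    - self.alpha_z * (self.beta_z * (self.goal - y) - self.tau * v)
                    for y, v, a in zip(y_demo, yd, ydd)]
        s = [x * (self.goal - self.y0) for x in xs]
        psis = [self._psi(x) for x in xs]
        for i in range(len(self.w)):
            num = sum(sk * p[i] * fk for sk, p, fk in zip(s, psis, f_target))
            den = sum(sk * sk * p[i] for sk, p in zip(s, psis)) + 1e-10
            self.w[i] = num / den

    def rollout(self, dt: float, goal: Optional[float] = None,
                y0: Optional[float] = None,
                run_time: Optional[float] = None) -> List[float]:
        """Integrate with explicit Euler steps, optionally with a new start and goal."""
        goal = self.goal if goal is None else goal
        y0 = self.y0 if y0 is None else y0
        steps = int(round((run_time or self.tau) / dt))
        y, z = y0, 0.0
        out = [y]
        for k in range(steps):
            x = math.exp(-self.alpha_x * k * dt / self.tau)
            psi = self._psi(x)
            f = sum(p * w for p, w in zip(psi, self.w)) / (sum(psi) + 1e-10) * x * (goal - y0)
            zdot = (self.alpha_z * (self.beta_z * (goal - y) - z) + f) / self.tau
            ydot = z / self.tau
            z += zdot * dt
            y += ydot * dt
            out.append(y)
        return out
```

For example, after fitting it to a minimum-jerk demonstration from 0 to 1, calling `rollout(dt, goal=2.0)` replays the same motion shape stretched to reach 2. This property is what lets a skill library reuse one learned primitive at new start and end states.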

The experiment used seven NOKOV optical motion capture cameras to measure and track the doctor's entire suturing process. The captured objects were two surgical forceps, each fitted with three markers. From the markers' spatial coordinates, the system computed the continuous motion trajectory (position and orientation) of each forceps, and these high-precision (sub-millimeter) trajectory data were used to decompose the procedure. With its high precision and low latency, the NOKOV motion capture system is expected to play a greater role as collaborative robots become more widely used.
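Computing each forceps' position and orientation from its three markers can be sketched by building an orthonormal frame from the marker positions. The axis convention (x from marker `a` toward marker `b`, z normal to the marker plane), the use of the marker centroid as origin, and the tip offset `tip_offset` are hypothetical choices; the study's own rigid-body definition is not given here.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(a):
    n = math.sqrt(sum(x * x for x in a))
    if n < 1e-12:
        raise ValueError("degenerate marker layout (coincident or collinear markers)")
    return tuple(x / n for x in a)


def rigid_body_pose(a: Sequence[float], b: Sequence[float], c: Sequence[float]):
    """Return (origin, R) for a tool frame defined by three non-collinear markers.

    x-axis: from marker a toward marker b; z-axis: normal to the marker plane;
    y-axis: completes a right-handed frame. R is row-major with the axes as columns.
    """
    x_axis = _normalize(_sub(b, a))
    z_axis = _normalize(_cross(x_axis, _sub(c, a)))
    y_axis = _cross(z_axis, x_axis)  # unit length, since z and x are orthonormal
    origin = tuple((p + q + r) / 3.0 for p, q, r in zip(a, b, c))
    rotation = [[x_axis[i], y_axis[i], z_axis[i]] for i in range(3)]
    return origin, rotation


def tool_tip(origin: Vec3, rotation, tip_offset: Sequence[float]) -> Vec3:
    """World position of a point given in the tool frame (e.g. a hypothetical forceps tip)."""
    return tuple(origin[i] + sum(rotation[i][j] * tip_offset[j] for j in range(3))
                 for i in range(3))
```

Applied frame by frame, `rigid_body_pose` turns the three marker trajectories of each forceps into a continuous position-and-orientation trajectory, the kind of data the experiment uses for procedural decomposition.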