In recent years, as robotics research has progressed, robots have been asked to perform more functions, and the corresponding behaviors have become more complex. Simple motion control is no longer sufficient, especially for tasks whose motion patterns are difficult to describe explicitly and for which traditional control methods are largely ineffective. Such tasks require robots with stronger learning capabilities, able to interact dynamically with the environment and handle unknown situations.

Imitation learning works as follows. A human demonstrates an action and the robot acquires the trajectory data. A learning model interprets the action and a control strategy is derived from it. The robot, acting as the effector, then executes the movement to replicate the behavior, and in this way learns the motion skill.

https://www.youtube.com/watch?v=hD34o3DGYcw

Behavior acquisition - After the demonstration trajectory data are obtained, they are first preprocessed, which includes motion segmentation, dimensionality reduction, filtering, feature extraction, etc. The preprocessed data then serve as input to the learning model, ready for encoding.
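As a rough illustration of two of these preprocessing steps, the sketch below smooths a noisy marker trajectory with a centered moving average (one simple filter among many) and then reduces its dimensionality with PCA computed via SVD. The function names, window size, and synthetic trajectory are illustrative assumptions, not part of any particular system.

```python
import numpy as np


def moving_average(traj, window=5):
    """Smooth each column of an (N, D) trajectory with a centered moving average.
    The signal is reflected at both ends so the output keeps N samples."""
    if window % 2 == 0:
        raise ValueError("window must be odd")
    pad = window // 2
    padded = np.pad(traj, ((pad, pad), (0, 0)), mode="reflect")
    kernel = np.ones(window) / window
    return np.column_stack([np.convolve(padded[:, d], kernel, mode="valid")
                            for d in range(traj.shape[1])])


def pca_reduce(traj, n_components):
    """Project (N, D) data onto its top principal components (computed with SVD)."""
    mean = traj.mean(axis=0)
    centered = traj - mean
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    explained_ratio = s[:n_components] ** 2 / np.sum(s ** 2)
    return centered @ components.T, components, mean, explained_ratio


# Synthetic stand-in for one marker's 3-D trajectory with measurement noise
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 200)
clean = np.column_stack([np.sin(2 * np.pi * t), np.cos(2 * np.pi * t), t])
noisy = clean + rng.normal(scale=0.02, size=clean.shape)

smoothed = moving_average(noisy, window=7)
reduced, components, mean, ratio = pca_reduce(smoothed, n_components=2)
```

A moving average is used only because it is easy to read; in practice a Butterworth or Savitzky-Golay filter is often preferred because it distorts fast motions less.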

Behavior encoding - The observed demonstration is mapped onto the robot system. This requires a representation that generalizes well and is robust, so that the learned skill can be applied in new environments and tolerates some disturbance.

Behavior reproduction - In robot imitation learning, imitation performance must be evaluated with suitable metrics. The learned control strategy is then mapped onto the robot's actuator space through low-level motion control, so that the behavior is actually reproduced.
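The text does not name a specific imitation metric. One common choice, assumed here purely for illustration, is the dynamic time warping (DTW) distance, which compares a reproduced trajectory with the demonstration even when the two differ in timing or length. A minimal NumPy sketch (the function name and sample trajectories are hypothetical):

```python
import numpy as np


def dtw_distance(a, b):
    """DTW distance between trajectories a (N, D) and b (M, D) with Euclidean point cost."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if a.ndim == 1:
        a = a[:, None]
    if b.ndim == 1:
        b = b[:, None]
    cost = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)  # (N, M) pairwise distances
    acc = np.full((len(a) + 1, len(b) + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            # Cheapest alignment so far: advance a, advance b, or advance both
            acc[i, j] = cost[i - 1, j - 1] + min(acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1])
    return acc[-1, -1]


demo = [0.0, 1.0, 2.0, 3.0]
reproduction = [0.0, 0.0, 1.0, 2.0, 3.0]  # same path with a slower start
```

Because DTW aligns the sequences before summing distances, a reproduction that follows the same path at a different speed scores zero, whereas a point-by-point RMSE would not even be defined for sequences of different lengths.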

In addition, wide-angle lenses often distort the image near its edges, which can reduce the accuracy of the extracted 2D coordinates when the captured object is located near the edge of the frame.

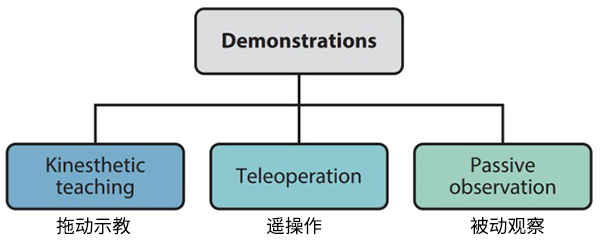

1) Teach-by-Demonstration (TbD). In Teach-by-Demonstration, also known as lead-through teaching, an operator physically guides the robot through the desired action. The robot's onboard sensors record state information during the process, such as joint angles and torques, generating training data for machine learning models. The method is intuitive and places few demands on the user, so it is widely used with lightweight industrial robots. However, demonstration quality depends on how flexibly and smoothly the operator moves, and even data captured by expert operators may need smoothing or other post-processing. In addition, because of the robot's physical form, the method works well for robotic arms but is harder to apply to other platforms such as legged robots or dexterous hands.

2) Teleoperation. Teleoperation is another demonstration method, usable for trajectory learning, task learning, grasping, and more advanced tasks. It provides external inputs to the robot through joysticks, graphical interfaces, or other means, and various interaction devices are already available, such as haptic devices and VR devices. Unlike lead-through teaching, teleoperation does not require the user to be in the same location as the robot and can be carried out remotely. Its limitations include the extra work of developing input interfaces, a longer user training process, and usability risks associated with the external devices.

3) Passive observation. In passive observation, the robot learns by observing a demonstrator. The demonstrator completes the task with their own body, and the motion (of the demonstrator's body or of the objects being manipulated) is captured by external devices; passive optical motion capture is an effective way to capture these data. During this process the robot does not take part in task execution but acts as a passive observer. The method is easy for the demonstrator, who needs no training for the demonstration process. It also suits robots with many degrees of freedom and non-humanoid robots, where using a teach pendant is difficult. The approach does, however, require mapping human actions to actions the robot can execute, and it must cope with occlusions, rapid movements, and sensor noise during the demonstration.

https://www.youtube.com/watch?v=z8SfRrUvQ_4

The NOKOV motion capture system works on the passive infrared optical principle, capturing motion data by tracking reflective markers attached to the demonstrator's body (or to the object being manipulated). The system offers high positioning accuracy: static repeatability of 0.037 mm, absolute accuracy of up to 0.087 mm, linear dynamic trajectory error of 0.2 mm, and circular trajectory error of 0.22 mm. It can also sample at up to 380 Hz at full resolution, which meets the data-collection needs of high-speed demonstrations. NOKOV engineers have more than five years of project experience and can provide solutions customized to different site conditions, reducing experimental errors caused by occlusion.

Leading international research groups in imitation learning, such as the Learning Algorithms and Systems Laboratory (LASA) at the Swiss Federal Institute of Technology in Lausanne (EPFL), the Robotics Lab at the Italian Institute of Technology, and Prof. Jan Peters's team at the Technical University of Darmstadt in Germany, all use motion capture systems as a key means of acquiring demonstration trajectory data.

Case studies of motion capture in robot learning from demonstration

Harbin Institute of Technology - Precision Control for 3C Assembly Tasks

Researchers at Harbin Institute of Technology proposed an offline programming technique based on imitation learning to automate 3C (computer, communication, and consumer electronics) assembly lines [4]. The process has two stages. In the first stage, NOKOV optical motion capture equipment records the pose of the human hand during assembly. In the second stage, the demonstration data are used to design the robot control strategy. The data are first preprocessed, using a density-based spatial clustering heuristic to segment the trajectory and a local-outlier-factor anomaly detection algorithm to remove outliers. Human assembly skills are then learned from the processed data with a probabilistic strategy based on Gaussian mixture models, enabling the robot to complete the same assembly tasks in new environments.
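A minimal sketch of the outlier-removal and GMM-based learning steps, assuming scikit-learn's `LocalOutlierFactor` and `GaussianMixture`: the demonstrations are treated as (time, position) samples, outliers are dropped with LOF, a GMM is fitted to the joint data, and Gaussian mixture regression (GMR) recovers a mean trajectory. GMR is a standard way to reproduce motion from such a model, but it is an assumption here rather than a detail taken from the cited work; the density-based segmentation step is omitted, and the helper names and synthetic 1-D data are hypothetical.

```python
import numpy as np
from sklearn.mixture import GaussianMixture
from sklearn.neighbors import LocalOutlierFactor


def remove_outliers(samples, n_neighbors=20):
    """Drop samples that LocalOutlierFactor labels as outliers (-1)."""
    labels = LocalOutlierFactor(n_neighbors=n_neighbors).fit_predict(samples)
    return samples[labels == 1]


def fit_gmm(t, x, n_components=5, seed=0):
    """Fit a full-covariance GMM to joint (time, position) data."""
    data = np.column_stack([t, x])
    return GaussianMixture(n_components=n_components, covariance_type="full",
                           random_state=seed).fit(data)


def gmr(gmm, t_query):
    """Gaussian mixture regression: E[x | t], with time as input dimension 0."""
    tq = np.atleast_1d(np.asarray(t_query, dtype=float))[:, None]  # (Q, 1)
    mu_t = gmm.means_[:, 0]                                         # (K,)
    var_t = gmm.covariances_[:, 0, 0]                               # (K,)
    cross = gmm.covariances_[:, 1:, 0]                              # (K, D)
    # Responsibility of each component for each query time (log domain for stability)
    log_h = np.log(gmm.weights_) - 0.5 * np.log(2 * np.pi * var_t) - 0.5 * (tq - mu_t) ** 2 / var_t
    log_h -= log_h.max(axis=1, keepdims=True)
    h = np.exp(log_h)
    h /= h.sum(axis=1, keepdims=True)                               # (Q, K)
    # Conditional mean of each component's output given t
    cond = gmm.means_[None, :, 1:] + (cross / var_t[:, None])[None] * (tq - mu_t)[:, :, None]
    return np.einsum("qk,qkd->qd", h, cond)                         # (Q, D)


# Hypothetical data: five noisy demonstrations of one coordinate, plus a few spikes
rng = np.random.default_rng(0)
t = np.tile(np.linspace(0.0, 1.0, 100), 5)
x = np.sin(np.pi * t) + rng.normal(scale=0.02, size=t.size)
x[::97] += 1.0
samples = np.column_stack([t, x])

clean = remove_outliers(samples)
gmm = fit_gmm(clean[:, 0], clean[:, 1:], n_components=5)
t_grid = np.linspace(0.05, 0.95, 19)
x_hat = gmr(gmm, t_grid)  # (19, 1) mean trajectory
```

For real hand-pose data, `x` would hold several columns (position and orientation), and the same GMR code returns all of them at once.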

Demonstration data are obtained with NOKOV optical motion capture equipment, which tracks three reflective markers attached to the operator's hand and offers a straightforward way to record manual assembly actions. The study also proposes an iterative path optimization technique within a reinforcement learning framework and demonstrates its effectiveness on a simple pick-and-place assembly path.
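Three non-collinear markers are enough to define a full 6-DoF hand pose. The sketch below builds a right-handed frame from three marker positions; the frame convention (origin at the marker centroid, x-axis from the first to the second marker, z-axis normal to the marker plane) and the function names are hypothetical choices for illustration.

```python
import numpy as np


def hand_frame_from_markers(p1, p2, p3):
    """Return (R, origin) for a right-handed frame defined by three non-collinear markers.

    Hypothetical convention: origin at the marker centroid, x-axis along p1 -> p2,
    z-axis normal to the plane of the three markers, y-axis completing the frame.
    """
    p1, p2, p3 = (np.asarray(p, dtype=float) for p in (p1, p2, p3))
    x_axis = p2 - p1
    z_axis = np.cross(p2 - p1, p3 - p1)
    if np.linalg.norm(z_axis) < 1e-9:
        raise ValueError("markers are (nearly) collinear")
    x_axis /= np.linalg.norm(x_axis)
    z_axis /= np.linalg.norm(z_axis)
    y_axis = np.cross(z_axis, x_axis)
    R = np.column_stack([x_axis, y_axis, z_axis])  # columns = hand axes in the mocap frame
    origin = (p1 + p2 + p3) / 3.0
    return R, origin


def to_homogeneous(R, origin):
    """Pack rotation and position into a 4x4 homogeneous transform."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = origin
    return T


# Example: the three markers after the hand has rotated 90 degrees about the vertical axis
R, origin = hand_frame_from_markers([0, 0, 0], [0, 1, 0], [-1, 0, 0])
T = to_homogeneous(R, origin)
```

Applying this to every motion capture frame turns three marker tracks into a time series of hand poses that a learning model can consume.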

Chongqing University of Posts and Telecommunications - Modeling Method for Suturing Skills of Surgical Robots Based on Imitation Learning

Surgical assistant robots help surgeons overcome the difficulties of traditional surgery related to precision, workspace, distance, and collaboration. A foundational step toward automated operations that match a physician's quality is building a model of the surgical operation.

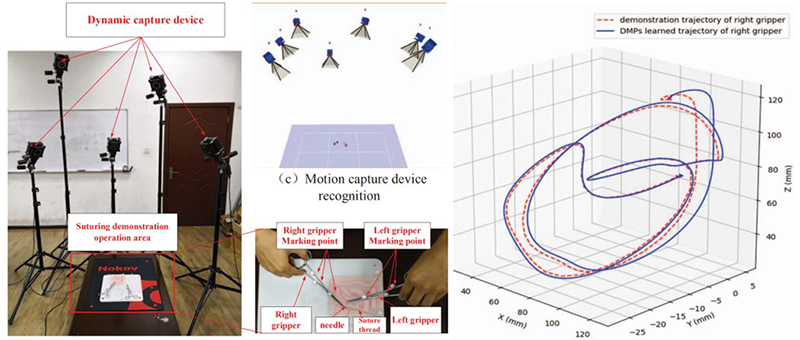

The team led by Professor Yang Dewei at Chongqing University of Posts and Telecommunications has studied the learning and modeling of suturing skills, using superficial tissue suturing as the modeling object. They built a data collection system to record doctors' suturing demonstrations.

The system includes a NOKOV motion capture system, surgical forceps, sutures, threads, and wound models. The trajectory data captured by the motion capture system are divided into several dynamic processes following the DMP (Dynamic Movement Primitives) method. The DMP model is trained on the operator's trajectory data, and the resulting model is evaluated on how well it reproduces the suturing process and adapts to new scenarios [5].
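To show what training a DMP on a demonstrated trajectory involves, here is a minimal one-dimensional discrete DMP in the common Ijspeert-style formulation: a critically damped spring-damper pulled toward the goal, plus a forcing term learned by locally weighted regression over Gaussian basis functions of a decaying phase variable. The gains, number of basis functions, class name, and the minimum-jerk stand-in demonstration are assumptions; the cited work's exact formulation may differ.

```python
import numpy as np


class DMP1D:
    """Minimal discrete Dynamic Movement Primitive for one degree of freedom."""

    def __init__(self, n_basis=30, alpha_z=25.0, alpha_s=4.0):
        self.alpha_z = alpha_z
        self.beta_z = alpha_z / 4.0  # critically damped spring-damper
        self.alpha_s = alpha_s
        # Basis centers evenly spaced in time, expressed in phase s = exp(-alpha_s * t / tau)
        self.c = np.exp(-alpha_s * np.linspace(0.0, 1.0, n_basis))
        gaps = np.abs(np.diff(self.c))
        self.h = 1.0 / np.append(gaps, gaps[-1]) ** 2  # basis widths from center spacing

    def _psi(self, s):
        return np.exp(-self.h * (np.atleast_1d(s)[:, None] - self.c) ** 2)

    def fit(self, y, dt):
        """Learn forcing-term weights from one demonstrated trajectory y sampled every dt."""
        y = np.asarray(y, dtype=float)
        self.tau = (len(y) - 1) * dt
        self.y0, self.g = y[0], y[-1]
        yd = np.gradient(y, dt)
        ydd = np.gradient(yd, dt)
        s = np.exp(-self.alpha_s * np.arange(len(y)) * dt / self.tau)
        # Forcing term required so that tau^2*ydd = alpha_z*(beta_z*(g - y) - tau*yd) + f
        f_target = self.tau ** 2 * ydd - self.alpha_z * (self.beta_z * (self.g - y) - self.tau * yd)
        xi = s * (self.g - self.y0)
        psi = self._psi(s)
        # Locally weighted regression: one weight per basis function
        self.w = (psi * (xi * f_target)[:, None]).sum(axis=0) / (
            (psi * (xi ** 2)[:, None]).sum(axis=0) + 1e-10)
        return self

    def rollout(self, dt, goal=None, start=None):
        """Integrate the DMP with explicit Euler steps; goal/start may differ from the demo."""
        g = self.g if goal is None else float(goal)
        y = self.y0 if start is None else float(start)
        y_start = y
        z, s = 0.0, 1.0
        n_steps = int(round(self.tau / dt)) + 1
        out = np.empty(n_steps)
        for i in range(n_steps):
            out[i] = y
            psi = self._psi(s)[0]
            f = psi @ self.w / (psi.sum() + 1e-10) * s * (g - y_start)
            zd = (self.alpha_z * (self.beta_z * (g - y) - z) + f) / self.tau
            yd = z / self.tau
            sd = -self.alpha_s * s / self.tau
            z, y, s = z + zd * dt, y + yd * dt, s + sd * dt
        return out


dt = 0.001
t = np.linspace(0.0, 1.0, 1001)
demo = 10 * t ** 3 - 15 * t ** 4 + 6 * t ** 5  # minimum-jerk stand-in for one suturing coordinate
dmp = DMP1D(n_basis=50).fit(demo, dt)
reproduced = dmp.rollout(dt)
retargeted = dmp.rollout(dt, goal=2.0)  # same motion shape toward a new target
```

Changing `goal` in `rollout` is how a DMP adapts a learned motion to a new scene: the forcing term is rescaled by the new start-to-goal distance, so the shape of the movement is preserved while its endpoint moves.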

Wuhan University - Trajectory Prediction

Trajectory prediction research at Wuhan University focuses on spherical flying objects and covers real-time identification, positioning, and trajectory prediction of the moving target. Together with an LSTM network model built and trained for prediction, the work addresses the identification, positioning, and trajectory prediction of spherical flying objects.

The hardware platform of the experiment consists of a Kinect depth camera and an 8-camera NOKOV motion capture system, with ROS as the robot's software platform. System calibration includes intrinsic calibration of the Kinect depth camera and joint calibration between the Kinect and the NOKOV motion capture system.
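The joint calibration method is not described here. One common approach, assumed for this sketch, is to observe the same points (for example, a marker or ball) in both the Kinect and NOKOV frames and solve for the rigid transform between them with the SVD-based Kabsch method. The function names and synthetic correspondences are hypothetical.

```python
import numpy as np


def estimate_rigid_transform(src, dst):
    """Least-squares R, t such that dst ≈ src @ R.T + t (Kabsch algorithm, no scaling)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_src).T @ (dst - mu_dst)  # 3x3 cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against returning a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_dst - R @ mu_src
    return R, t


def rot_z(deg):
    """Rotation matrix about the z-axis."""
    a = np.deg2rad(deg)
    return np.array([[np.cos(a), -np.sin(a), 0.0],
                     [np.sin(a), np.cos(a), 0.0],
                     [0.0, 0.0, 1.0]])


# Synthetic correspondences: the same points seen in the Kinect frame and the NOKOV frame
rng = np.random.default_rng(0)
p_kinect = rng.uniform(-1.0, 1.0, size=(12, 3))
R_true, t_true = rot_z(30.0), np.array([0.5, -0.2, 1.1])
p_nokov = p_kinect @ R_true.T + t_true
R_est, t_est = estimate_rigid_transform(p_kinect, p_nokov)
```

With real, noisy measurements the same function returns the least-squares fit, and the residual `p_nokov - (p_kinect @ R_est.T + t_est)` indicates calibration quality.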

Moving targets are identified by background subtraction based on a Gaussian mixture model. Point cloud information corresponding to the target pixels is obtained through phase space positioning, the Gauss-Newton method is applied to fit the centroid of the point cloud and obtain the target's spatial coordinates, and Kalman filtering refines the motion trajectory of the centroid.
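A minimal sketch of the Kalman filtering step, assuming a constant-velocity state model with gravity as a known acceleration input (a reasonable model for a ball in free flight, but not necessarily the one used in the thesis). The noise parameters `q` and `r`, the function name, and the simulated trajectory are illustrative assumptions.

```python
import numpy as np


def kalman_filter_positions(measurements, dt, accel=None, q=1e-3, r=2.5e-5):
    """Filter (N, n) noisy centroid positions; state = [position, velocity].
    accel: known constant acceleration (e.g. gravity); q: process noise; r: measurement variance."""
    z = np.asarray(measurements, dtype=float)
    n = z.shape[1]
    I, O = np.eye(n), np.zeros((n, n))
    F = np.block([[I, dt * I], [O, I]])             # constant-velocity transition
    B = np.vstack([0.5 * dt ** 2 * I, dt * I])      # effect of the known acceleration
    u = np.zeros(n) if accel is None else np.asarray(accel, dtype=float)
    H = np.hstack([I, O])                           # only position is measured
    Q = q * np.block([[dt ** 3 / 3 * I, dt ** 2 / 2 * I], [dt ** 2 / 2 * I, dt * I]])
    R = r * I
    x = np.concatenate([z[0], np.zeros(n)])
    P = np.eye(2 * n)
    filtered = np.empty_like(z)
    for k in range(len(z)):
        if k > 0:  # predict
            x = F @ x + B @ u
            P = F @ P @ F.T + Q
        # update with the k-th measurement
        S = H @ P @ H.T + R
        K = np.linalg.solve(S, H @ P).T             # Kalman gain P H^T S^-1
        x = x + K @ (z[k] - H @ x)
        P = (np.eye(2 * n) - K @ H) @ P
        filtered[k] = x[:n]
    return filtered


# Simulated ball flight sampled at 100 Hz with 5 mm measurement noise
rng = np.random.default_rng(1)
dt = 0.01
t = np.arange(100) * dt
gravity = np.array([0.0, 0.0, -9.81])
true = np.array([0.0, 0.0, 1.0]) + np.outer(t, [2.0, 0.5, 3.0]) + 0.5 * np.outer(t ** 2, gravity)
noisy = true + rng.normal(scale=0.005, size=true.shape)
filtered = kalman_filter_positions(noisy, dt, accel=gravity)
```

The first few estimates are poor because the initial velocity is unknown; after that the filter tracks the parabola more closely than the raw centroids.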

In the experiments, the researchers used an RNN-based method to predict target trajectories, collecting 1000 complete motion trajectories with the NOKOV motion capture system. They used 80% of these trajectory sequences for training and the remaining 20% for testing [6]. The trained network was then applied to predict the trajectory of an irregularly moving target, a ping-pong paddle, demonstrating its generalization capability.
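Before a recurrent network can be trained, the trajectories have to be split and cut into input/target sequences. The sketch below splits whole trajectories 80/20, so that no trajectory contributes to both sets, and turns each one into sliding windows of past and future positions. The window lengths, function names, and random-walk placeholder data are hypothetical, and the LSTM/RNN model itself is not shown.

```python
import numpy as np


def split_trajectories(trajectories, train_frac=0.8, seed=0):
    """Shuffle whole trajectories and split them so none appears in both sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(trajectories))
    n_train = int(round(train_frac * len(trajectories)))
    train = [trajectories[i] for i in order[:n_train]]
    test = [trajectories[i] for i in order[n_train:]]
    return train, test


def make_windows(trajectory, history, horizon):
    """Cut a (T, 3) trajectory into (past `history` points -> next `horizon` points) pairs."""
    inputs, targets = [], []
    for start in range(len(trajectory) - history - horizon + 1):
        inputs.append(trajectory[start:start + history])
        targets.append(trajectory[start + history:start + history + horizon])
    return np.array(inputs), np.array(targets)


# Placeholder data: 1000 random-walk "trajectories" of 50 samples each
rng = np.random.default_rng(42)
trajectories = [rng.normal(size=(50, 3)).cumsum(axis=0) for _ in range(1000)]
train, test = split_trajectories(trajectories)
X, Y = make_windows(train[0], history=10, horizon=5)
```

Splitting by trajectory rather than by window keeps overlapping windows from the same flight out of the test set, which would otherwise inflate the measured accuracy.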

References:

[1] J. Yu, Y. Men, X. Ruan and C. Xu, "The application of imitation learning in the study of robot bionic mechanisms," Journal of Beijing University of Technology, vol. 42, no. 2, pp. 210-216, 2016.

[2] H. Ravichandar, A. S. Polydoros, S. Chernova and A. Billard, "Recent Advances in Robot Learning from Demonstration," Annual Review of Control, Robotics, and Autonomous Systems, vol. 3, no. 1, pp. 297-330, 2020.

[3] H. Hu, Z. Cao, X. Yang, H. Xiong and Y. Lou, "Performance Evaluation of Optical Motion Capture Sensors for Assembly Motion Capturing," IEEE Access, vol. 9, pp. 61444-61454, 2021, doi: 10.1109/ACCESS.2021.3074260.

[4] Z. Zhao, H. Hu, X. Yang and Y. Lou, "A Robot Programming by Demonstration Method for Precise Manipulation in 3C Assembly," in 2019 WRC Symposium on Advanced Robotics and Automation (WRC SARA), 2019, pp. 172-177, doi: 10.1109/WRC-SARA.2019.8931947.

[5] D. Yang, Q. Lv, G. Liao, K. Zheng, J. Luo and B. Wei, "Learning from Demonstration: Dynamical Movement Primitives Based Reusable Suturing Skill Modelling Method," in 2018 Chinese Automation Congress (CAC), 2018, pp. 4252-4257, doi: 10.1109/CAC.2018.8623781.

[6] M. Yang, "Motion Target Trajectory Prediction based on Recurrent Neural Network," dissertation, Wuhan University, 2019.