In modern warfare, unmanned systems such as drones, unmanned vehicles, and unmanned boats are gradually being deployed on the battlefield. However, an individual unmanned system is limited in payload and task capability and can typically perform only a single combat function. To compensate for these limitations and improve adaptability to combat missions, unmanned systems can be deployed as a swarm. Swarming makes full use of their advantages, such as a wide monitoring range and strong resilience to damage, enabling them to complete complex missions independently and to carry out cross-domain swarm operations.

Swarm intelligence coordination in unmanned systems refers to the formation of several unmanned systems performing the same task under unified command and maintaining visual or tactical contact. Swarm intelligence coordination technology achieves efficient collaboration among the nodes within the swarm through cooperative perception, joint decision-making and planning, and coordinated formation control, improving adaptability to complex environments and multitasking.

NOKOV achieves sub-millimeter level real-time positioning for multiple unmanned systems

To verify the reliability of swarm intelligence coordination systems, platforms such as drone, unmanned vehicle, and unmanned vessel formations are typically designed for efficacy validation. These platforms systematically verify technologies for collaborative perception, decision-making, and time-varying formation control. They usually consist of a network communication subsystem, a multi-agent subsystem, and a composite positioning subsystem, with the motion capture system being the primary positioning method within the composite positioning subsystem.

When conducting collaborative decision-making and formation control verification, autonomous control of individual units must first be achieved. To simplify the experiment, the NOKOV motion capture system can be used to locate multiple unmanned systems simultaneously, with the captured position, velocity, and acceleration data serving as input signals for the control system. Taking drones as an example, the trajectory tracking control of a drone includes position control, pose solution, and attitude control. A typical position controller is a position-linear velocity cascade controller whose output is an acceleration expressed in the NOKOV motion capture system's coordinate frame. The pose solution module transforms this acceleration from the NOKOV motion capture system's coordinate frame to the Earth coordinate frame, and then to the body coordinate frame, where it serves as the reference acceleration. For attitude control, the reference acceleration is decomposed to solve for the reference attitude angles, which are used as inputs for steering the drone to the specified location.
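The cascade described above can be sketched as follows. This is a minimal illustration and not NOKOV's or any autopilot's implementation: the gains, limits, and function names are hypothetical, the motion capture frame is assumed to coincide with a z-up Earth frame, and the body frame is assumed to be x-forward, y-left, z-up with a yaw-pitch-roll (Z-Y-X) rotation order.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def clamp(x, limit):
    """Limit x to the interval [-limit, limit]."""
    return max(-limit, min(limit, x))


def cascade_position_control(pos, vel, pos_ref,
                             kp_pos=1.0, kp_vel=2.0, v_max=2.0, a_max=4.0):
    """Position-velocity cascade (P-P) returning an acceleration command.

    pos and vel are assumed to come from the motion capture system;
    all gains and limits are illustrative values.
    """
    acc_cmd = []
    for p, v, p_ref in zip(pos, vel, pos_ref):
        v_ref = clamp(kp_pos * (p_ref - p), v_max)          # outer loop: position
        acc_cmd.append(clamp(kp_vel * (v_ref - v), a_max))  # inner loop: velocity
    return acc_cmd


def acceleration_to_attitude(acc_cmd, yaw):
    """Decompose a world-frame acceleration into roll, pitch, and specific thrust."""
    ax, ay, az = acc_cmd
    # Horizontal acceleration expressed in the yaw-aligned frame.
    ax_b = math.cos(yaw) * ax + math.sin(yaw) * ay
    ay_b = -math.sin(yaw) * ax + math.cos(yaw) * ay
    thrust = math.sqrt(ax * ax + ay * ay + (az + G) ** 2)
    if thrust == 0.0:
        raise ValueError("zero thrust: attitude is undefined")
    roll = math.asin(max(-1.0, min(1.0, -ay_b / thrust)))
    pitch = math.atan2(ax_b, az + G)
    return roll, pitch, thrust


if __name__ == "__main__":
    acc = cascade_position_control((0, 0, 1), (0, 0, 0), (1, 0, 1))
    print(acc, acceleration_to_attitude(acc, yaw=0.0))
```

Under these conventions a forward acceleration command produces a positive pitch reference and a leftward command produces a negative roll reference; a different frame convention would change the signs.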

In distributed cluster cooperative formation control, it is necessary to complete the trajectory planning for the leader, with followers tracking the leader's actual position and maintaining a consistent relative position to keep the formation shape. In this process, an unmanned system unit only needs to exchange information with adjacent units. The leader must produce the reference trajectory and perform position control to precisely track the desired trajectory. The real-time position and orientation of the leader can be directly obtained through the NOKOV motion capture system and transmitted to the formation keeper. The other units calculate the control force based on relative positions to maintain the cluster formation shape.
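A leader-follower scheme of this kind can be sketched with a simple relative-position control law. The example below is an assumption-laden illustration, not the algorithm used on any particular platform: each unit is modeled as a 2D double integrator with unit mass, the leader moves at constant velocity, the gains `kp` and `kd` are arbitrary, and all names are hypothetical. Each follower uses only its neighbor's state.

```python
def formation_force(p_i, v_i, d_i, neighbors, kp=4.0, kd=4.0):
    """Control force for one follower from neighbor-relative errors.

    d_i is this unit's desired offset in the formation; neighbors is a list
    of (position, velocity, offset) tuples of adjacent units only.
    """
    force = [0.0, 0.0]
    for p_j, v_j, d_j in neighbors:
        for k in range(2):
            pos_err = (p_j[k] - d_j[k]) - (p_i[k] - d_i[k])
            force[k] += kp * pos_err + kd * (v_j[k] - v_i[k])
    return force


def simulate(steps=3000, dt=0.01):
    """Chain topology: unit 1 follows the leader (0), unit 2 follows unit 1."""
    offsets = [(0.0, 0.0), (-1.0, 1.0), (-2.0, 2.0)]
    pos = [[0.0, 0.0], [-3.0, 0.5], [1.0, -2.0]]
    vel = [[0.5, 0.0], [0.0, 0.0], [0.0, 0.0]]
    neighbor_ids = {1: [0], 2: [1]}
    for _ in range(steps):
        forces = {}
        for i, ids in neighbor_ids.items():
            nb = [(pos[j], vel[j], offsets[j]) for j in ids]
            forces[i] = formation_force(pos[i], vel[i], offsets[i], nb)
        for k in range(2):  # leader tracks its (constant-velocity) reference
            pos[0][k] += vel[0][k] * dt
        for i, f in forces.items():  # followers: unit-mass double integrators
            for k in range(2):
                vel[i][k] += f[k] * dt
                pos[i][k] += vel[i][k] * dt
    return pos


if __name__ == "__main__":
    for i, p in enumerate(simulate()):
        print(i, [round(c, 3) for c in p])
```

In a hardware experiment the neighbor positions would come from the motion capture broadcast rather than from a simulated state.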

When the scale models have limited payload capacity, the formation control and motion control algorithms for verification experiments (taking unmanned boats as an example) can be implemented directly on the host computer. The data acquired by the NOKOV motion capture system are transmitted to the host computer and, after processing, sent to the unmanned boats as control commands. The main function of each unmanned boat is to receive control inputs from the host computer and drive its two propellers at the corresponding speeds. Although each model boat's autonomy is low and a fully distributed formation is difficult to achieve, this centralized platform is conducive to the rapid implementation of distributed formation algorithms and motion control algorithms. Because the NOKOV motion capture workstation broadcasts the data externally, the effects of communication delays and packet loss need not be considered. The platform can also simulate communication problems such as packet loss and delays directly.
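One way to emulate packet loss and delay on such a centralized platform is to pass each unit's messages through a simulated link on the host computer. The sketch below is hypothetical (the class name, the tick-based delay model, and the loss model are all assumptions) and is independent of any NOKOV SDK.

```python
from collections import deque
import random


class SimulatedLink:
    """Fixed-delay channel that drops messages with a given probability.

    Delay is counted in control ticks; a dropped message is delivered as None.
    """

    def __init__(self, loss_prob=0.0, delay_steps=0, seed=0):
        self.loss_prob = loss_prob
        self._rng = random.Random(seed)            # seeded for repeatable tests
        self._queue = deque([None] * delay_steps)  # messages still in flight

    def step(self, message):
        """Send this tick's message; return whatever arrives this tick."""
        dropped = self._rng.random() < self.loss_prob
        self._queue.append(None if dropped else message)
        return self._queue.popleft()


if __name__ == "__main__":
    link = SimulatedLink(loss_prob=0.2, delay_steps=2, seed=1)
    last_valid = None
    for tick in range(10):
        received = link.step({"tick": tick, "neighbor_pos": (0.1 * tick, 0.0)})
        if received is not None:
            last_valid = received  # hold the last valid message on a drop
        print(tick, received, last_valid)
```

Holding the last valid message is only one possible policy; a formation algorithm under test may instead need to see the gaps explicitly.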

Furthermore, for unmanned systems using onboard sensors for positioning and formation flying, when verifying the effectiveness of swarm intelligence and collaboration technologies, it is necessary to analyze the error between the estimated motion trajectories of drones, unmanned vehicles, and unmanned boats and their actual motion trajectories. High-precision actual trajectories, commonly referred to as "ground truth," are typically required. Since motion capture systems can achieve sub-millimeter level accuracy and synchronously capture multiple unmanned systems' trajectories, whereas onboard positioning sensors like SLAM systems have errors at least on the centimeter scale, using motion capture systems as "ground truth" is reliable.
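A common way to quantify this error is the root-mean-square position error between the estimated trajectory and the ground truth. The sketch below assumes both trajectories are already time-synchronized, sampled at the same instants, and expressed in the same coordinate frame; the function names and sample data are illustrative only.

```python
import math


def position_errors(estimated, ground_truth):
    """Per-sample Euclidean error between two equally sampled trajectories."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and of equal length")
    return [math.dist(e, g) for e, g in zip(estimated, ground_truth)]


def trajectory_rmse(estimated, ground_truth):
    """Root-mean-square position error over the whole trajectory."""
    errors = position_errors(estimated, ground_truth)
    return math.sqrt(sum(err * err for err in errors) / len(errors))


if __name__ == "__main__":
    # Made-up samples: the onboard estimate is offset 3 cm sideways.
    truth = [(0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (2.0, 0.0, 1.0)]
    estimate = [(x, y + 0.03, z) for x, y, z in truth]
    print(trajectory_rmse(estimate, truth), max(position_errors(estimate, truth)))
```

In practice the two data streams usually need timestamp alignment and a rigid frame alignment before this comparison is meaningful.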

NOKOV motion capture used for collaborative case studies in swarm intelligence

NOKOV Motion Capture collaborates with multiple universities and research institutions in the development of cluster intelligence cooperative technology verification platforms, serving as an indoor positioning solution within their systems.

Beijing Institute of Technology's UAV/UGV heterogeneous swarm collaboration

Cluster intelligent collaborative control systems use consensus algorithms to complete coordinated air-ground patrol missions, employing the flexibility of unmanned vehicles to carry out encirclement tasks centered on enemy targets, resembling a satellite-like surround strategy. Based on the broad vision of drones and the high maneuverability of unmanned vehicles, drones can quickly relay located targets to unmanned vehicles, which then initiate a targeted encirclement upon receiving the information.
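As a rough illustration of the encirclement geometry only, the sketch below places a given number of unmanned vehicles at evenly spaced slots on a circle around a target position reported by a drone. It does not reproduce the consensus algorithm used in the actual system; the function name, radius, and phase parameter are hypothetical.

```python
import math


def encirclement_slots(target, radius, n_vehicles, phase=0.0):
    """Evenly spaced goal positions on a circle centered on the target.

    Increasing `phase` over time would make the slots orbit the target.
    """
    if n_vehicles < 1:
        raise ValueError("need at least one vehicle")
    cx, cy = target
    slots = []
    for k in range(n_vehicles):
        angle = phase + 2.0 * math.pi * k / n_vehicles
        slots.append((cx + radius * math.cos(angle),
                      cy + radius * math.sin(angle)))
    return slots


if __name__ == "__main__":
    # Target position as relayed by a drone (made-up coordinates, in meters).
    for slot in encirclement_slots(target=(1.0, 1.0), radius=2.0, n_vehicles=4):
        print([round(c, 3) for c in slot])
```

Each vehicle would then track its slot with its own position controller while avoiding its neighbors.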

The combined positioning system in this setup uses an external NOKOV optical motion capture system for primary positioning and correction, complemented by onboard inertial devices, forming a multi-agent combined positioning system. Drones can be positioned using a combination of external optical motion capture, inertial navigation, and visual navigation. Unmanned vehicles rely primarily on optical positioning data and fall back on pose information from the inertial devices when optical data is lost, ensuring continuous operation.
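The fallback behavior for the unmanned vehicles can be sketched as a small estimator that trusts the optical fix whenever one is available and dead-reckons from inertial acceleration otherwise. This is a deliberately simplified, hypothetical model (planar motion, acceleration already expressed in the world frame, no sensor noise or bias handling), not the fusion scheme used in the actual system.

```python
class FallbackPositioner:
    """Optical position when available, inertial dead reckoning otherwise."""

    def __init__(self):
        self.pos = None           # last position estimate (x, y)
        self.vel = (0.0, 0.0)     # last velocity estimate
        self.source = "none"      # which sensor produced the current estimate

    def update(self, optical_pos, accel, dt):
        """optical_pos is None when the motion capture data is lost."""
        if optical_pos is not None:
            if self.pos is not None:
                # Refresh velocity by finite difference of the last estimates.
                self.vel = tuple((n - o) / dt
                                 for n, o in zip(optical_pos, self.pos))
            self.pos = tuple(optical_pos)
            self.source = "optical"
        elif self.pos is not None:
            # Optical data lost: integrate inertial acceleration instead.
            self.vel = tuple(v + a * dt for v, a in zip(self.vel, accel))
            self.pos = tuple(p + v * dt for p, v in zip(self.pos, self.vel))
            self.source = "inertial"
        return self.pos


if __name__ == "__main__":
    est = FallbackPositioner()
    fixes = [(0.0, 0.0), (0.1, 0.0), None, None, (0.4, 0.0)]
    for fix in fixes:
        print(est.update(fix, accel=(0.0, 0.0), dt=0.1), est.source)
```

Dead reckoning drifts quickly, so a fallback like this is only suitable for bridging short optical dropouts.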

Collaborative obstacle avoidance for unmanned vehicles from the Institute of Automation, Chinese Academy of Sciences

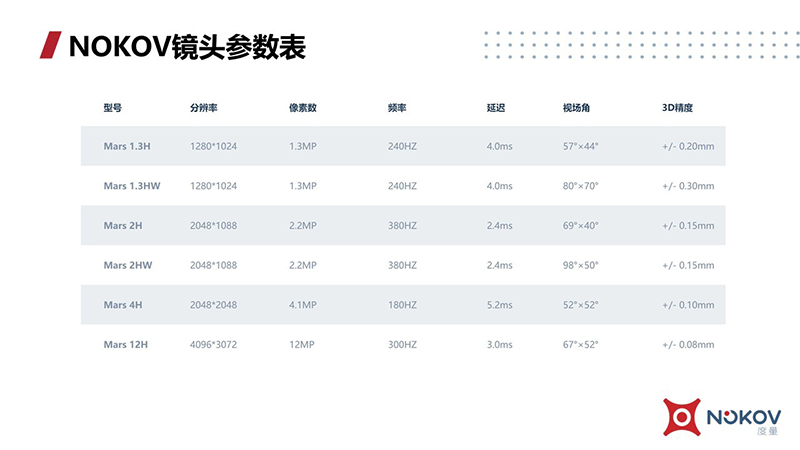

The team led by Professor Pu Zhiqiang from the Institute of Automation, Chinese Academy of Sciences, has researched and developed an intelligent unmanned cluster system. This unmanned cluster system is divided into three subsystems: the positioning subsystem, the communication subsystem, and the control subsystem. The positioning subsystem integrates the NOKOV optical motion capture system with onboard inertial units for positioning. Considering the capture range, a total of 24 Mars2H optical positioning cameras were set up, including 8 cameras arranged at a height of 5 meters and 16 cameras at 8.5 meters, covering a space of 12 m × 12 m × 8.5 m.

During experiments, reflective markers are placed on each unmanned vehicle and obstacle. Different arrangements of these markers are used to distinguish the individual IDs of the robots. By capturing the reflective markers on the unmanned vehicles and obstacles, the 3D coordinates of the markers are obtained and then broadcast in real-time using an SDK. A single unmanned vehicle can receive position information about itself, nearby unmanned vehicles, and obstacles with sub-millimeter accuracy.
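To illustrate how distinct marker arrangements can encode identity, the sketch below matches an observed marker set against known templates by comparing sorted pairwise distances, which do not change when the rigid body moves or rotates. This is a generic technique shown under assumptions, not a description of how the NOKOV software identifies rigid bodies; the template names, coordinates, and tolerance are made up.

```python
import math
from itertools import combinations


def distance_signature(markers):
    """Sorted pairwise distances: invariant to translation and rotation."""
    return sorted(math.dist(a, b) for a, b in combinations(markers, 2))


def identify(markers, templates, tol=0.005):
    """Return the template ID whose signature best matches, or None."""
    observed = distance_signature(markers)
    best_id, best_err = None, tol
    for body_id, template in templates.items():
        reference = distance_signature(template)
        if len(reference) != len(observed):
            continue  # different marker count cannot match
        err = max(abs(o - r) for o, r in zip(observed, reference))
        if err <= best_err:
            best_id, best_err = body_id, err
    return best_id


if __name__ == "__main__":
    # Hypothetical marker layouts in meters, one per vehicle.
    templates = {
        "ugv_1": [(0.0, 0.0, 0.0), (0.10, 0.0, 0.0), (0.0, 0.20, 0.0)],
        "ugv_2": [(0.0, 0.0, 0.0), (0.15, 0.0, 0.0), (0.0, 0.15, 0.0)],
    }
    # ugv_1 layout rotated 90 degrees about z and shifted to (1, 1, 0).
    observed = [(1.0, 1.0, 0.0), (1.0, 1.1, 0.0), (0.8, 1.0, 0.0)]
    print(identify(observed, templates))
```

For this to work, the marker layouts must differ from one another by clearly more than the chosen tolerance.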