Lower limb exoskeleton robots are wearable devices that integrate human biomechanics with mechanical characteristics. They involve disciplines such as robotics, ergonomics, control theory, sensor technology, and information processing technology, representing an integration of various advanced technologies. Lower limb exoskeletons can restore walking ability to people with mobility impairments and provide enhanced physical strength to support larger loads, offering significant application value.

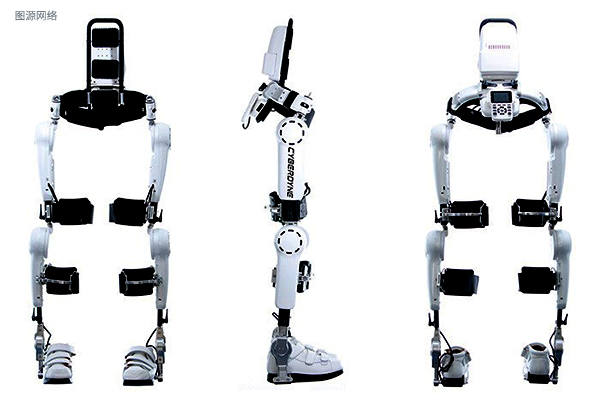

Lower limb exoskeleton robots can be categorized by function into rehabilitation exoskeletons and power-augmenting exoskeletons. Mainstream international products include Israel's ReWalk, Japan's HAL, and the United States' Ekso GT, among others, but they are relatively expensive. In recent years, domestic exoskeleton research has developed steadily and is attracting growing attention from research institutions and the market.

Human motion mechanism research is the foundation for lower limb exoskeleton development

A lower limb exoskeleton system typically consists of four main components: biomimetic mechanical legs; a power source and actuators; sensors; and a controller. Its development process encompasses key technologies such as the biomechanics of human lower limb motion, kinematics and dynamics of exoskeleton mechanisms, detection of movement status and recognition of human motion intention, motion planning, and control of the drive system.

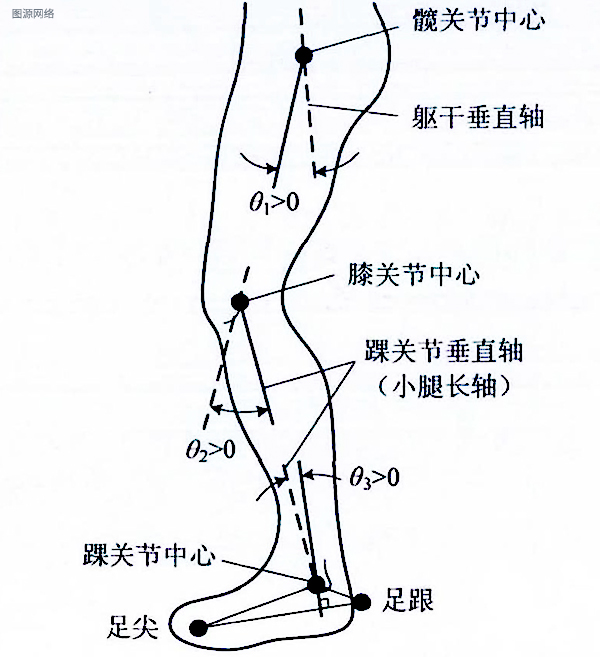

In use, a lower limb exoskeleton must move in sync and in coordination with the wearer's legs. Research into the lower limb walking mechanism therefore informs the choice of degrees of freedom for each leg joint, the drive configuration for each degree of freedom, actuator selection, the layout of the sensors that detect the human-machine motion state, and the recognition of human motion intent from sensor signals.

Furthermore, to allow lower-limb exoskeletons to have a wider range of settings for load, walking speed, and stride length, and to be applicable in different walking scenarios such as flat ground, slopes, and stairs, research into walking excitation for lower limbs is necessary. This requires conducting human walking experiments under various gait conditions to obtain kinematic data for the lower limb joints in different working conditions and to perform dynamic analysis and modeling of the designed lower-limb exoskeleton mechanisms.

NOKOV motion capture accurately captures human motion data

In common lower limb movements such as walking, squatting, standing, and climbing stairs, the entire lower limb structure is involved. These common actions are also the movements that lower limb exoskeleton robots need to perform.

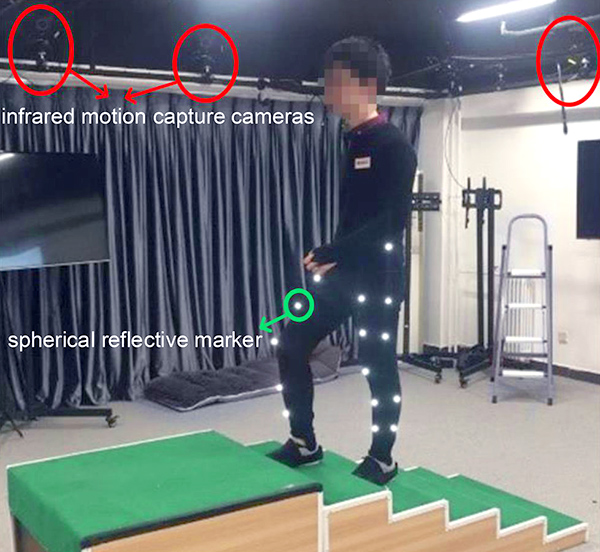

The key to collecting kinematic data is capturing high-precision motion data of the human body in 3D space. The NOKOV motion capture system operates on a passive optical principle: motion capture cameras arranged around the capture area locate targets in 3D space in real time. It offers sub-millimeter positioning accuracy, a sampling frequency of up to 380 Hz, and markers that do not interfere with human motion. The data collected includes the real-time angles, angular velocities, and angular accelerations of the lower limb joints, as well as the trajectories, velocities, and accelerations of the centers of mass of various body parts.
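As a rough illustration of how joint angles and their derivatives can be derived from marker positions, the sketch below computes the angle at a joint from three markers and differentiates it numerically. The function names, the three-marker layout (for example hip, knee, ankle), and the use of plain finite differences are assumptions made for this example; they are not the NOKOV software's own processing pipeline.

```python
import numpy as np


def joint_angle(proximal, joint, distal):
    """Angle in degrees at `joint` between the two adjacent segments.

    Each argument is an (n_frames, 3) array of marker positions.
    A fully extended joint gives 180 degrees.
    """
    u = proximal - joint  # vector from the joint to the proximal marker
    v = distal - joint    # vector from the joint to the distal marker
    cos_theta = np.sum(u * v, axis=1) / (
        np.linalg.norm(u, axis=1) * np.linalg.norm(v, axis=1)
    )
    # Clip to guard against rounding slightly outside [-1, 1].
    return np.degrees(np.arccos(np.clip(cos_theta, -1.0, 1.0)))


def derivatives(angle_deg, sample_rate_hz):
    """Angular velocity (deg/s) and acceleration (deg/s^2) by finite differences."""
    dt = 1.0 / sample_rate_hz
    velocity = np.gradient(angle_deg, dt)
    acceleration = np.gradient(velocity, dt)
    return velocity, acceleration


if __name__ == "__main__":
    # Hypothetical two-frame recording: a straight leg, then a right angle at the knee.
    hip = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]])
    knee = np.array([[0.0, 0.0, 0.5], [0.0, 0.0, 0.5]])
    ankle = np.array([[0.0, 0.0, 0.0], [0.5, 0.0, 0.5]])
    print(joint_angle(hip, knee, ankle))
```

In practice the marker trajectories would usually be low-pass filtered before differentiation, because finite differences amplify measurement noise.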

Capture facilities often include a walkway, a force platform, and stairs placed at the center of the capture area. Combining motion capture with the force platform yields not only kinematic data such as joint angles and angular velocities but also kinetic data such as joint torques and power, which are computed from a human biomechanical model and the collected kinematic data.
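The relationship between the kinetic quantities mentioned above can be sketched in a few lines. The example assumes the joint torque has already been obtained by inverse dynamics, which is not shown here; the function names and units are illustrative choices for this example only.

```python
import numpy as np


def joint_power(torque_nm, angular_velocity_deg_s):
    """Instantaneous joint power in watts: P = torque * angular velocity (rad/s)."""
    return np.asarray(torque_nm, dtype=float) * np.radians(angular_velocity_deg_s)


def joint_work(power_w, sample_rate_hz):
    """Mechanical work in joules, by trapezoidal integration of power over time."""
    power_w = np.asarray(power_w, dtype=float)
    dt = 1.0 / sample_rate_hz
    return float(np.sum(0.5 * (power_w[1:] + power_w[:-1])) * dt)
```

Positive power indicates that the joint is generating energy and negative power that it is absorbing energy, which is one input to actuator sizing.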

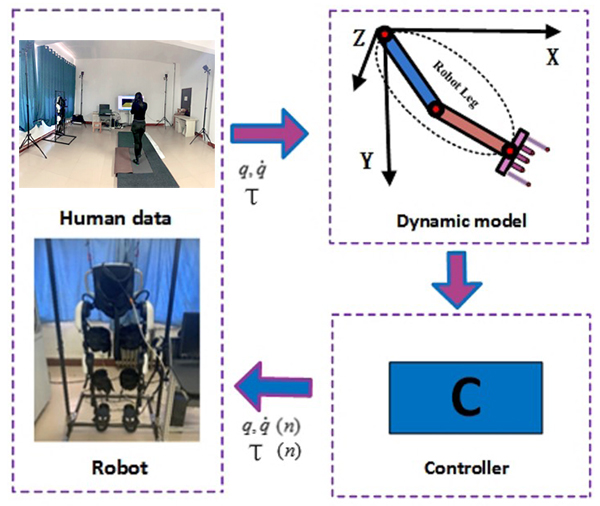

The human motion data collected under different working conditions can not only guide the design of the bionic structures of lower limb exoskeletons but also serve as the foundation for the study of the dynamics of exoskeleton robots.

Human motion data is needed for more than structural design. In assistive training with a lower limb exoskeleton, normal gait data from healthy individuals is collected with the NOKOV motion capture system and transmitted to the exoskeleton robot, which then tracks this normal gait trajectory to help rehabilitate patients.

NOKOV Motion Capture Application Examples

In exoskeleton robot research and development, many research groups choose the NOKOV motion capture system for gait data collection and performance verification. Below are some customer case studies.

Southern University of Science and Technology

Professor Fu Chenglong's Human Augmentation Robotics Laboratory at Southern University of Science and Technology primarily focuses on the research of biomechatronic wearable robots. Researchers synchronize the NOKOV optical 3D motion capture system with a 3D force measurement platform, surface electromyography, and plantar pressure measurement devices to acquire a person's six degrees of freedom (6DoF) motion trajectory and kinematic parameters for precise gait analysis. This serves as foundational data for motion and posture planning and addresses compatibility issues.

Beihang University (Beijing University of Aeronautics and Astronautics)

Professor Chen Weihai's team at Beihang University has developed a depth-camera-based adaptive gait generation algorithm for lower limb exoskeletons, tailored to the typical scenario of stair climbing. It builds on methods for visual stair perception and stair ascent gait generation.

To gather a natural set of stair-walking gait patterns for reference, Professor Chen's team utilized the NOKOV motion capture system—comprising 16 motion capture cameras, a motion capture suit with reflective markers, and motion analysis software—to collect a series of lower limb movement data from healthy individuals walking on flat ground and stairs. The motion capture cameras tracked the reflective markers on the lower limbs, and the system calculated the 3D coordinates of each marker. The motion analysis software then mapped each marker to specific locations on a human body model, forming a complete lower limb joint structure. In the study, the hip and knee joint angles captured by the motion capture system were used to represent the lower limb walking gait.

Original text link: https://aip.scitation.org/doi/abs/10.1063/1.5109741

Zhongyuan University of Technology

The team led by Professor Wang Ai-Hui from Zhongyuan University of Technology has proposed a control method for patients suffering from lower limb motor disorders due to central nervous system diseases like stroke. This method uses gait data from healthy individuals as control input and designs a robust adaptive PD controller, enabling lower limb rehabilitation robots to follow the gait trajectory of healthy individuals for rehabilitation training.
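To make the idea of trajectory tracking concrete, the sketch below simulates a plain PD controller driving a single joint along a sinusoidal reference. It is not the robust adaptive PD controller proposed by the team: the single-joint inertia and damping model, the gains, and the sinusoidal reference are all hypothetical values chosen for illustration.

```python
import numpy as np


def simulate_pd_tracking(kp=2000.0, kd=60.0, inertia=0.5, damping=0.1,
                         dt=0.001, duration=2.0, theta0=0.1):
    """Track a 1 Hz sinusoidal joint angle with a plain PD controller.

    Assumed joint model: inertia * theta'' = torque - damping * theta'.
    Returns the time vector and the tracking error in radians.
    """
    n = int(round(duration / dt))
    t = np.arange(n) * dt
    ref = 0.3 * np.sin(2.0 * np.pi * t)                    # desired angle (rad)
    ref_vel = 0.3 * 2.0 * np.pi * np.cos(2.0 * np.pi * t)  # desired velocity (rad/s)

    theta, omega = theta0, 0.0  # start away from the reference
    error = np.empty(n)
    for i in range(n):
        e = ref[i] - theta
        error[i] = e
        torque = kp * e + kd * (ref_vel[i] - omega)  # PD control law
        alpha = (torque - damping * omega) / inertia
        omega += alpha * dt   # semi-implicit Euler integration
        theta += omega * dt
    return t, error


if __name__ == "__main__":
    t, error = simulate_pd_tracking()
    print("max |error| after 1 s (rad):", np.max(np.abs(error[t >= 1.0])))
```

With fixed gains and no feedforward term, a small residual tracking error remains after the initial transient. Reducing that error in the presence of model uncertainty is the kind of problem that robust and adaptive extensions are designed to address.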

Researchers used the NOKOV motion capture system to collect human gait data, obtaining real-time spatial positions of joints by capturing reflective markers placed on human joints. After fitting and filtering the data, the joint angles of the lower limb hip and knee joints can be calculated through inverse kinematics, serving as data input for the exoskeleton robot controller.
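The filtering and inverse kinematics steps can be sketched for a simplified case. The example below assumes a planar (sagittal-plane) two-link leg with the hip at the origin, uses a moving average as a stand-in for the filtering step (the actual filter is not specified here), and introduces hypothetical function names and segment lengths. A forward kinematics helper is included so the result can be checked by a round trip.

```python
import numpy as np


def moving_average(signal, window=5):
    """Smooth a 1D signal with an odd-length moving average (edge-padded)."""
    if window % 2 == 0:
        raise ValueError("window must be odd")
    padded = np.pad(np.asarray(signal, dtype=float), window // 2, mode="edge")
    return np.convolve(padded, np.ones(window) / window, mode="valid")


def forward_kinematics(hip_angle, knee_angle, thigh, shank):
    """Ankle position (x forward, z up) relative to the hip, angles in radians.

    Hip angle is measured from the downward vertical, positive forward;
    knee angle is flexion, positive when the shank rotates backward.
    """
    x = thigh * np.sin(hip_angle) + shank * np.sin(hip_angle - knee_angle)
    z = -thigh * np.cos(hip_angle) - shank * np.cos(hip_angle - knee_angle)
    return x, z


def inverse_kinematics(x, z, thigh, shank):
    """Hip and knee angles (rad) that place the ankle at (x, z); knee flexion >= 0."""
    d2 = x ** 2 + z ** 2
    cos_knee = (d2 - thigh ** 2 - shank ** 2) / (2.0 * thigh * shank)
    knee = np.arccos(np.clip(cos_knee, -1.0, 1.0))
    # Direction of the hip-to-ankle line, plus the offset caused by knee flexion.
    hip = np.arctan2(x, -z) + np.arctan2(shank * np.sin(knee),
                                         thigh + shank * np.cos(knee))
    return hip, knee
```

A typical use would smooth the ankle marker's x and z trajectories with `moving_average`, subtract the hip marker position, and pass the result to `inverse_kinematics` frame by frame or as whole arrays.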

Experiments have shown that the hip and knee joints of lower limb rehabilitation robots can quickly follow the desired angles after initial minor fluctuations, and the errors in joint angles and speeds can rapidly converge to zero. The initial small fluctuations in joint positions are also within the safe range of joint motion, ensuring the safety and stability of the system. This also verifies the safety and feasibility of the proposed algorithm. A similarity function confirms that the robot's motion trajectory closely resembles human movement, objectively indicating high comfort during human-robot interaction.

Original text link: https://peerj.com/articles/cs-394/