At the Tokyo 2020 Olympic Games, a wide range of high-tech applications attracted attention. For example, Olympic staff used powered exoskeleton robots when carrying heavy objects, which reduced the risk of injury from the load on the body.

In recent years, upper limb exoskeleton robots have attracted extensive attention. Besides improving worker mobility and efficiency, robotic exoskeletons can be worn on the limbs of people with movement disorders to assist their joints. Exoskeleton-assisted training can stand in for physiotherapists in delivering rehabilitation training to patients, and it can record treatment parameters so that training becomes more efficient and better targeted. An exoskeleton robot for upper limb rehabilitation is worn on the arm and supplies torque to the arm joints.

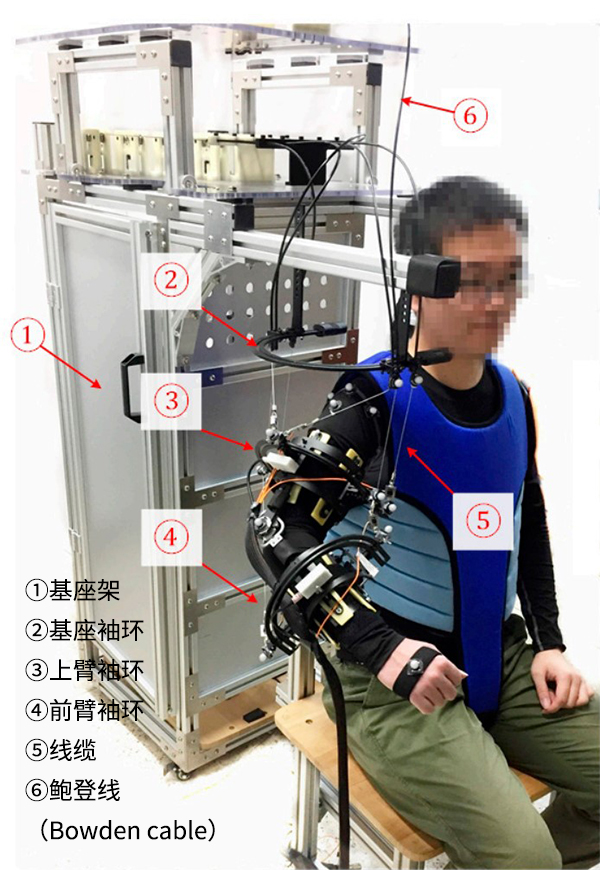

To improve the robustness and accuracy of exoskeleton motion control while preserving adaptability and comfort, researchers from Beihang University developed a cable-driven arm exoskeleton. Its mechanical design includes a special cuff (sleeve ring) device that improves the fit between the exoskeleton and the human arm. The researchers established a kinematic model of the exoskeleton and improved its accuracy by iteratively identifying uncertain parameters, which reduces the uncertainties in the kinematics of the human arm skeleton and in the attachment of the exoskeleton to the upper limb.
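The idea of iteratively identifying an uncertain attachment parameter can be illustrated with a deliberately small toy problem. The sketch below is not the kinematic model or the identification algorithm from the study. It assumes a hypothetical planar, single-cable setup in which the cable runs from an anchor at a known distance `a` from the joint center to a cuff point at an unknown distance `d`, and it uses a Gauss-Newton iteration (an assumed choice) to recover `d` from measured cable lengths. All names and numbers are illustrative.

```python
import math


def model_length(theta, a, d):
    """Cable length for joint angle theta (radians), from the law of cosines.

    a: known distance from the joint center to the cable anchor (hypothetical)
    d: distance from the joint center to the cuff attachment (uncertain)
    """
    return math.sqrt(a * a + d * d - 2.0 * a * d * math.cos(theta))


def identify_cuff_offset(thetas, measured, a, d0, iterations=20, tol=1e-12):
    """Estimate d by Gauss-Newton least squares on the cable-length residuals."""
    d = d0
    for _ in range(iterations):
        num = 0.0  # accumulates J^T r
        den = 0.0  # accumulates J^T J
        for theta, l_meas in zip(thetas, measured):
            l_model = model_length(theta, a, d)
            # Derivative of the modeled length with respect to d.
            jac = (d - a * math.cos(theta)) / l_model
            num += jac * (l_meas - l_model)
            den += jac * jac
        step = num / den
        d += step
        if abs(step) < tol:
            break
    return d


# Synthetic, noise-free "measurements" generated from a made-up true offset.
thetas = [0.5, 1.0, 1.5, 2.0]
true_d = 0.25
measured = [model_length(t, 0.20, true_d) for t in thetas]

d_hat = identify_cuff_offset(thetas, measured, a=0.20, d0=0.20)
print(f"identified offset: {d_hat:.6f} m")
```

In a real exoskeleton several parameters (joint centers, cuff positions) would be identified together in three dimensions, so the scalar update here would become a matrix least-squares step.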

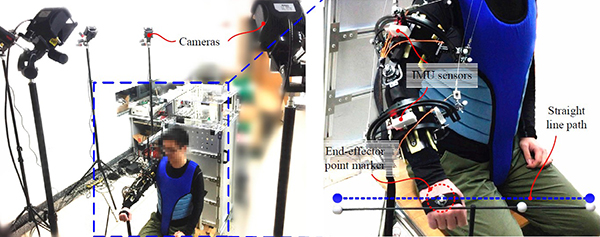

To verify the accuracy of the parameter identification method, which covers the attachment errors of the shoulder and elbow centers and of the upper-arm and forearm cuffs, the researchers built a prototype exoskeleton and performed motion tracking experiments using the NOKOV motion capture system. In the experiment, subjects wore the exoskeleton while seated in a chair. The task was to move the right upper limb along a straight path four times (moving the arm in a straight line along a T-bar).

One marker was placed on the subject's hand to track its trajectory during the experiment. Fourteen markers were placed at the cable routing points on the cuffs of the exoskeleton to capture the changes in cable length (arm movement was evaluated through the cable lengths). A marker attached to the subject's shoulder joint recorded the movement of the joint center. Because the NOKOV motion capture system has sub-millimeter accuracy, its measurements were treated as the ground truth for the experiment. The motion capture results were compared with the identification results to verify the accuracy of the kinematic model.
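As a rough illustration of how cable length can be derived from marker data, the sketch below sums the straight-line distances between consecutive routing points of one cable in each captured frame. The data layout, function names, and coordinates are assumptions made for this example. They are not the NOKOV export format or the researchers' processing code.

```python
import math


def cable_length(routing_points):
    """Length of one cable as the sum of straight segments between
    consecutive 3D routing points (x, y, z), in the units of the input."""
    return sum(
        math.dist(p, q) for p, q in zip(routing_points, routing_points[1:])
    )


def cable_lengths_over_time(frames):
    """Cable length per frame; each frame is an ordered list of routing points."""
    return [cable_length(frame) for frame in frames]


# Two made-up frames for a cable routed through three markers (millimeters).
frames = [
    [(0.0, 0.0, 0.0), (30.0, 40.0, 0.0), (30.0, 40.0, 120.0)],
    [(0.0, 0.0, 0.0), (30.0, 40.0, 0.0), (30.0, 40.0, 100.0)],
]
lengths = cable_lengths_over_time(frames)
print(lengths)
```

The change in length between frames, rather than the absolute length, is what reflects the arm movement.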

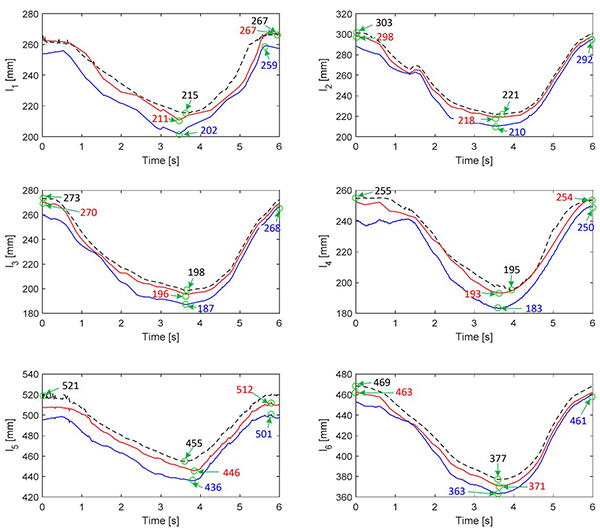

The figure below shows the change in cable length during the experiment. The results calculated by the kinematic model with parameter identification (solid red line) are closer to the ground-truth values measured by the NOKOV motion capture system (dashed line) than the results calculated without identification (solid blue line). The RMS errors between the motion capture results and the results with and without identification show that identifying the uncertain parameters effectively improves the kinematic model.

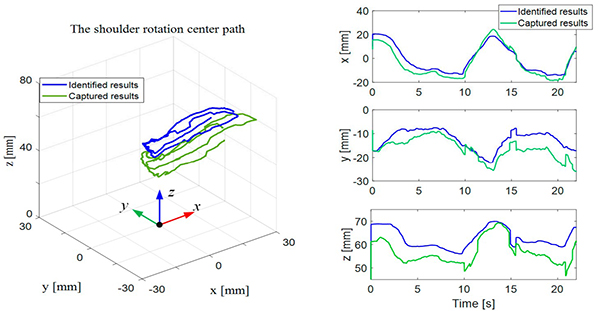
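The RMS error comparison described above can be sketched in a few lines. The function below is the standard root-mean-square error. The sample values are invented purely to show the calculation and are not results from the study.

```python
import math


def rms_error(estimated, measured):
    """Root-mean-square error between two equally long sequences."""
    if len(estimated) != len(measured) or not estimated:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(e - m) ** 2 for e, m in zip(estimated, measured)]
    return math.sqrt(sum(squared) / len(squared))


# Invented cable lengths (mm): motion capture vs. model output
# with and without parameter identification.
mocap = [200.0, 205.0, 211.0, 218.0]
with_identification = [200.5, 204.6, 211.3, 217.8]
without_identification = [203.0, 208.5, 214.0, 222.0]

print("RMS with identification:   ", rms_error(with_identification, mocap))
print("RMS without identification:", rms_error(without_identification, mocap))
```

A lower RMS error for the identified model than for the unidentified one is the pattern the article reports for the measured cable lengths.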

The diagram below shows how the center position of the human shoulder joint changes with body movement. The solid green line is the shoulder center trajectory measured by the NOKOV motion capture system, and the blue curve is the identified shoulder center trajectory. The identification results are largely consistent with the motion capture measurements, which indicates that the proposed method predicts human shoulder joint movement well.

Bibliography: