Abstract

Accurate 6D pose tracking of objects is crucial in robotic manipulation, particularly in complex assembly tasks such as pin insertion. Purely visual tracking methods are often limited by occlusion and noisy visual input, especially when the object is held in a robotic hand. This article introduces TEG-Track, a method proposed by the team led by Professor Yi Li at the Institute for Interdisciplinary Information Sciences, Tsinghua University, and published in IEEE Robotics and Automation Letters (RA-L). TEG-Track improves generalizable 6D pose tracking by integrating tactile sensing. The team also constructed the first fully annotated visual-tactile dataset for in-hand object pose tracking in real-world scenarios, using a data acquisition system that includes the NOKOV motion capture system.

Code and Dataset: https://github.com/leolyliu/TEG-Track

Article: https://ieeexplore.ieee.org/document/10333330/

Citation

Y. Liu et al., "Enhancing Generalizable 6D Pose Tracking of an In-Hand Object With Tactile Sensing," in IEEE Robotics and Automation Letters, vol. 9, no. 2, pp. 1106-1113, Feb. 2024, doi: 10.1109/LRA.2023.3337690.

Research Background

Reliable robotic manipulation depends on accurately perceiving the motion state of the object held in the hand. Existing 6D pose tracking methods typically rely on RGB-D visual data and perform poorly under occlusion and collisions with the environment. Tactile sensors, in contrast, directly sense the geometry and motion of the contact area, providing an auxiliary signal for tracking.

System Framework

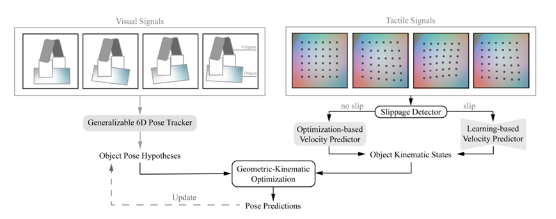

The core of TEG-Track lies in using tactile kinematic cues to enhance visual pose trackers through a geometric motion optimization strategy.
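To make the idea of a kinematic cue concrete, the sketch below propagates an object pose by one time step from an estimated object velocity. It is a simplified illustration, not the optimization used in TEG-Track: the function names, the assumption that the velocity is expressed in the camera frame, and the first-order treatment of translation are all assumptions made for this example.

```python
import math


def rotation_from_axis_angle(axis, angle):
    """Rodrigues' formula: 3x3 rotation matrix for a unit axis and an angle in radians."""
    x, y, z = axis
    c, s = math.cos(angle), math.sin(angle)
    k = 1.0 - c
    return [
        [c + x * x * k, x * y * k - z * s, x * z * k + y * s],
        [y * x * k + z * s, c + y * y * k, y * z * k - x * s],
        [z * x * k - y * s, z * y * k + x * s, c + z * z * k],
    ]


def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][m] * b[m][j] for m in range(3)) for j in range(3)] for i in range(3)]


def matvec(a, v):
    """Product of a 3x3 matrix and a 3-vector."""
    return [sum(a[i][m] * v[m] for m in range(3)) for i in range(3)]


def propagate_pose(R, t, omega, v, dt):
    """Advance an object pose (R, t) by one step of length dt.

    omega: angular velocity in rad/s, v: linear velocity in m/s, both
    hypothetically estimated from tactile sensing and expressed in the
    same frame as the pose. Translation uses a first-order approximation.
    """
    speed = math.sqrt(sum(w * w for w in omega))
    angle = speed * dt
    if angle < 1e-12:
        # Negligible rotation: keep the orientation unchanged.
        dR = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    else:
        dR = rotation_from_axis_angle([w / speed for w in omega], angle)
    R_new = matmul(dR, R)
    t_rot = matvec(dR, t)
    t_new = [t_rot[i] + v[i] * dt for i in range(3)]
    return R_new, t_new
```

A pose predicted this way could serve as a motion prior that a visual tracker's estimate is checked against or blended with; how TEG-Track actually combines the two is defined in the paper and the linked code.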

Dataset

The synthetic dataset includes object instances with a variety of geometric shapes selected from the ShapeNet dataset, while the real-world dataset comprises 200 videos covering 17 different objects across 5 categories. The data acquisition system consists of a robotic arm, tactile sensors, RGB-D sensors, the NOKOV motion capture system, and the manipulated objects.

Experimental Results

The experiments measured how much TEG-Track improves three types of visual trackers: keypoint-based (BundleTrack), regression-based (CAPTRA), and template-based (ShapeAlign). On real data, TEG-Track reduced the average rotation error by 21.4% and the average translation error by 30.9%.

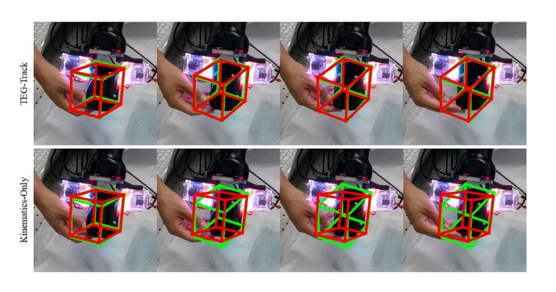

The figure above shows qualitative results on long trajectories in real data. Red and green boxes represent the predicted and ground-truth poses of the in-hand object, respectively.

TEG-Track was also tested with tactile signals of varying quality by simulating tactile noise patterns. It proved more stable and robust than baseline methods that rely solely on tactile or visual input.

TEG-Track achieved a processing speed of 20 frames per second in multi-frame optimization scenarios, with low additional computational cost, making it suitable for real-time applications.

The NOKOV motion capture system provides the ground-truth object poses against which the predicted poses are compared.
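A comparison between a predicted pose and a motion-capture pose is commonly summarized by a rotation error and a translation error. The sketch below shows one standard way to compute both, using the geodesic angle between rotation matrices and the Euclidean distance between translations. The function names are illustrative, and the paper's exact evaluation protocol (for example, its handling of symmetric objects) may differ.

```python
import math


def rotation_error_deg(R_pred, R_gt):
    """Geodesic angle in degrees between two 3x3 rotation matrices.

    Uses trace(R_pred^T R_gt) = 1 + 2*cos(theta); for matrices stored as
    nested lists, that trace equals the sum of elementwise products.
    """
    trace = sum(R_pred[i][j] * R_gt[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_theta))


def translation_error(t_pred, t_gt):
    """Euclidean distance between two translation vectors (same unit as the inputs)."""
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(t_pred, t_gt)))
```

Averaging these per-frame errors over a video yields figures comparable in kind to the average rotation and translation errors reported above.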