A research team from Southern University of Science and Technology published a paper titled "Proprioceptive State Estimation for Amphibious Tactile Sensing" in IEEE Transactions on Robotics (T-RO). The paper proposes a novel vision-based proprioception approach that combines a high-frame-rate in-finger camera with a volumetric discretized model of the soft finger to estimate the state of a soft robotic finger in real time and with high fidelity. The approach was benchmarked against a NOKOV motion capture system and a haptic device, and both benchmarks show state-of-the-art accuracy. The approach also proved robust in both terrestrial and aquatic environments.

Citation:

N. Guo et al., "Proprioceptive State Estimation for Amphibious Tactile Sensing," in IEEE Transactions on Robotics, vol. 40, pp. 4684-4698, 2024, doi: 10.1109/TRO.2024.3463509.

Background:

The main approaches to proprioceptive state estimation (PropSE) in soft robotics include Point-wise Sensing, Bio-inspired Sparse Sensing Array, and Visuo-Tactile Dense Image Sensing. Visuo-tactile sensing is an emerging approach that uses modern imaging technology to track the deformation of soft materials, thereby increasing sensing resolution. However, it still faces challenges: visual sensing is difficult in aquatic environments, the high computational cost of modeling soft robots limits real-time perception, and sensor adaptability and calibration are problematic in amphibious environments. In addition, the flexible and deformable nature of soft robots makes traditional position and orientation sensing difficult, especially during dynamic interactions and transitions between different media. Developing a tactile sensing approach suited to amphibious applications therefore remains an open problem.

Contributions:

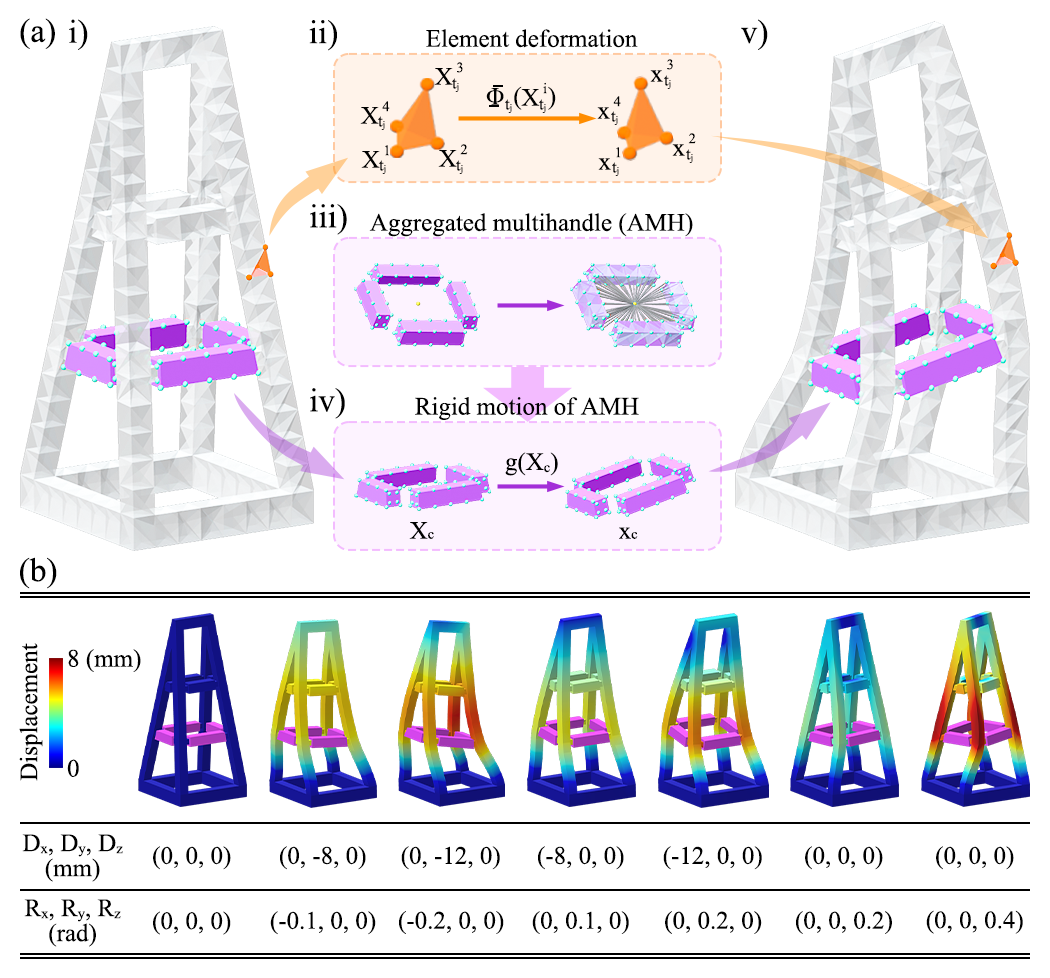

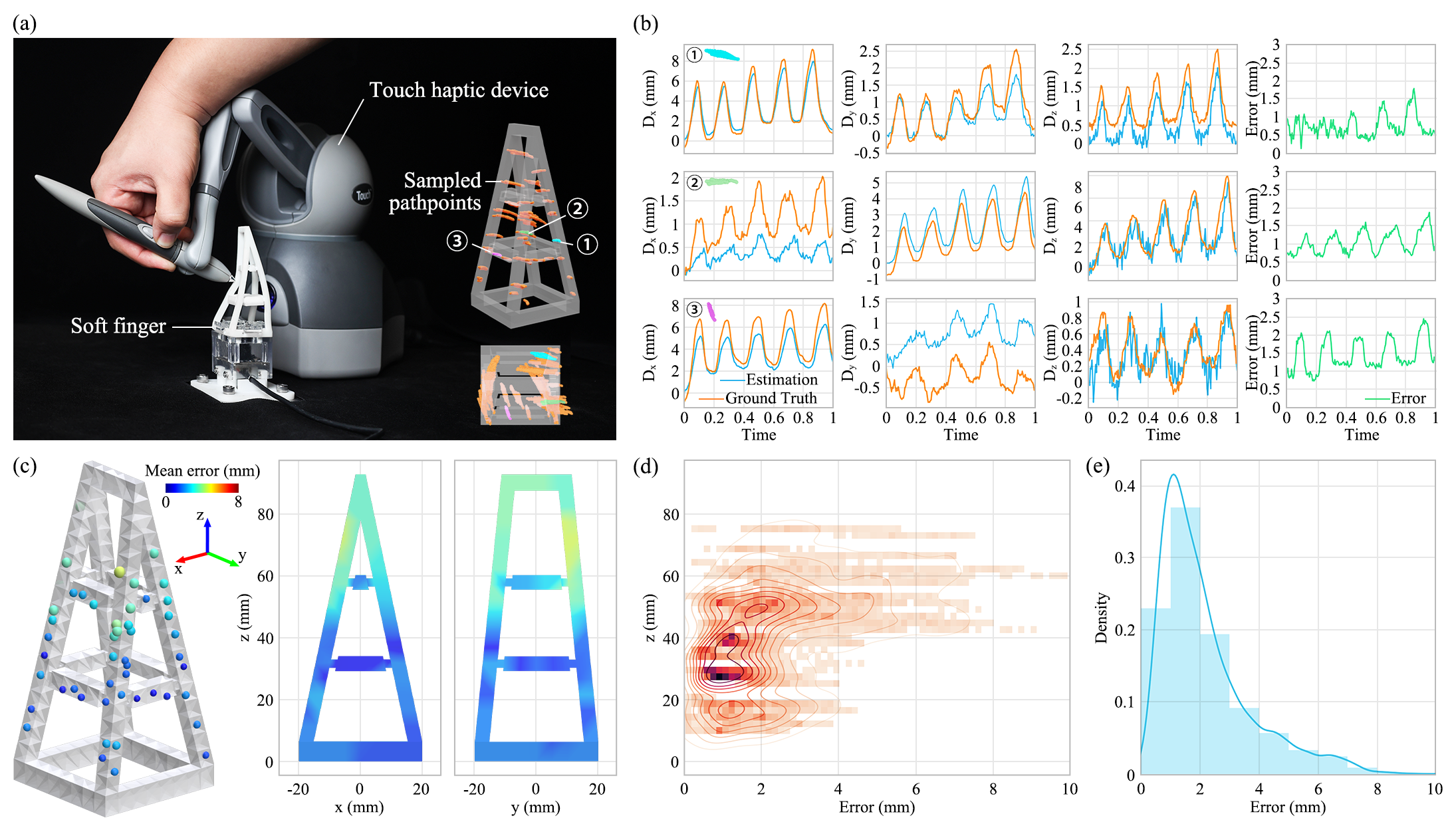

The paper presents a novel vision-based proprioception approach for estimating and reconstructing the tactile interactions of a soft robotic finger in both terrestrial and aquatic environments. The system uses a metamaterial structure and a high-frame-rate camera to capture finger deformation in real time. It then estimates the deformed shape with a volumetric discretized model and geometric constraint optimization, achieving high accuracy and robustness both on land and underwater.
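The idea of recovering a full deformed shape from a discretized model plus a few observed constraints can be illustrated with a deliberately simplified sketch. It is not the authors' implementation: the paper works with a volumetric model of the finger, whereas this toy uses a 1-D chain of identical springs. The function name `relax_chain`, the node count, and the displacement value are all assumptions made for illustration. One end of the chain is fixed, the other end is pinned to an "observed" position, and the interior nodes are relaxed until the elastic energy is minimal.

```python
def relax_chain(n_nodes, fixed_end, observed_end, iterations=5000):
    """Toy 1-D stand-in for a discretized soft body (hypothetical helper).

    Both end nodes are constrained; the interior nodes are found by
    Gauss-Seidel relaxation of the spring energy sum((x[i+1] - x[i])**2).
    """
    # Initial guess: every node sits at the fixed end, except the observed one.
    x = [fixed_end] * n_nodes
    x[-1] = observed_end
    for _ in range(iterations):
        for i in range(1, n_nodes - 1):
            # Setting the energy gradient w.r.t. x[i] to zero gives the
            # average of the two neighbouring nodes.
            x[i] = 0.5 * (x[i - 1] + x[i + 1])
    return x


# Assumed example: 6 nodes, base at 0 mm, tip observed at 10 mm.
shape = relax_chain(n_nodes=6, fixed_end=0.0, observed_end=10.0)
print([round(v, 3) for v in shape])
```

For identical springs the minimum-energy solution spreads the displacement evenly along the chain, which gives a quick sanity check on the result. A real volumetric model would replace the chain with a 3-D mesh and the single pinned node with the constraints extracted from the in-finger camera image.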

Experiments and Results:

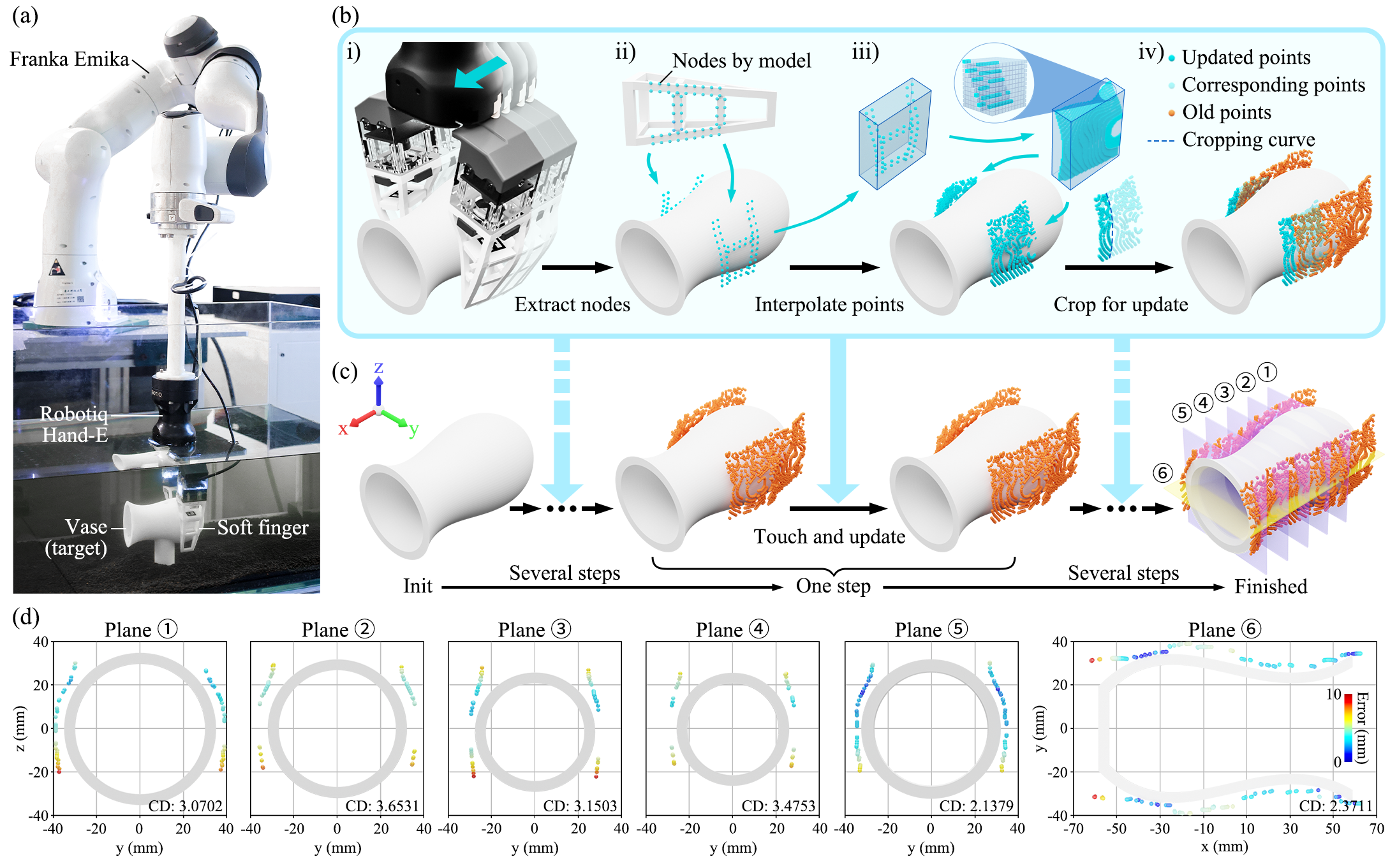

1. Experiment Preparation: The team built soft robotic fingers, integrated high-frame-rate cameras, and used volumetric discretized models to simulate finger deformation.

2. Deformation Estimation:

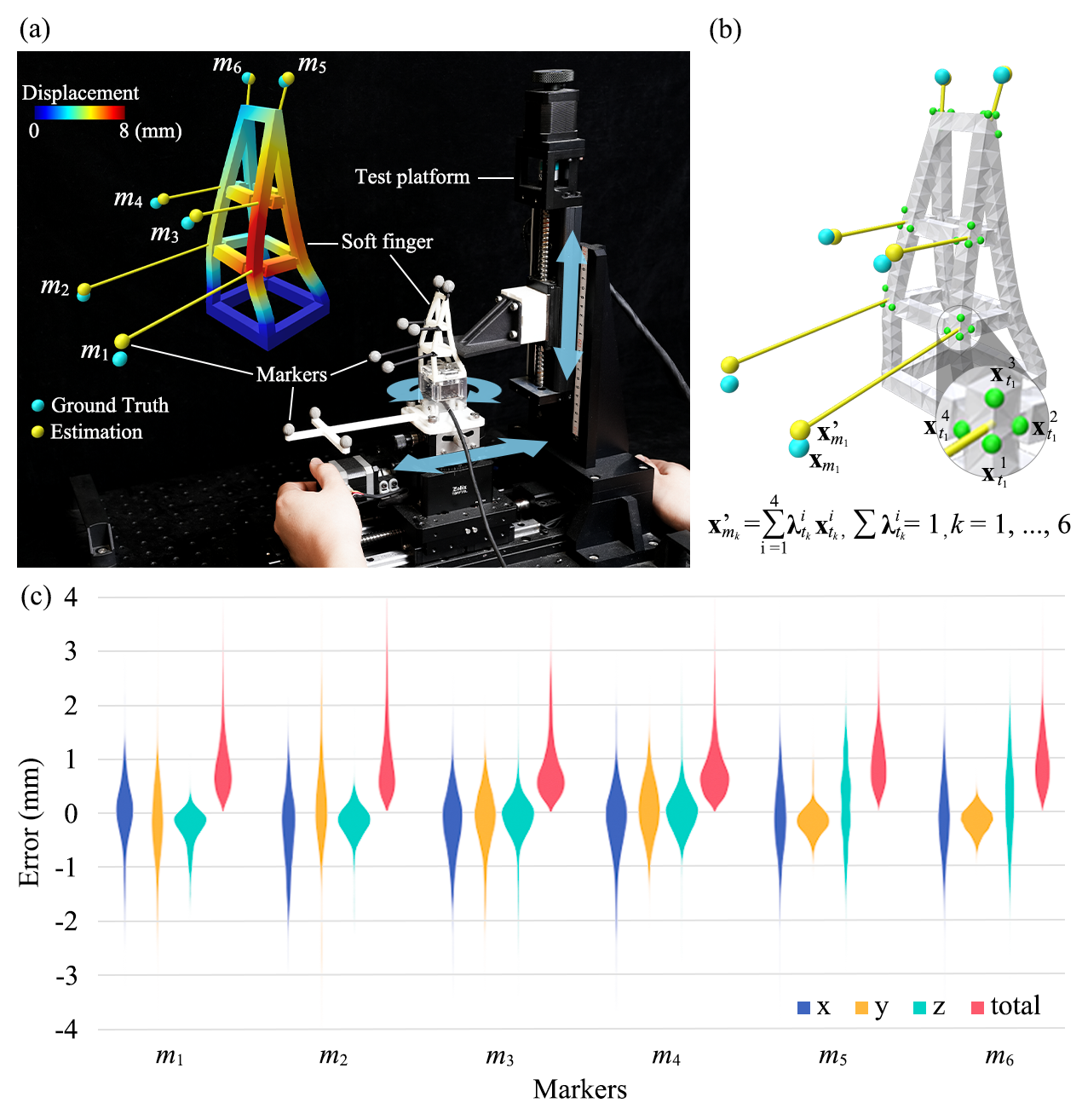

A NOKOV motion capture system and a touch haptic device were used to benchmark the proposed approach. The results show state-of-the-art accuracy, with a median error of 1.96 mm for overall body deformation, corresponding to 2.1% of the finger's length.

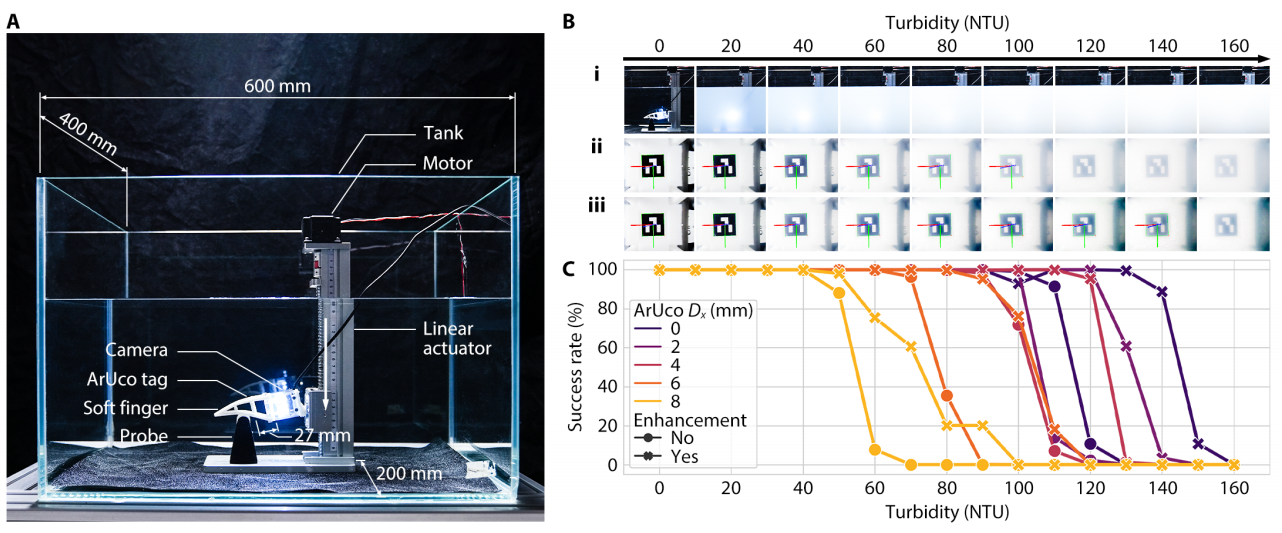

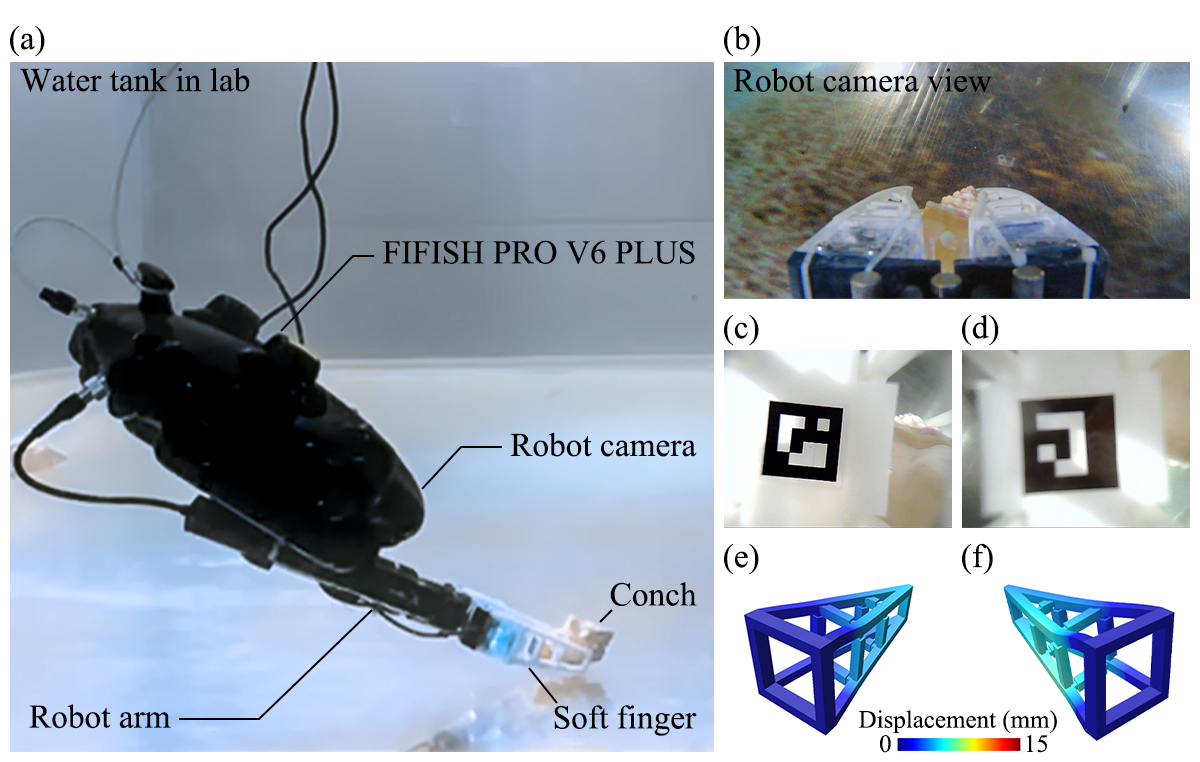

3. Turbidity Benchmarking: The team tested the recognition capability of the vision system under different turbidity conditions. The system achieved a 100% marker recognition success rate in the turbidity range of 0 to 40 NTU, and image enhancement techniques raised the recognition threshold to 100 NTU.

4. Shape Reconstruction: The team conducted underwater object shape reconstruction experiments with the soft fingers, demonstrating that the approach is effective for local surface shape reconstruction.

5. Underwater ROV Grasping Experiment: The soft fingers were installed on underwater remotely operated vehicles (ROVs) to verify the effectiveness of the approach in actual underwater operations.
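The turbidity benchmark in step 3 amounts to finding the highest turbidity level up to which every tested level still gives a 100% marker recognition rate. The sketch below shows one way such a threshold could be read from per-level detection counts. The function `clear_range_limit` is a hypothetical helper, and the counts are placeholders chosen only to mirror the reported 40 NTU and 100 NTU thresholds; they are not measurements from the paper.

```python
def clear_range_limit(trials):
    """Return the highest turbidity (NTU) such that it and every lower
    tested level reached a 100% recognition rate, or None if none did.

    `trials` maps a turbidity level to (detected_frames, total_frames).
    """
    limit = None
    for ntu in sorted(trials):
        detected, total = trials[ntu]
        if total == 0 or detected < total:
            break  # first level with a missed marker ends the clear range
        limit = ntu
    return limit


# Placeholder counts (assumed, NOT data from the paper).
raw_images = {0: (50, 50), 20: (50, 50), 40: (50, 50), 60: (41, 50), 100: (12, 50)}
enhanced_images = {0: (50, 50), 20: (50, 50), 40: (50, 50), 60: (50, 50), 100: (50, 50)}

print(clear_range_limit(raw_images))       # threshold without enhancement
print(clear_range_limit(enhanced_images))  # threshold with enhancement
```

Requiring every lower level to pass as well keeps a single lucky run at high turbidity from inflating the reported threshold.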

The experimental results confirm the accuracy and robustness of the proposed approach and showcase its potential in amphibious environments.

The NOKOV motion capture system helped verify the accuracy of the proposed proprioceptive state estimation approach, with the results showing state-of-the-art accuracy.
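As a rough illustration of how an accuracy figure of this kind can be computed, the sketch below takes the median Euclidean distance between estimated positions and motion-capture ground truth. The marker coordinates and the helper `median_error_mm` are assumptions for illustration, not the paper's data or code. The last lines also work through the reported numbers: a median error of 1.96 mm that equals 2.1% of the finger's length implies a finger length of about 93 mm.

```python
import math
import statistics


def median_error_mm(estimated, ground_truth):
    """Median Euclidean distance (mm) between paired 3-D points."""
    errors = [math.dist(e, g) for e, g in zip(estimated, ground_truth)]
    return statistics.median(errors)


# Hypothetical marker positions in millimetres (not from the paper).
ground_truth = [(0.0, 0.0, 10.0), (0.0, 0.0, 40.0), (0.0, 0.0, 70.0)]
estimated = [(1.0, 0.0, 10.0), (0.0, 2.0, 40.0), (0.0, 0.0, 73.0)]

median_err = median_error_mm(estimated, ground_truth)
print(f"median error: {median_err:.2f} mm")

# Worked arithmetic on the reported figures: 1.96 mm is 2.1% of the length.
implied_length_mm = 1.96 / 0.021
print(f"implied finger length: {implied_length_mm:.1f} mm")
```

The median is less sensitive than the mean to a few frames with large tracking errors, which is one reason it is a common summary statistic for this kind of benchmark.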

Authors' Information

Ning Guo is currently pursuing his Ph.D. degree with the Department of Mechanical and Energy Engineering, Southern University of Science and Technology, Shenzhen, China. His research interests include soft robotics and robot learning.

Xudong Han is currently pursuing his Ph.D. degree with the Department of Mechanical and Energy Engineering, Southern University of Science and Technology, Shenzhen, China. His research interests include soft robotics and robot learning.

Shuqiao Zhong is currently pursuing his Ph.D. degree with the Department of Ocean Science and Engineering, Southern University of Science and Technology, Shenzhen, China. His research interests include underwater grasping and gripper design.

Zhiyuan Zhou is a research associate professor at the Department of Ocean Science and Engineering at SUSTech. His research interests include marine geophysics and ocean artificial intelligence.

Jian Lin is currently a Chair Professor with the Department of Ocean Science and Engineering at SUSTech. His research interests include marine geophysics and ocean technology.

Jian S. Dai is a Chair Professor with the Department of Mechanical and Energy Engineering and the director of the SUSTech Institute of Robotics, Southern University of Science and Technology, Shenzhen, China. His research interests include theoretical and computational kinematics, reconfigurable mechanisms, dexterous manipulators, end effectors, and multi-fingered hands. Prof. Dai is a Fellow of the Royal Academy of Engineering, the American Society of Mechanical Engineers (ASME), and the Institution of Mechanical Engineers. He received the Mechanisms and Robotics Award, given annually by the ASME Mechanisms and Robotics Committee to engineers known for a lifelong contribution to the mechanism design or theory field, as the 27th recipient since 1974. He also received the 2020 ASME Machine Design Award, the 58th recipient since the Award was established in 1958. He was the recipient of several journal and conference best paper awards. He is the Editor-in-Chief for Robotica, an Associate Editor for the ASME Transactions: Journal of Mechanisms and Robotics, and a Subject Editor for Mechanism and Machine Theory.

Fang Wan is currently an Assistant Professor at the School of Design at Southern University of Science and Technology, Shenzhen, China. Her research lies at the intersection of applied mathematical tools and data-driven design technologies.

Chaoyang Song is currently an Assistant Professor at the Department of Mechanical and Energy Engineering and an Adjunct Assistant Professor at the Department of Computer Science and Engineering at Southern University of Science and Technology, Shenzhen, China. His research interests include the design science of bionic robotics and robot learning.