A paper titled “RMSC-VIO: Robust Multi-Stereoscopic Visual-Inertial Odometry for Local Visually Challenging Scenarios,” authored by Professor Zhang Tong from the Institute of Unmanned Systems Technology at Northwestern Polytechnical University, has been accepted by IEEE Robotics and Automation Letters (RA-L).

Citation: T. Zhang, J. Xu, H. Shen, R. Yang and T. Yang, "RMSC-VIO: Robust Multi-Stereoscopic Visual-Inertial Odometry for Local Visually Challenging Scenarios," in IEEE Robotics and Automation Letters, vol. 9, no. 5, pp. 4130-4137, May 2024, doi: 10.1109/LRA.2024.3377008.

Accurate self-positioning is fundamental for robots to achieve autonomy. While some visual-inertial odometry (VIO) algorithms have already achieved high precision and stable state estimation on publicly available datasets, their capabilities are limited in visually challenging environments due to reliance on a single monocular or stereo camera. Meanwhile, introducing additional sensors or using multi-camera VIO algorithms significantly increases computational demands.

Summary of the study

1. A multi-stereoscopic VIO system is proposed, capable of integrating multiple stereo cameras and exhibiting excellent robustness in visually challenging scenarios.

2. An adaptive feature selection method is proposed, which iteratively updates the state information of visual features, filters high-quality image feature points, and reduces the computational burden of multi-camera systems.

3. An adaptive tightly coupled optimization method is proposed, which assigns optimization weights according to the quality of each image feature point, improving the positioning accuracy of the system.

4. Extensive experimental evaluations were performed in a variety of challenging scenarios to verify the robustness and effectiveness of the method. The datasets used in these experiments have been publicly released by Professor Zhang’s team for future research and development.

System introduction

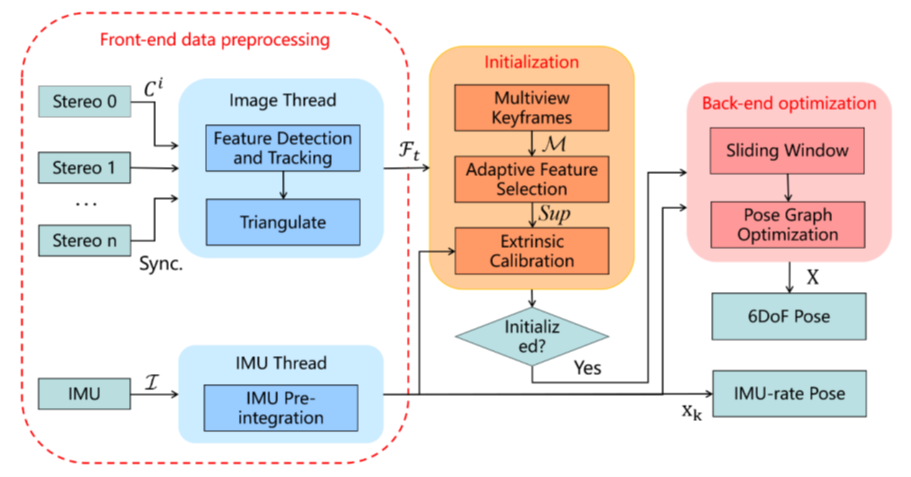

Fig. 1: Flow chart of the RMSC-VIO system framework

Front-end feature processing

In the multi-stereo VIO system, feature points are extracted and matched from each stereo camera’s image to obtain environmental information in different directions for the robot.

Initialization

The study introduces the concept of multi-view keyframes (MKF) and proposes an adaptive feature selection method (AFS):

1) Multi-view keyframes are selected based on the parallax relative to previous frames and on the quality of feature point tracking.

2) When visual information in a particular direction is not available, AFS selects an accessible set of alternative feature points to initialize the pose of the multi-view keyframe. Conversely, in scenarios where all visual information is accessible, AFS strategically selects a set of high-quality feature points for subsequent back-end optimization processing. Algorithm 1 provides the pseudocode for the proposed AFS algorithm.

3) To simplify the calibration of multiple cameras, an online extrinsic calibration method for multi-camera systems is adopted.
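The keyframe test and feature selection described in steps 1) and 2) can be illustrated with a small sketch. This is not the paper's Algorithm 1. The `Feature` fields, the `quality` score, the thresholds, and the function names are all assumptions made for illustration. The sketch only shows the general idea: declare a keyframe when parallax is large or tracking has degraded, then keep the best-scoring points across all cameras, so that a camera with no usable features contributes nothing and the budget is filled from the others.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    cam_id: int        # index of the stereo camera that observed the point
    track_len: int     # number of consecutive frames the point was tracked
    reproj_err: float  # reprojection error in pixels


def is_keyframe(mean_parallax_px, tracked_count,
                min_parallax_px=10.0, min_tracked=50):
    """Hypothetical rule: enough parallax, or tracking quality has dropped."""
    return mean_parallax_px >= min_parallax_px or tracked_count < min_tracked


def quality(f):
    """Hypothetical score: long tracks with small reprojection error rank higher."""
    return f.track_len / (1.0 + f.reproj_err)


def select_features(features, budget, min_track_len=2):
    """Keep up to `budget` usable features from all cameras, best score first."""
    usable = [f for f in features if f.track_len >= min_track_len]
    return sorted(usable, key=quality, reverse=True)[:budget]


# Made-up observations: camera 1 is assumed to be mostly occluded.
feats = [
    Feature(cam_id=0, track_len=8, reproj_err=0.5),
    Feature(cam_id=0, track_len=3, reproj_err=2.0),
    Feature(cam_id=1, track_len=1, reproj_err=9.0),
    Feature(cam_id=2, track_len=6, reproj_err=1.0),
]
selected = select_features(feats, budget=2)
print([f.cam_id for f in selected])
```

With these made-up values, the short-lived point from the occluded camera is filtered out and the two selected points come from the cameras that still see the scene well.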

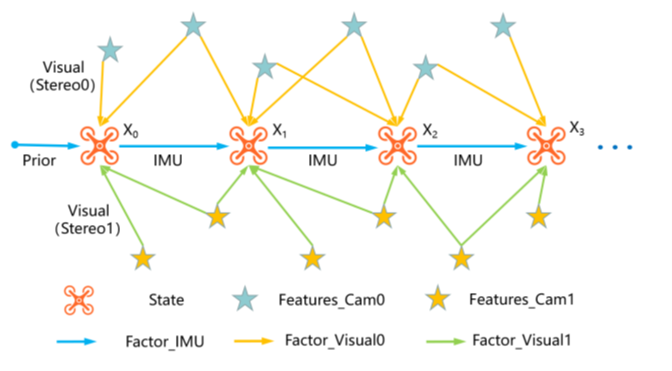

Tightly coupled multi-stereo VIO

The best feature point sets from all cameras, referred to as the “Sup” set, are integrated into the back end for joint optimization. This fusion method avoids complex and redundant VIO fusion calculations, reduces inconsistencies between multiple VIO results, and simultaneously integrates visual information from different angles into the optimization process.

Fig. 2: Structure of the sliding-window factor graph
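The effect of quality-based weighting in a joint optimization can be shown with a one-dimensional toy problem. The sketch below is an assumption-laden illustration, not the cost function used in RMSC-VIO: it estimates a single scalar from several measurements by weighted least squares, with hypothetical weights proportional to a per-feature quality score. The names `quality_weights`, `weighted_cost`, and `weighted_estimate` are invented for this example.

```python
def quality_weights(qualities):
    """Normalize hypothetical quality scores so that the weights sum to 1."""
    total = sum(qualities)
    if total <= 0:
        raise ValueError("at least one quality score must be positive")
    return [q / total for q in qualities]


def weighted_cost(x, measurements, weights):
    """Weighted sum of squared residuals for a candidate estimate x."""
    return sum(w * (z - x) ** 2 for z, w in zip(measurements, weights))


def weighted_estimate(measurements, weights):
    """Closed-form minimizer of weighted_cost: the weighted mean."""
    return sum(w * z for z, w in zip(measurements, weights)) / sum(weights)


# Made-up data: the third measurement comes from a low-quality feature.
measurements = [1.0, 1.0, 3.0]
weights = quality_weights([4.0, 4.0, 2.0])

x_weighted = weighted_estimate(measurements, weights)
x_uniform = sum(measurements) / len(measurements)
print(x_weighted, x_uniform)
```

Because the low-quality measurement receives a smaller weight, the weighted estimate stays closer to the two consistent measurements than the plain average does.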

Multi-stereo visual loop closure

Multi-view loop closure detection is used to reduce drift in most sequences.

The research team collected data from three indoor and four outdoor scenarios. For indoor experiments, the NOKOV motion capture system was used to obtain ground-truth positions with sub-millimeter accuracy. For outdoor sequences, Real-Time Kinematic (RTK) positioning provided ground truth with centimeter-level accuracy.

By conducting comparative analyses with state-of-the-art algorithms (ORB-SLAM3, VINS-Fusion, MCVIO), ablation experiments, and drone verification, the system's effectiveness and robustness in challenging visual environments were validated.

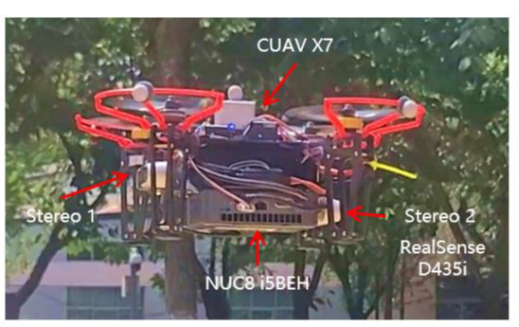

Fig. 3: Quadcopter UAV used in the experiment

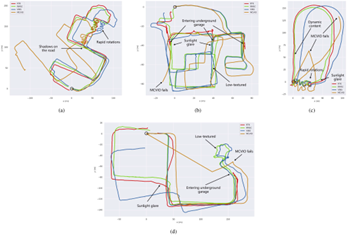

1. In qualitative analysis, the RMSC-VIO algorithm proposed by this study demonstrates excellent trajectory performance on all datasets.

Fig. 4: Top view of four dataset sequences comparing the estimated trajectories of VINS-Fusion, MCVIO, and RMSC-VIO to the ground truth. A black circle indicates the starting point of each trajectory.

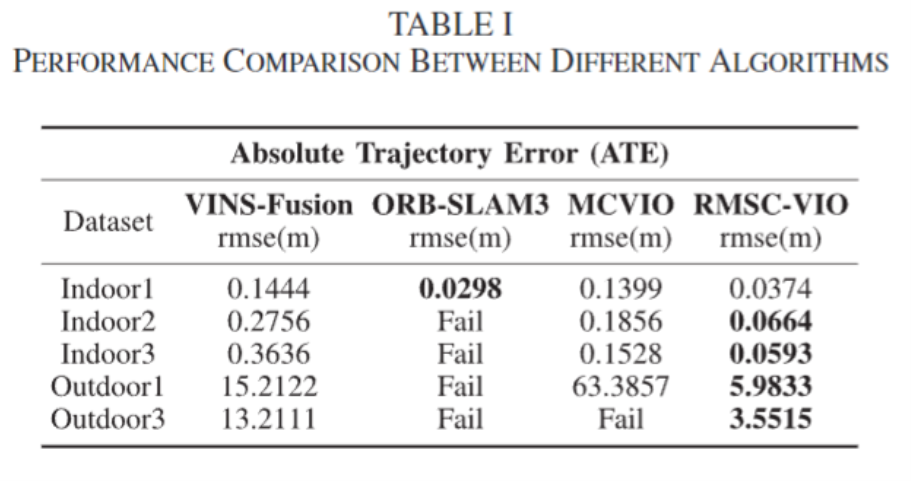

2. In quantitative analysis, RMSC-VIO reduced the root mean square error (RMSE) of the absolute trajectory error (ATE) by 60% to 80% compared to VINS-Fusion. It was also more effective than MCVIO, reducing ATE RMSE by 60% to 90%.

Table 1: Absolute trajectory error (ATE) of different algorithms relative to ground-truth position data

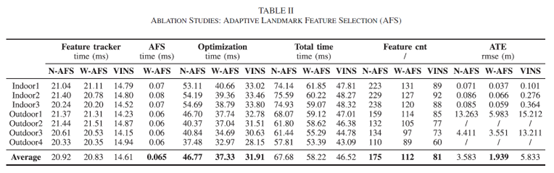
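For readers unfamiliar with the metric, the following minimal sketch shows how an ATE RMSE and a relative reduction are typically computed. It assumes the estimated and ground-truth trajectories are already time-associated and aligned in the same frame (the alignment step is omitted), and the positions and error values are made up for illustration rather than taken from Table 1. The function names are hypothetical.

```python
import math


def ate_rmse(estimated, ground_truth):
    """RMSE of position errors between two aligned, equally long trajectories."""
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(p_est, p_gt))
        for p_est, p_gt in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def relative_reduction(baseline_rmse, proposed_rmse):
    """Fraction by which the proposed method lowers the baseline error."""
    return (baseline_rmse - proposed_rmse) / baseline_rmse


# Made-up 3D positions in meters.
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
est = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
rmse = ate_rmse(est, gt)

# A baseline error of 0.5 m lowered to 0.1 m corresponds to an 80% reduction.
reduction = relative_reduction(0.5, 0.1)
print(rmse, reduction)
```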

3. The effectiveness of the AFS method was assessed by comparing its computational cost and its impact on positioning accuracy throughout the VIO process. The results showed that the AFS method achieves high positioning accuracy with relatively low computational demands.

Table 2: Summary of the performance of N-AFS (RMSC-VIO without AFS), W-AFS (RMSC-VIO with AFS), and VINS-Fusion on seven datasets

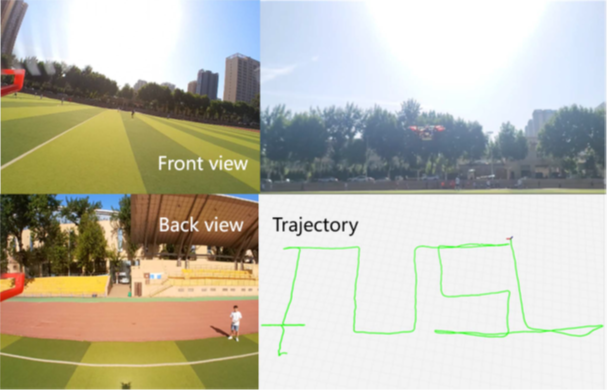

4. Real flight experiments were conducted by deploying the proposed algorithm on a quadcopter to demonstrate its practicality and effectiveness in local visually challenging scenarios.

Fig. 5: Real flight tests of the proposed algorithm deployed on a quadcopter

In this study, the three indoor scenarios were captured using the NOKOV metric motion capture system. Reflective markers were attached to the fuselage of the quadcopter UAV to obtain ground-truth position data with sub-millimeter accuracy.

Zhang Tong: Associate Researcher at the Institute of Unmanned Systems Technology, Northwestern Polytechnical University, and at the Key Laboratory of Intelligent Unmanned Aerial Vehicles, Northwestern Polytechnical University. He is a master's supervisor with primary research interests in autonomous perception and collaborative planning technologies for unmanned systems.

Xu Jianyu: Master's student at the Institute of Unmanned Systems Technology and the Key Laboratory of Intelligent Unmanned Aerial Vehicles, Northwestern Polytechnical University. His main research focus is autonomous positioning and visual SLAM for unmanned systems.

Shen Hao: Master's student at the Institute of Unmanned Systems Technology and the Key Laboratory of Intelligent Unmanned Aerial Vehicles, Northwestern Polytechnical University. His research interests include collaborative planning technologies for unmanned systems.

Yang Tao: Associate Professor at the Institute of Unmanned Systems Technology, Northwestern Polytechnical University, and at the Key Laboratory of Intelligent Unmanned Aerial Vehicles, Northwestern Polytechnical University. He is a master's supervisor with primary research interests in multi-source fusion perception and navigation.