A significant challenge lies in how cable-driven parallel robots navigate and avoid dynamic obstacles in confined spaces.

A research team from Harbin Institute of Technology (Shenzhen) conducted an interesting study, with the paper titled “Dynamic Obstacle Avoidance for Cable-Driven Parallel Robots with Mobile Bases via Sim-to-Real Reinforcement Learning” published in the SCI&EI-indexed journal IEEE Robotics and Automation Letters.

Research team will present the related research results at the 2024 International Conference on Intelligent Robots and Systems (ICRA).

Figure 1: Research abstract.

Research Background

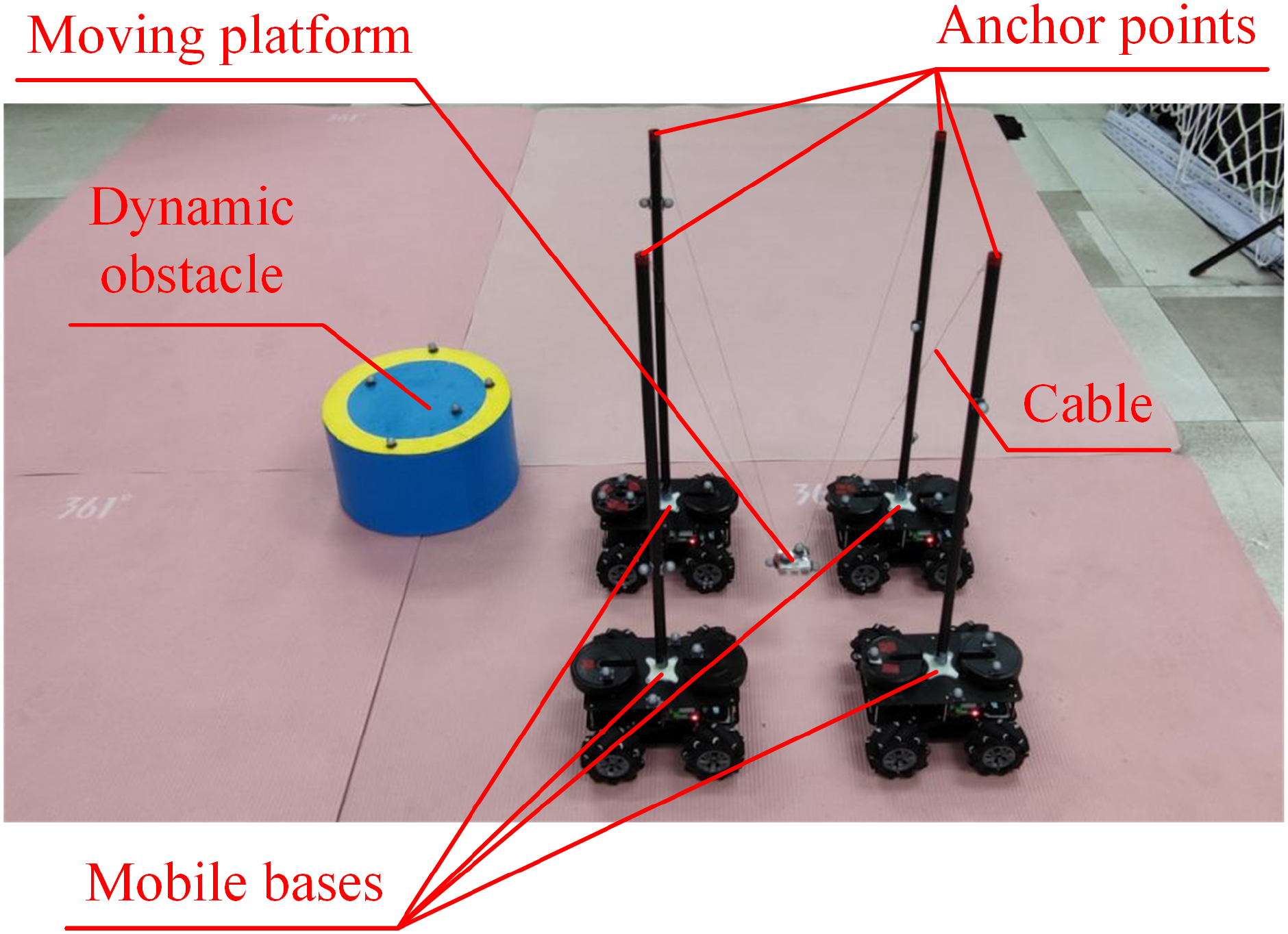

Cable-Driven Parallel Robots (CDPRs) are a new type of parallel robot that uses cables instead of rigid links to control the position of an end effector.

These robots have simple structures, low inertia, large working areas, and good dynamic performance. They are highly suitable for applications in equipment manufacturing, medical rehabilitation, aerospace, and other fields. Due to their ability to change geometric configurations, they are particularly well-suited for tasks in constrained environments.

Figure 2: Rope traction parallel robot with 4 moving bases.

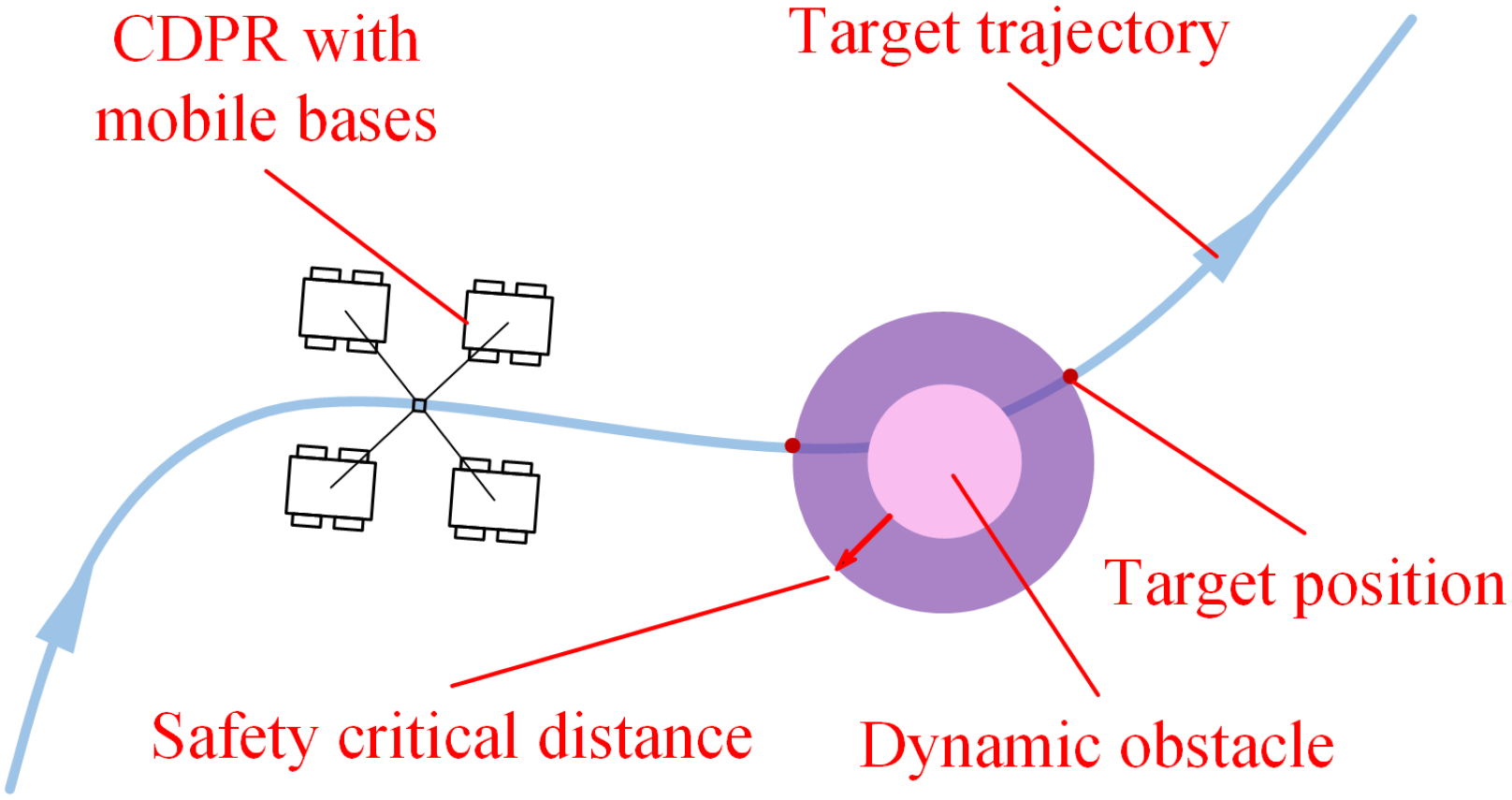

In constrained environments, CDPRs may encounter dynamic obstacles that are not considered by trajectory planning methods, necessitating real-time avoidance actions to bypass or traverse obstacles. Due to the high-dimensional state space and constraints arising from multiple cables and a mobile base, this is a challenging task.

This research addresses this issue by proposing an algorithm capable of enabling CDPRs to dynamically evade obstacles, avoiding collisions and returning to the target trajectory as needed.

Figure 3: The CDPR may encounter dynamic obstacles while planning its trajectory.

Obstacle Avoidance Algorithm

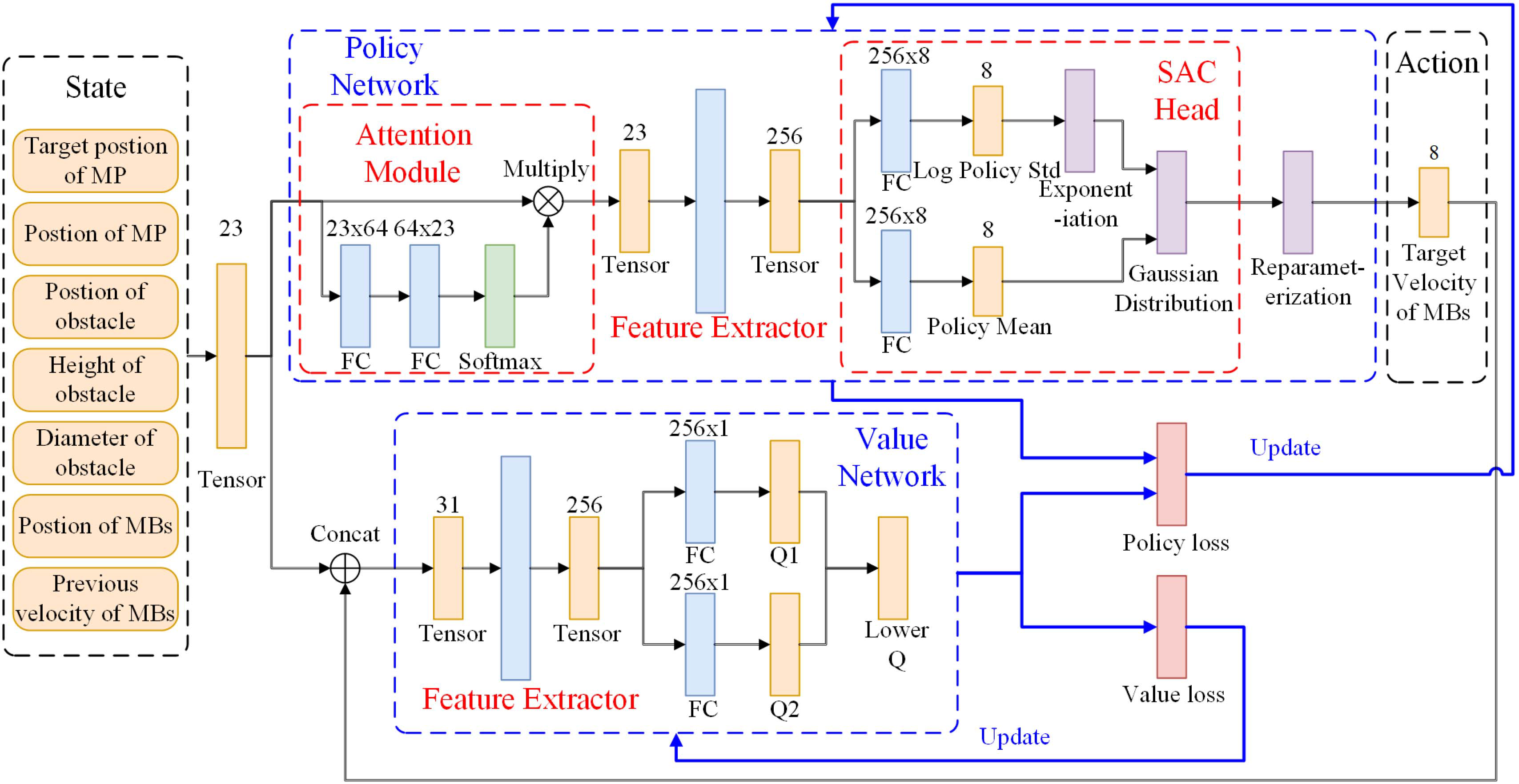

The study proposes an obstacle avoidance controller (OAC) based on reinforcement learning (RL) and integrates it into the trajectory tracking controller (TTC). The OAC design is based on the Soft Actor Critic (SAC) algorithm and an attention module to address real-time obstacle avoidance problems for CPDRs with fixed-length cables connected to a moving base.

This method can handle multiple constraints and high-dimensional state spaces of CDPRs, achieving dynamic obstacle avoidance in real-time dynamic obstacle environments.

Figure 4: Obstacle avoidance controller based on SAC algorithm.

The RL-based OAC was trained in the Mujoco simulator using two training strategies: two-stage training and single-stage training.

For the two-stage training strategy, the OAC converges within 50,000 cycles, with a training time of approximately 35 minutes. With the single-stage training strategy, the OAC converges within 500,000 cycles, taking about 5.5 hours for training. Both OACs ultimately achieve nearly identical results. The research indicates that the two-stage training strategy, which utilizes reward shaping techniques, can expedite the training of the OAC.

Real-world experiments

A trained RL-based OAC algorithm was tested in a real-world environment.

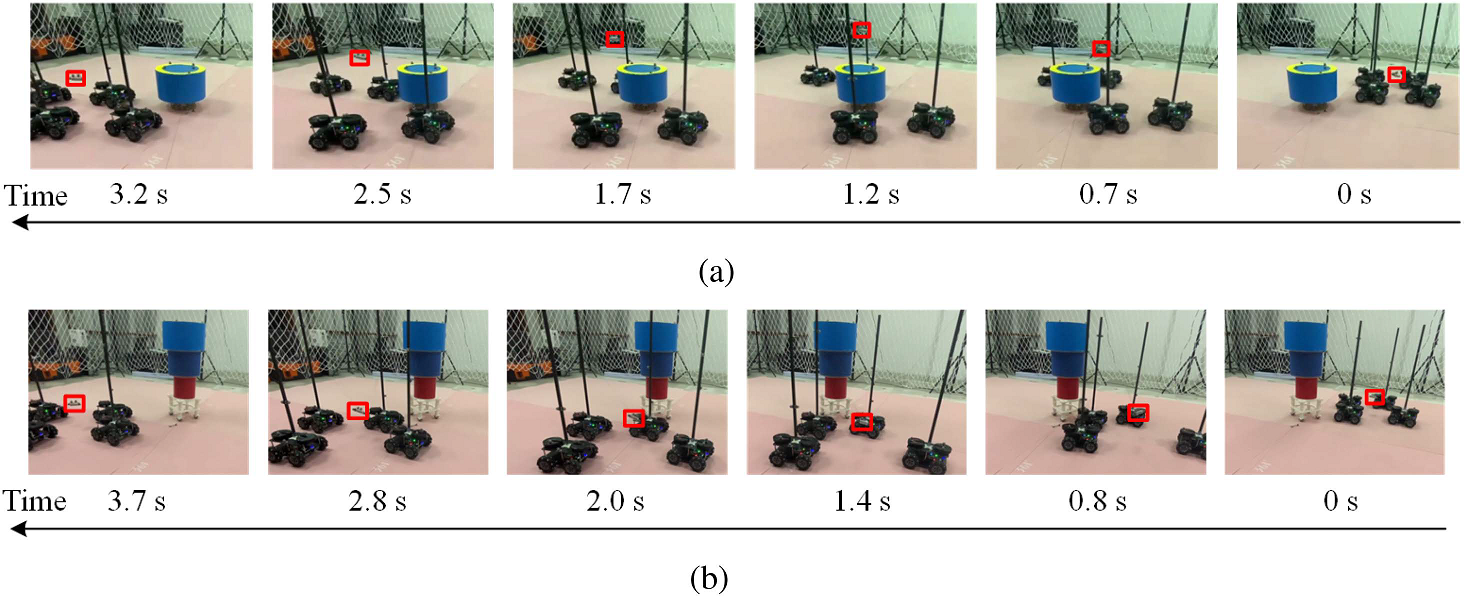

The experimental setup consisted of a cable-driven parallel robot (CDPR) with four mobile bases connected by four fixed-length cables. Two types of obstacles were used: low obstacles with a height of 0.32 meters and high obstacles with a height of 0.92 meters.

The mobile platform of the CDPR was able to pass over shorter obstacles but was forced to navigate around taller obstacles to avoid collisions.

During the experiment, the NOKOV motion capture system was deployed to capture the position and orientation of the cables, the mobile bases, and the dynamic obstacles in real time.

Figure 5: Evasive maneuvers performed by the CPDR when encountering obstacles of different heights.

The RL-based OAC method successfully guided the CDPR to employ different avoidance strategies to navigate over or around obstacles of different heights.

Bibliography: