Unmanned vehicles are intelligent autonomous vehicles that can perform path planning and environment perception, and they have become a popular focus of intelligent vehicle research. They identify their surroundings and their own state through onboard sensors, then perform navigation and positioning calculations to plan paths toward specific targets.

Under varying conditions, a single navigation sensor cannot provide data accurate enough for high-precision positioning and navigation. For this reason, unmanned vehicles carry a variety of sensors. Currently, the most commonly used are inertial measurement units (IMUs), ultra-wideband (UWB) positioning modules, and wheel odometers.

To make unmanned vehicle systems more adaptive and reliable, researchers at the Harbin Institute of Technology studied a multi-sensor navigation system, aiming to handle asynchronous data acquisition and sensor failure. They abstracted each sensor's information into factors and used a factor graph model to build the multi-sensor framework, then applied the incremental smoothing and mapping (iSAM2) optimization algorithm, which is based on the Bayes tree, to process and synchronize the sensor information. According to the study, this method achieves accuracy close to that of the least-squares method while retaining computational efficiency, which can greatly improve the robustness and reliability of the navigation system.
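The factor idea can be illustrated with a minimal sketch. It assumes a 1-D vehicle, linear measurements, and a plain batch weighted least-squares solve; it is not the iSAM2 algorithm and not the researchers' implementation. The names `Factor` and `solve_factor_graph` are hypothetical. Odometry appears as a factor between consecutive states, UWB as a factor on a single state, and a missing UWB reading simply means that factor is not added.

```python
from dataclasses import dataclass


@dataclass
class Factor:
    """Linear 1-D factor: sum(coeff * x[index]) should equal `measurement`."""
    terms: dict          # state index -> coefficient
    measurement: float
    sigma: float         # assumed measurement standard deviation


def solve_factor_graph(num_states, factors):
    """Batch weighted least squares over all factors (normal equations)."""
    n = num_states
    H = [[0.0] * n for _ in range(n)]   # information matrix A^T W A
    g = [0.0] * n                       # information vector A^T W b
    for f in factors:
        w = 1.0 / (f.sigma ** 2)
        for i, ci in f.terms.items():
            g[i] += w * ci * f.measurement
            for j, cj in f.terms.items():
                H[i][j] += w * ci * cj

    # Solve H x = g by Gaussian elimination with partial pivoting.
    M = [row[:] + [g[i]] for i, row in enumerate(H)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            ratio = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= ratio * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


if __name__ == "__main__":
    # Illustrative numbers only: five states, one prior, four odometry steps,
    # and UWB fixes at states 2 and 4 (the other UWB readings are "missing").
    factors = [Factor({0: 1.0}, 0.0, 0.01)]
    for k in range(4):
        factors.append(Factor({k: -1.0, k + 1: 1.0}, 1.0, 0.1))
    factors.append(Factor({2: 1.0}, 2.1, 0.2))
    factors.append(Factor({4: 1.0}, 3.9, 0.2))
    print(solve_factor_graph(5, factors))
```

Because every measurement is just another factor, a sensor that drops out or reports at a different rate changes only which factors are present, not the structure of the solver. An incremental method such as iSAM2 avoids re-solving the whole problem each time a factor is added.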

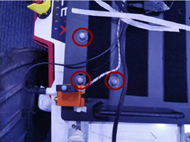

The study focused on integrating IMU, UWB, and odometer data in an indoor environment, with the unmanned vehicle moving at a relatively low speed. To verify the performance of the navigation algorithm, the researchers built a multi-sensor platform: a Scout 2.0 mobile robot equipped with an MTi-G-700 (IMU), a LinkTrack S (UWB), and an odometer (ODOM) as its onboard sensors. The platform ran Ubuntu and used the Robot Operating System (ROS) for synchronized data acquisition.
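As a rough illustration of what synchronized acquisition involves, the sketch below pairs samples from sensors running at different rates by nearest timestamp. It is plain Python rather than ROS code, and the function names, the tolerance, and the sample values are assumptions made for the example; the source does not describe how the platform's synchronization was configured.

```python
from bisect import bisect_left


def nearest_within(timestamps, t, tolerance):
    """Index of the sorted timestamp closest to t, or None if none is within tolerance."""
    if not timestamps:
        return None
    pos = bisect_left(timestamps, t)
    candidates = [i for i in (pos - 1, pos) if 0 <= i < len(timestamps)]
    best = min(candidates, key=lambda i: abs(timestamps[i] - t))
    return best if abs(timestamps[best] - t) <= tolerance else None


def align_streams(reference, others, tolerance):
    """Attach to each reference sample the nearest sample of every other stream.

    reference: list of (timestamp, value), sorted by timestamp.
    others:    dict of stream name -> list of (timestamp, value), sorted by timestamp.
    A stream with no sample inside the tolerance contributes None for that row.
    """
    times = {name: [t for t, _ in samples] for name, samples in others.items()}
    aligned = []
    for t, value in reference:
        row = {"t": t, "ref": value}
        for name, samples in others.items():
            idx = nearest_within(times[name], t, tolerance)
            row[name] = samples[idx][1] if idx is not None else None
        aligned.append(row)
    return aligned


if __name__ == "__main__":
    # Hypothetical samples: UWB as the reference stream, odometry with a gap.
    uwb = [(0.0, "u0"), (0.1, "u1"), (0.2, "u2")]
    odom = [(0.01, "o0"), (0.19, "o1")]
    for row in align_streams(uwb, {"odom": odom}, tolerance=0.02):
        print(row)
```

Rows where a stream yields `None` correspond to moments when that sensor has no usable sample, which is the situation a fusion back end has to tolerate.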

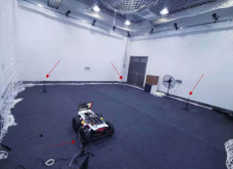

Figure 1 UWB base station layout

Figure 2 IMU and UWB mounting locations

To obtain the actual position of the platform, the researchers used the NOKOV motion capture system. Three reflective markers were attached to the platform and tracked by 16 Mars motion capture cameras placed above the 5 m × 5 m test field. Because the positioning accuracy of the NOKOV motion capture system reaches the sub-millimeter level, it provided reliable ground truth for the platform's position and movement trajectory.

Figure 3 NOKOV motion capture system

Figure 4 Reflective marker placement

To analyze the performance of the navigation system, the experiment compared the fused IMU+UWB+ODOM results with the ground-truth values recorded by the motion capture system, and then compared the fused results with those obtained from a single sensor.
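One common way to make such a comparison is the root-mean-square error (RMSE) between an estimated trajectory and the ground-truth trajectory. The sketch below assumes 2-D positions that are already time-aligned and expressed in the same frame; the source does not state which error metric the study used, and `position_rmse` is a hypothetical helper.

```python
import math


def position_rmse(estimated, ground_truth):
    """RMSE of the Euclidean position error between two equal-length 2-D tracks."""
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("tracks must be non-empty and of equal length")
    squared_errors = [
        (ex - gx) ** 2 + (ey - gy) ** 2
        for (ex, ey), (gx, gy) in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


if __name__ == "__main__":
    # Made-up tracks for illustration only, not data from the study.
    truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    fused = [(0.0, 0.1), (1.0, -0.1), (2.0, 0.1)]
    print(position_rmse(fused, truth))
```

Computing the same metric for the fused estimate and for each single-sensor estimate against the same ground truth gives directly comparable numbers.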

The researchers concluded that each single sensor has its own limitations, while the multi-sensor algorithm performs markedly better than a single-sensor system. They also analyzed the efficiency and robustness of the multi-sensor system, showing that the method greatly improved the navigation system's computational efficiency and robustness, and that the system could obtain more accurate positioning information when a sensor failed.

Bibliography:

Shen Hebing. Research on multi-source sensor information fusion navigation technology of unmanned vehicle [D]. Harbin Institute of Technology, 2021. DOI: 10.27061/d.cnki.ghgdu.2021.004020.