RSS 2024 Oral Presentation: Date: July 17; Location: Delft, the Netherlands; Paper title: Event-based Visual Inertial Velometer; Authors: Lu Xiuyuan*, Zhou Yi*, Niu Junkai, Zhong Sheng, Shen Shaojie (*co-first authors).

Professor Zhou Yi’s Interview

This paper proposes a map-free visual-inertial velometer that combines event cameras with an inertial measurement unit (IMU). By fusing these heterogeneous measurements, it estimates the linear velocity of a UAV in real time. Because it does not depend on a map, the method avoids the camera-tracking failures that occur during aggressive self-motion. In the real-world experiments, the NOKOV motion capture system tracked the pose of the event camera in real time, providing the ground truth used to validate the accuracy of the estimated linear velocity.

Background Information

Neuromorphic event cameras, whose pixels respond asynchronously and with high temporal resolution, are well suited to state estimation during high-speed motion. However, existing event-based visual odometry systems can lose track of the camera because their local maps are updated too slowly. The core difficulty is the lack of an effective data-association method that does not rely on assumptions about the environment. Because the events a camera produces depend on its own motion, this problem is hard to solve with conventional vision techniques.

Research Overview

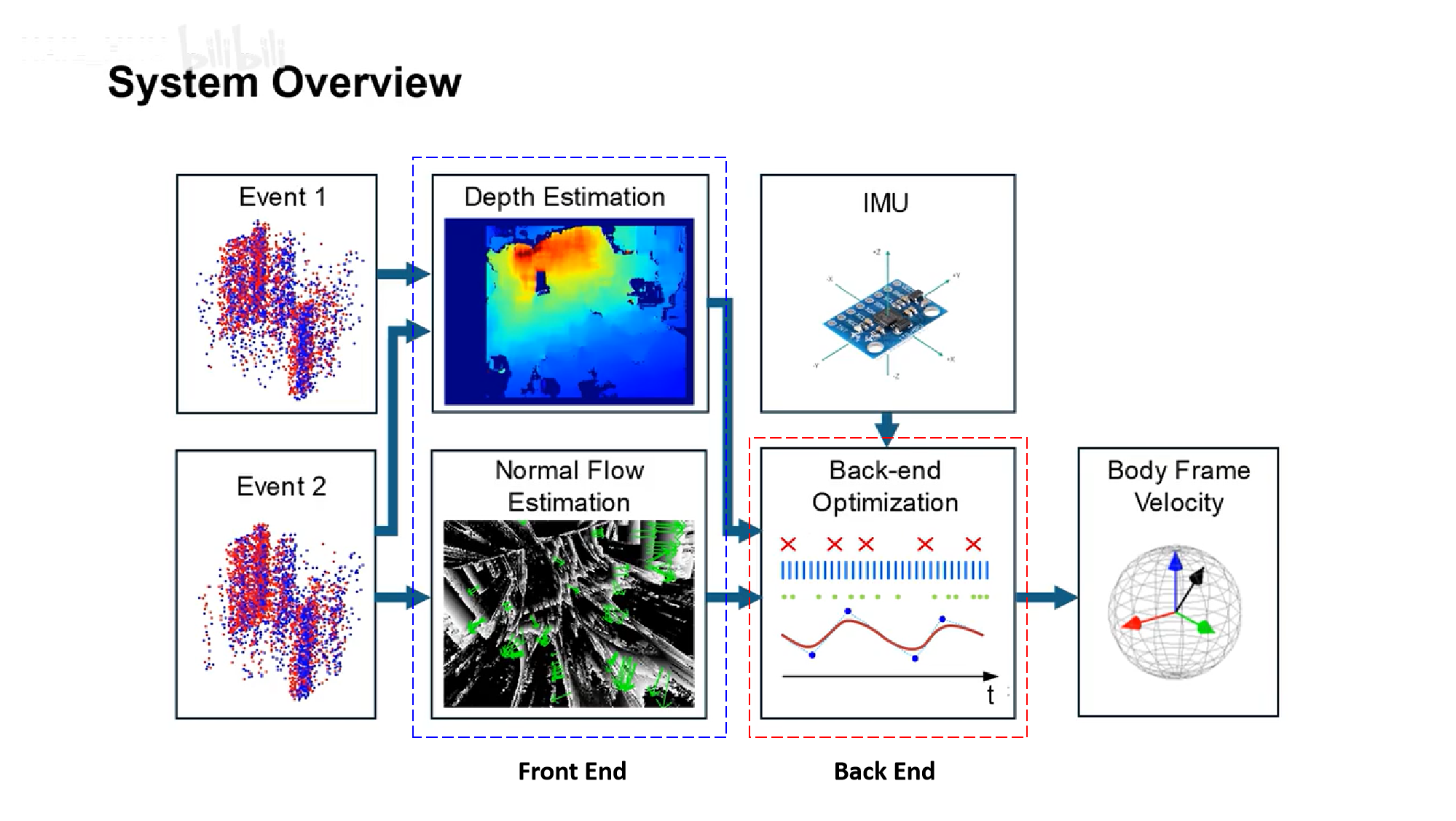

Workflow of the event-based visual-inertial velometer proposed in this paper.

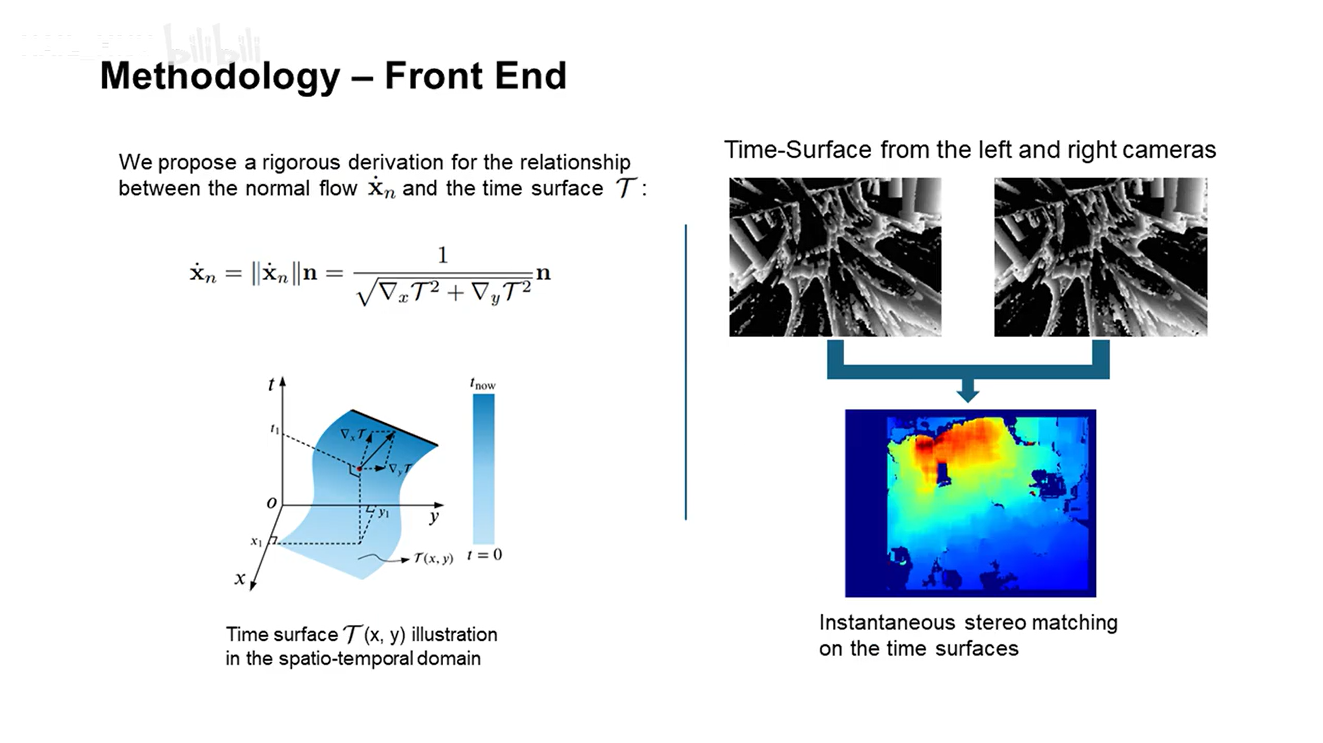

1. The front end computes normal flow and depth from the input events separately. Normal flow is derived rigorously from the spatio-temporal gradients of the event data, and depth is obtained with an instantaneous stereo matching method.

2. The back end is designed around the asynchronous, high-rate nature of event data. It estimates linear velocity in continuous time, which lets it process asynchronous event measurements and associate them with accelerometer measurements that are not aligned with them in time.
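To make the two stages concrete, here is a simplified sketch built on stated assumptions; it is not the paper's formulation. For the front end it uses a common local plane fit to event timestamps to obtain normal flow (the paper performs its own derivation from spatio-temporal gradients), and depth Z is simply taken as given, standing in for the stereo matching step, which is not shown. For the back end it assumes the standard instantaneous motion-field model of a calibrated camera, angular velocity supplied by the gyroscope, a piecewise-linear velocity curve (a degree-1 B-spline) as the continuous-time representation, and accelerometer increments that are already gravity-compensated and expressed in the camera frame. All function names are hypothetical.

```python
import numpy as np


def normal_flow_from_plane_fit(xs, ys, ts):
    """Fit t = a*x + b*y + c to nearby event timestamps (least squares).

    The gradient g = (a, b) points along the edge normal with |g| = 1/speed,
    so return (unit normal n, speed m along n), or None if the surface is flat.
    """
    M = np.column_stack([xs, ys, np.ones(len(xs))])
    (a, b, _), *_ = np.linalg.lstsq(M, np.asarray(ts, dtype=float), rcond=None)
    g = np.array([a, b])
    g_norm = np.linalg.norm(g)
    if g_norm < 1e-9:
        return None
    return g / g_norm, 1.0 / g_norm


def motion_field_matrices(x, y):
    """A, B with flow = A @ v / Z + B @ omega at normalized coordinates (x, y)."""
    A = np.array([[-1.0, 0.0, x], [0.0, -1.0, y]])
    B = np.array([[x * y, -(1.0 + x * x), y], [1.0 + y * y, -x * y, -x]])
    return A, B


def estimate_velocity_knots(events, knot_times, acc_deltas, acc_weight=1.0):
    """Least-squares velocities at the knots of a piecewise-linear v(t).

    events: (t, x, y, Z, n, m, omega) tuples: timestamp, normalized coordinates,
      depth, unit direction n, signed normal-flow magnitude m along n,
      and gyroscope angular velocity omega.
    acc_deltas: (K-1, 3) velocity increments between knots from the accelerometer,
      assumed gravity-compensated and expressed in the camera frame.
    """
    knot_times = np.asarray(knot_times, dtype=float)
    K = len(knot_times)
    rows, rhs = [], []
    for t, x, y, Z, n, m, omega in events:
        # Locate the segment containing t; v(t) = (1 - s) v_k + s v_{k+1}.
        k = int(np.clip(np.searchsorted(knot_times, t, side="right") - 1, 0, K - 2))
        s = (t - knot_times[k]) / (knot_times[k + 1] - knot_times[k])
        A, B = motion_field_matrices(x, y)
        coeff = (n @ A) / Z  # normal flow only observes v along n
        row = np.zeros(3 * K)
        row[3 * k:3 * k + 3] = (1.0 - s) * coeff
        row[3 * k + 3:3 * k + 6] = s * coeff
        rows.append(row)
        rhs.append(m - n @ B @ omega)  # remove the rotational part of the flow
    for k in range(K - 1):
        for axis in range(3):
            # Accelerometer constraint: v_{k+1} - v_k = acc_deltas[k]
            row = np.zeros(3 * K)
            row[3 * (k + 1) + axis] = acc_weight
            row[3 * k + axis] = -acc_weight
            rows.append(row)
            rhs.append(acc_weight * acc_deltas[k][axis])
    sol, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return sol.reshape(K, 3)


# Front-end check: an edge moving at 50 units/s along the unit normal (0.6, 0.8).
gx, gy = np.meshgrid(np.arange(5.0), np.arange(5.0))
n_est, m_est = normal_flow_from_plane_fit(
    gx.ravel(), gy.ravel(), (0.6 * gx.ravel() + 0.8 * gy.ravel()) / 50.0)

# Back-end check: constant acceleration, so the piecewise-linear model is exact.
rng = np.random.default_rng(0)
knots = np.array([0.0, 0.05, 0.1])
v0, acc = np.array([0.5, -0.2, 1.0]), np.array([0.3, 0.1, -0.2])
events = []
for _ in range(200):
    t, (x, y), Z = rng.uniform(0.0, 0.1), rng.uniform(-0.5, 0.5, size=2), rng.uniform(1.0, 5.0)
    omega, theta = rng.normal(size=3), rng.uniform(0.0, 2.0 * np.pi)
    n = np.array([np.cos(theta), np.sin(theta)])
    A, B = motion_field_matrices(x, y)
    flow = A @ (v0 + acc * t) / Z + B @ omega
    events.append((t, x, y, Z, n, n @ flow, omega))
V = estimate_velocity_knots(events, knots, np.tile(acc * 0.05, (2, 1)))
```

Each event is evaluated on the velocity curve at its own timestamp, so events and IMU samples never have to be resampled onto a common clock; this is the practical benefit of a continuous-time formulation. Because normal flow observes only the velocity component along the edge normal, many events with varied edge directions are needed to constrain all three velocity components. A real system would also have to handle rotation between knots, sensor biases, noise, and outliers.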

Experiments and Results

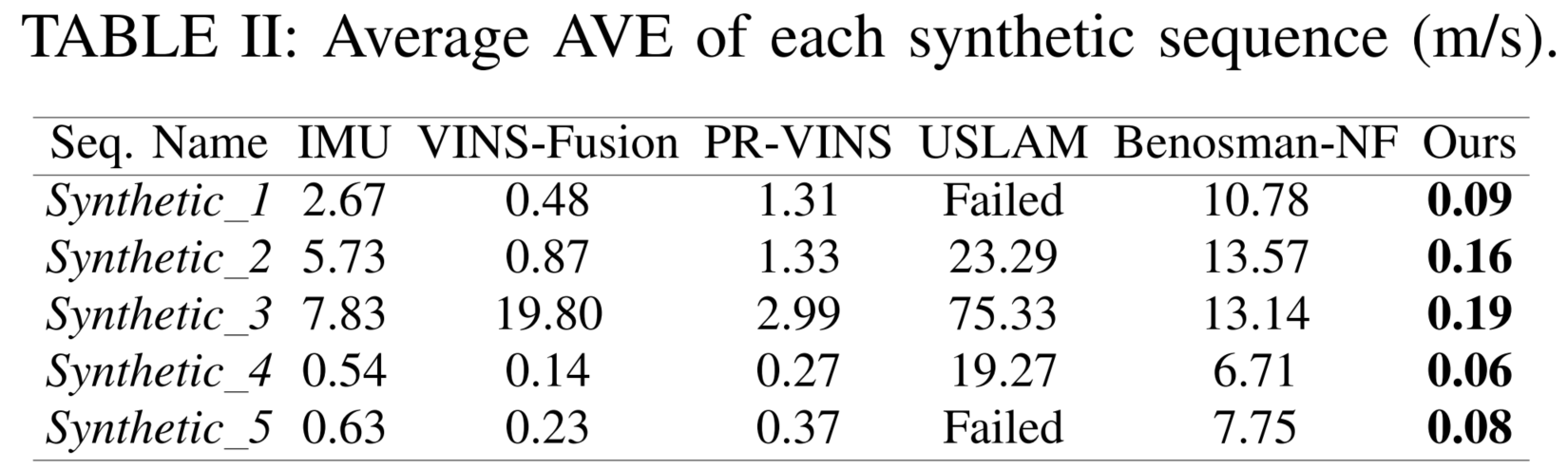

1. Simulation Experiments: To verify the effectiveness of the proposed method, the authors used the ESIM event-camera simulator to generate several sequences of aggressive drone maneuvers. They compared the linear velocity estimated by the proposed method with that of several other methods, using Absolute Velocity Error (AVE) and Relative Velocity Error (RVE) as evaluation metrics.

Experimental results show that the proposed method achieves the lowest AVE and RVE among the compared methods.
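The article names AVE and RVE without defining them. The sketch below assumes one plausible pair of definitions, which may differ from the paper's: AVE as the root-mean-square norm of the velocity error, and RVE as the mean error norm divided by the ground-truth speed. The function name and the `min_speed` cutoff are hypothetical.

```python
import numpy as np


def velocity_errors(v_est, v_gt, min_speed=1e-3):
    """Hypothetical AVE/RVE definitions, for illustration only.

    AVE: root-mean-square of the velocity error norm (m/s).
    RVE: mean of the error norm divided by ground-truth speed, skipping
    samples slower than min_speed to avoid dividing by near-zero speeds.
    """
    v_est = np.asarray(v_est, dtype=float)
    v_gt = np.asarray(v_gt, dtype=float)
    err = np.linalg.norm(v_est - v_gt, axis=1)
    speed = np.linalg.norm(v_gt, axis=1)
    ave = float(np.sqrt(np.mean(err ** 2)))
    moving = speed > min_speed
    rve = float(np.mean(err[moving] / speed[moving])) if moving.any() else float("nan")
    return ave, rve


v_gt = np.array([[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]])
v_est = np.array([[1.1, 0.0, 0.0], [0.0, 2.0, 0.2]])
ave, rve = velocity_errors(v_est, v_gt)
# AVE = sqrt((0.1**2 + 0.2**2) / 2) ≈ 0.158 m/s; RVE = (0.1/1 + 0.2/2) / 2 = 0.1
```

Under these definitions, AVE reports the error in absolute units, while RVE normalizes it by speed so that fast and slow segments can be compared on a common scale.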

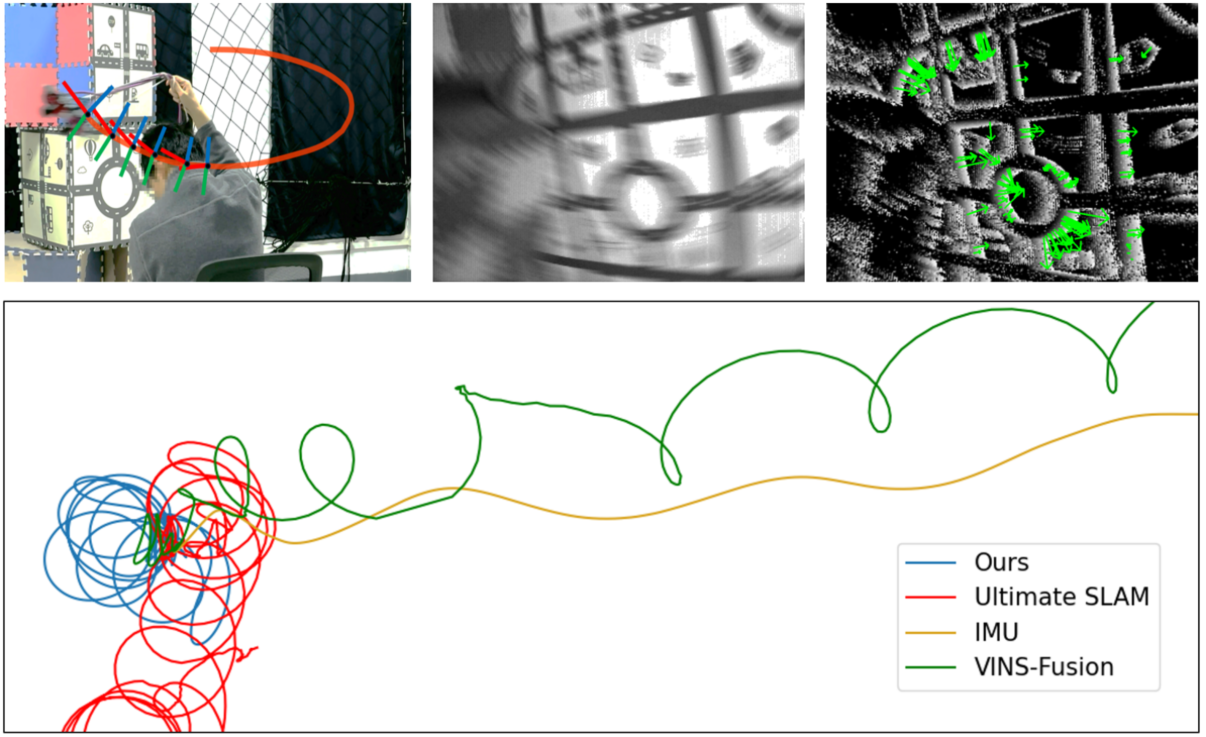

2. Real-world Experiments: To reproduce aggressive motion, the authors attached a stereo event camera to a rope and spun it around. The experiments were conducted in a 9 m × 6 m × 3.5 m room. The NOKOV optical motion capture system tracked the event camera's six-degree-of-freedom pose at 200 Hz with sub-millimeter accuracy, and these measurements served as the ground truth for validating the proposed method.
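The article does not say how ground-truth velocity was derived from the motion capture data. A common approach, assumed here, is to differentiate the 200 Hz position stream numerically. The helper below is hypothetical. In practice, positions are often low-pass filtered first because differentiation amplifies measurement noise, and the resulting world-frame velocity must be rotated into the camera frame (using the captured orientation) before it can be compared with a body-frame estimate.

```python
import numpy as np


def velocity_from_positions(positions, rate_hz=200.0):
    """World-frame velocity (m/s) from an (N, 3) position array sampled at rate_hz.

    np.gradient applies central differences to interior samples and
    one-sided differences at the two ends.
    """
    return np.gradient(np.asarray(positions, dtype=float), 1.0 / rate_hz, axis=0)


# One second of synthetic 200 Hz data moving at a constant (2, -1, 0.5) m/s.
t = np.arange(200) / 200.0
positions = np.outer(t, [2.0, -1.0, 0.5])
v = velocity_from_positions(positions)
```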

Comparison of the trajectories obtained by integrating the estimated velocities.

Experimental results indicate that the proposed method also exhibits low AVE on real data, verifying its effectiveness under real-world conditions.
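The trajectory comparison presumably integrates the estimated velocities over time, so any velocity bias shows up as growing position drift. A minimal sketch uses the cumulative trapezoidal rule, assuming the velocities are already expressed in a fixed world frame (body-frame estimates would first have to be rotated using the orientation). The function name is hypothetical.

```python
import numpy as np


def integrate_velocity(times, velocities, p0=None):
    """Positions (N, 3) from velocity samples via the cumulative trapezoidal rule."""
    times = np.asarray(times, dtype=float)
    velocities = np.asarray(velocities, dtype=float)
    dt = np.diff(times)[:, None]
    # Average velocity over each interval multiplied by its duration.
    steps = 0.5 * (velocities[1:] + velocities[:-1]) * dt
    start = np.zeros(velocities.shape[1]) if p0 is None else np.asarray(p0, dtype=float)
    return np.vstack([start, start + np.cumsum(steps, axis=0)])


# v(t) = (t, 1, 0) integrates to p(t) = (t^2 / 2, t, 0); the rule is exact for linear v.
times = np.linspace(0.0, 1.0, 101)
vel = np.column_stack([times, np.ones_like(times), np.zeros_like(times)])
traj = integrate_velocity(times, vel)
```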

Summary

The event-based visual-inertial velometer proposed in this paper outperforms the compared methods on multiple sequences from both simulated and real-world datasets, particularly under aggressive motion and in complex dynamic scenes. The experimental results demonstrate the method's advantages in real-time capability, accuracy, and robustness.

The sub-millimeter ground-truth data provided by the NOKOV motion capture system served as the benchmark for verifying the accuracy of the proposed method's linear velocity estimates.

Author profiles:

Zhou Yi: Professor and Ph.D. advisor at the School of Robotics, Hunan University; Director of the NAIL Laboratory, Hunan University. Main research areas: robot visual navigation (SLAM) and perception.

Lu Xiuyuan: Ph.D. student at the Hong Kong University of Science and Technology and visiting Ph.D. student at the NAIL Laboratory, Hunan University. Research focus: event-inertial perception and navigation systems.

Niu Junkai: Ph.D. student at the NAIL Laboratory, Hunan University. Research focus: SLAM.

Zhong Sheng: Ph.D. student at the NAIL Laboratory, Hunan University. Research focus: SLAM.

Shen Shaojie: Associate Professor at the Department of Electronic and Computer Engineering, Hong Kong University of Science and Technology; Director of the HKUST-DJI Joint Innovation Lab. Main research areas: Robotics and drones, state estimation, sensor fusion, localization and mapping, and autonomous navigation in complex environments.

Reference:

https://arxiv.org/html/2311.18189v2