The study explores human-robot hug interactions and proposes a HUG taxonomy of 16 hug types, derived from human demonstrations recorded with the NOKOV motion capture system. The taxonomy simplifies the classification of robotic hugs and improves humanoids' ability to mimic natural hugging behaviors, leading to a better user experience.

Citation

Yan, Zheng, et al. "A HUG taxonomy of humans with potential in human–robot hugs." Scientific Reports 14.1 (2024): 14212.

Research Background

Hugging is a vital social behavior that fosters emotional well-being and strengthens social bonds. However, modern lifestyles and an aging population have reduced opportunities for meaningful hugs, increasing loneliness and stress. Humanoid robots, which are increasingly integrated into social contexts, offer a potential way to fill this gap. Yet existing robotic systems struggle to replicate the adaptability and complexity of human hugs. This study addresses these challenges by proposing the HUG taxonomy, a systematic classification of human hugs. Built by combining NOKOV motion capture data with insights from psychology and robotics, the taxonomy enables robots to mimic human-like hugging behaviors effectively.

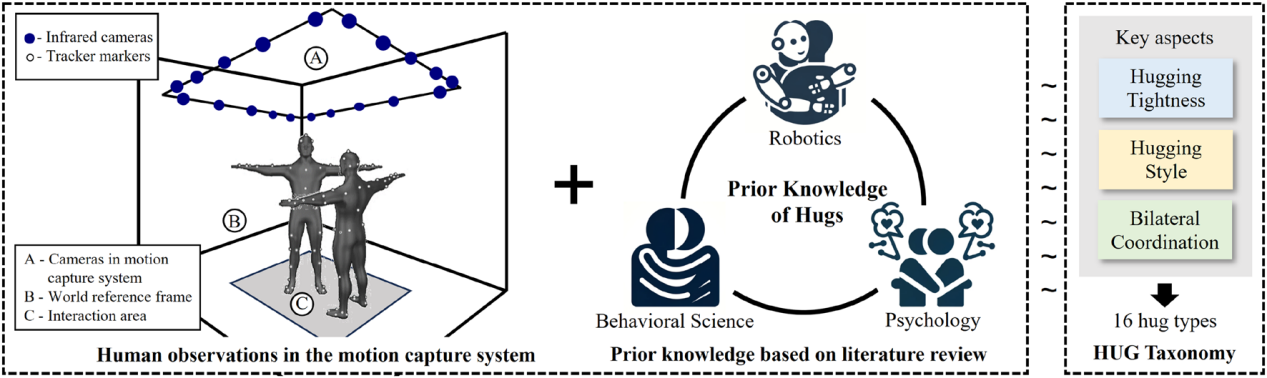

Overview of how the HUG taxonomy was developed. The HUG taxonomy (right) is derived from human demonstrations (left) and prior knowledge (middle).

Contributions

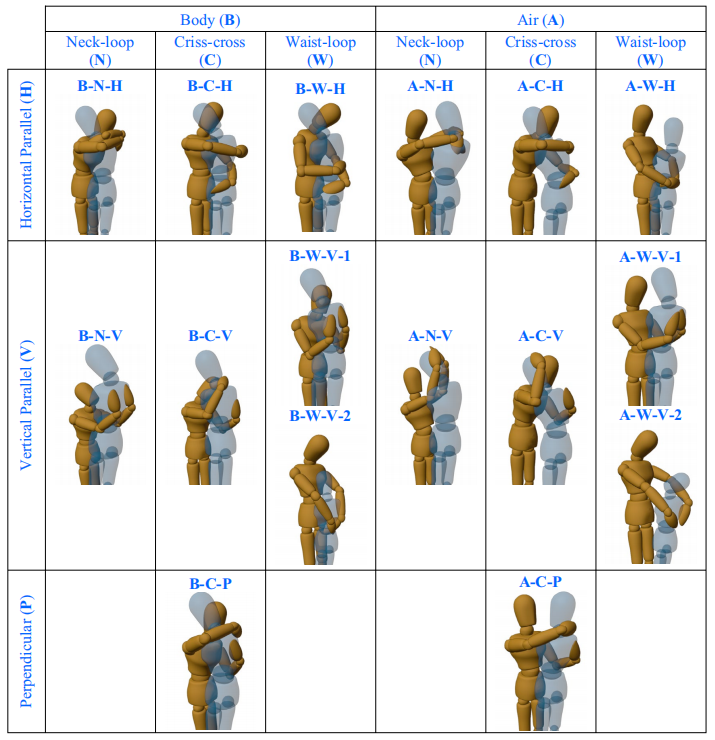

1. Development of a Comprehensive Taxonomy: The study proposes a novel HUG taxonomy based on three dimensions: hugging tightness (e.g., body vs. air hugs), style (e.g., criss-cross, neck-loop), and bilateral coordination (e.g., horizontal vs. vertical arm positioning). This taxonomy organizes the complexity of hugging behaviors into a structured framework.

2. Experimental Validation Using Motion Capture: Using the NOKOV motion capture system, the researchers recorded 379 hug demonstrations across scenarios such as social interactions and emotional expressions. This dataset validated the taxonomy's completeness: the taxonomy distinguished all observed hug types.

3.

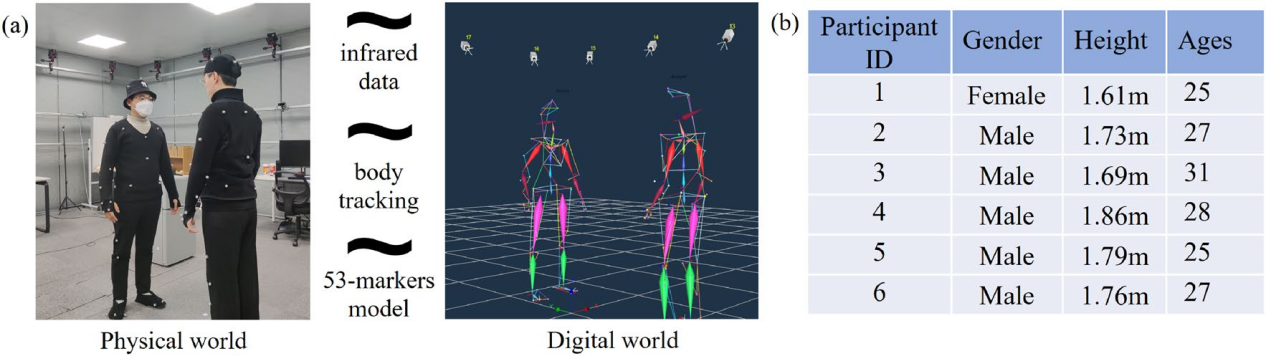

Motion capture experiments of the human demonstration. (a) Motion capture system. (b) Participant information.

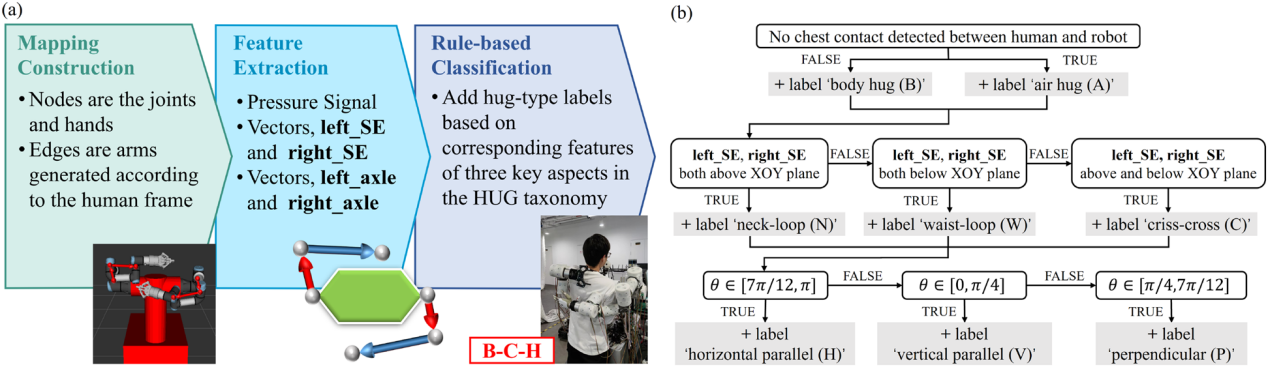

4. Integration with Robotic Systems: A rule-based classification system implemented the taxonomy in humanoid robots equipped with electronic skin (E-skin). The robots identified and performed specific hug types using pressure sensors and arm configurations .

Rule-based classification system. (a)The figure shows an example of the mapping with E-skinsignal (shown as a hexagon), as well as the detected hugging category as B-C-H hug. (b) Decision tree for distinguishing hug types.

4. Advancing Human–Robot Interaction: The taxonomy enhances robots' ability to mimic human behaviors, fostering trust and comfort in social applications. It also informs the design of adaptive, context-sensitive control strategies for robotic hugs.
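The three taxonomy dimensions and the rule-based classifier described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The single-letter codes (B, A, C, N, H, V) are inferred from the example label "B-C-H" and are assumptions. The sensor features (`torso_pressure`, `hands_on_neck`, `arm_height_gap`) and both thresholds are hypothetical. Reading the horizontal/vertical distinction from the height offset between the two arms is also an assumption. Only the example values named in this summary are encoded, so the sketch covers 8 combinations rather than the paper's 16 types. A simple rule cascade stands in for the paper's decision tree, whose actual tests and ordering may differ.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product


class Tightness(Enum):
    BODY = "B"  # assumed code: torso-to-torso contact ("body hug")
    AIR = "A"   # assumed code: little or no torso contact ("air hug")


class Style(Enum):
    CRISS_CROSS = "C"  # assumed code
    NECK_LOOP = "N"    # assumed code


class Coordination(Enum):
    HORIZONTAL = "H"  # assumed code
    VERTICAL = "V"    # assumed code


@dataclass(frozen=True)
class HugType:
    tightness: Tightness
    style: Style
    coordination: Coordination

    def label(self) -> str:
        # Builds labels in the "B-C-H" format seen in the figure caption.
        return f"{self.tightness.value}-{self.style.value}-{self.coordination.value}"


@dataclass
class SensorFrame:
    # Hypothetical features; a real system would derive them from E-skin and joint data.
    torso_pressure: float  # normalized mean E-skin pressure on the torso, 0..1
    hands_on_neck: bool    # contact detected on neck/upper-back patches
    arm_height_gap: float  # vertical offset between the two arms, in metres


# Hypothetical thresholds, chosen only for illustration.
PRESSURE_THRESHOLD = 0.2
HEIGHT_GAP_THRESHOLD = 0.15


def classify(frame: SensorFrame) -> HugType:
    """Depth-3 rule cascade: one test per taxonomy dimension."""
    tightness = Tightness.BODY if frame.torso_pressure >= PRESSURE_THRESHOLD else Tightness.AIR
    style = Style.NECK_LOOP if frame.hands_on_neck else Style.CRISS_CROSS
    coordination = (
        Coordination.VERTICAL
        if frame.arm_height_gap >= HEIGHT_GAP_THRESHOLD
        else Coordination.HORIZONTAL
    )
    return HugType(tightness, style, coordination)


# Every combination of the example values encoded above (not the full 16-type taxonomy).
ALL_ENCODED_TYPES = [HugType(t, s, c) for t, s, c in product(Tightness, Style, Coordination)]

if __name__ == "__main__":
    frame = SensorFrame(torso_pressure=0.6, hands_on_neck=False, arm_height_gap=0.05)
    print(classify(frame).label())  # B-C-H
    print(len(ALL_ENCODED_TYPES))   # 8
```

Representing each dimension as its own enum keeps every label a valid combination by construction. Extending the sketch toward the full taxonomy would mean adding the remaining dimension values and replacing the hypothetical thresholds with rules fitted to real E-skin data.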

Experiments

The Human Demonstration section of the study focuses on analyzing human hugging behaviors using the NOKOV motion capture system. In a controlled environment equipped with 20 infrared cameras, participants wearing motion capture suits performed various hugging actions under different scenarios, such as social occasions, emotional expressions, and functional movements. The setup allowed for the collection of precise motion data, capturing 379 hug samples.

Each participant pair alternated between the initiator and receiver roles, which ensured diverse demonstrations. Scenarios were either freely chosen by the participants or guided by prompts to reflect realistic hugging behaviors. The data revealed key physical properties of hugs, such as arm positions, body alignment, and contact pressure. This information was instrumental in constructing the HUG taxonomy, which categorizes hugs by tightness, style, and bilateral coordination. The demonstrations also served as the foundation for mapping human hugging patterns to robotic systems, enabling robots to replicate these behaviors with high fidelity.

HUG taxonomy, with all hug types arranged in a matrix. In each image, the pose of the brown puppet (not the blue one) shows the hug type of interest; to show its details clearly, the blue puppet is rendered transparent and without arms. All images are taken from the same viewpoint of a 3D model built in Blender from the motion capture data.
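The completeness check on the 379 demonstrations (every observed hug must fall into some hug type) can be illustrated with a small coverage report. This is a hypothetical sketch: the summary does not describe how the authors performed the check, and the labels below are made-up examples in the "B-C-H" style, not data from the study. The function name `coverage_report` is likewise illustrative.

```python
from collections import Counter
from typing import Iterable


def coverage_report(observed: Iterable[str], taxonomy: set[str]) -> dict:
    """Summarize how annotated hug demonstrations map onto a set of taxonomy labels."""
    counts = Counter(observed)
    return {
        "total": sum(counts.values()),
        "per_type": dict(counts),
        # Demonstrations no category covers; empty means the taxonomy is complete for this data.
        "unclassified": sorted(label for label in counts if label not in taxonomy),
        # Categories with no demonstration; allowed, but worth reporting.
        "unobserved": sorted(taxonomy - set(counts)),
    }


if __name__ == "__main__":
    # Made-up labels and a made-up three-type taxonomy, for illustration only.
    taxonomy = {"B-C-H", "B-N-H", "A-C-V"}
    observed = ["B-C-H", "B-C-H", "A-C-V", "B-N-V"]
    report = coverage_report(observed, taxonomy)
    print(report["unclassified"])  # ['B-N-V']
    print(report["unobserved"])    # ['B-N-H']
```

A non-empty `unclassified` list signals a gap in the taxonomy, whereas a non-empty `unobserved` list only means the dataset did not exercise every category.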

Conclusions

This study introduces the HUG taxonomy, which categorizes human hugs into 16 types based on tightness, style, and bilateral coordination. Validated using the NOKOV motion capture system, the taxonomy bridges gaps in human-robot interaction by enabling humanoid robots to replicate human-like hugs. Experimental results show that the taxonomy improves robots' ability to execute adaptive and realistic hugging behaviors. Future research will focus on refining robotic sensing and control systems to support dynamic, context-aware interactions.

Author information

Yan Zheng^1,2,3, Wang Zhipeng^2,3,4, Ren Ruochen^1,2,3, Wang Chengjin^1,2,3, Jiang Shuo^2,3,4, Zhou Yanmin^2,3,4, He Bin^2,3,4

1 Shanghai Research Institute for Intelligent Autonomous Systems, Tongji University, Shanghai 201210, China.

2 State Key Laboratory of Intelligent Autonomous Systems, Shanghai 201210, China.

3 Frontiers Science Center for Intelligent Autonomous Systems, Shanghai 201210, China.

4 College of Electronics & Information Engineering, Tongji University, Shanghai 201804, China.

Yan Zheng - Ph.D. candidate in Intelligent Science and Technology, focusing on humanoid robot human-like natural interaction.

Wang Zhipeng - Researcher, doctoral supervisor, with main research directions in multimodal perception-based robot dexterous manipulation, legged robot autonomous walking in unstructured environments, and soft robot intelligent design and modeling.

Ren Ruochen - Ph.D. candidate in Intelligent Science and Technology, focusing on multimodal imitation learning for robots.

Wang Chengjin - Ph.D. candidate in Intelligent Science and Technology, with main research directions in pattern recognition and embodied intelligence.

Jiang Shuo - Associate Professor, doctoral supervisor, supported by the Shanghai Youth Science and Technology Talent Sailing Program, with main research directions in multimodal human-robot interaction perception and embodied intelligence.

Zhou Yanmin - Associate Professor, doctoral supervisor, supported by the Shanghai Youth Science and Technology Talent Sailing Program, with main research directions in biomimetic flexible perception for robots, active interaction with the environment, and cognition.

He Bin - Tenured Professor, recipient of the National Natural Science Foundation Outstanding Youth Fund, Executive Director of the Shanghai Autonomous Intelligent Unmanned System Science Center, Deputy Director of the National Key Laboratory of Autonomous Intelligent Unmanned Systems. Main research directions include intelligent robotics, intelligent perception, intelligent detection, unmanned systems, and digital twins.