A research team from Peking University and Dalian Maritime University jointly designed a deep learning-assisted biomimetic underwater triboelectric whisker sensor (UTWS) for passive perception of various hydrodynamic flow fields. The paper was published in the journal Nano Energy (Impact Factor: 16.8) under the title “Deep-learning-assisted triboelectric whisker for near field perception and online state estimation of underwater vehicle.”

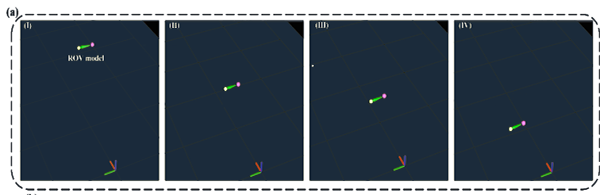

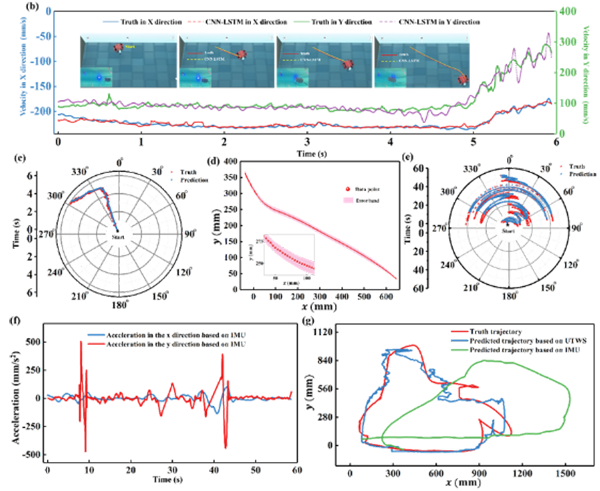

The authors used the NOKOV motion capture system to support the deep learning-assisted biomimetic whisker sensor in achieving near-field perception and online state estimation for underwater vehicles. Using real-time motion data of the vehicle (speed and acceleration) captured by the underwater motion capture system, they created a dataset that links the vehicle's motion states with the electrical signals from the whisker sensor. Through deep learning analysis of the multi-channel signals, the study elucidated the high-order mapping between the whisker sensor output and the robot's motion state, enabling real-time speed estimation for underwater vehicles. The UTWS can identify several characteristics of a 2D flow field, including flow velocity, angle of attack, and wake. This research not only marks a significant technological advancement but also provides a new approach to navigation and positioning for underwater intelligent devices.
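The dataset construction described above can be pictured with a minimal sketch. It assumes the motion capture positions and the whisker signals have already been time-synchronized and resampled to a common rate; the function names, window length, stride, and synthetic data are all illustrative and are not taken from the paper.

```python
import numpy as np

def speed_from_positions(positions, fs):
    """Finite-difference speed (m/s) from an (N, 3) array of positions sampled at fs Hz."""
    velocity = np.gradient(positions, 1.0 / fs, axis=0)
    return np.linalg.norm(velocity, axis=1)

def build_dataset(signals, speed, window, stride):
    """Slice (N, C) sensor signals into windows, each labeled with the mean speed over that window."""
    starts = range(0, len(signals) - window + 1, stride)
    X = np.stack([signals[s:s + window] for s in starts])
    y = np.array([speed[s:s + window].mean() for s in starts])
    return X, y

# Synthetic stand-in data (not from the paper): 10 s at 100 Hz, 4 signal channels.
fs = 100
t = np.arange(1000) / fs
positions = np.column_stack([0.5 * t, np.zeros_like(t), np.zeros_like(t)])  # 0.5 m/s along x
signals = np.random.default_rng(0).normal(size=(1000, 4))

speed = speed_from_positions(positions, fs)
X, y = build_dataset(signals, speed, window=100, stride=50)
print(X.shape, y.shape)
```

Each row of `X` is a multi-channel signal window and each entry of `y` is the matching speed label, which is the kind of input/target pair a regression network would be trained on.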

Background:

Underwater sensing technology plays an important role in various fields, including underwater target detection and tracking systems and underwater vehicle swarm operation. Existing underwater sensing primarily relies on optical (laser-based) and ultrasonic (sonar-based) technologies. However, both laser and ultrasonic methods are affected by numerous interference factors in underwater environments, which reduces their sensing range and accuracy. Additionally, sonar, as an active sensing method, faces challenges such as high energy consumption, complex structure, and susceptibility to detection. Consequently, many researchers are exploring alternative sensing technologies, including hydrodynamic sensing, to enhance the perceptual capabilities of underwater intelligent devices.

The tactile organs of marine organisms can accurately measure and identify their surrounding environment. Inspired by the sensory behaviors of marine life, researchers have designed various underwater biomimetic tactile sensors, demonstrating the potential of underwater biomimetic tactile sensing. However, tactile sensing devices still face challenges such as a low signal-to-noise ratio, low sensitivity, and poor adaptability. Triboelectric nanogenerators (TENGs) offer an innovative electromechanical conversion method whose primary advantage is the ability to convert noisy mechanical disturbances in the environment into high-amplitude electrical signals. This study designs a biomimetic underwater triboelectric whisker sensor capable of passively sensing various hydrodynamic flow fields, and uses a deep learning model to enable online speed estimation for underwater vehicles.

Research Highlights:

1. The sensing unit employs a dual-chamber shielding technology to minimize signal interference from ions in the water.

2. The UTWS offers a fast response time of 21 ms, a high sensitivity of 3.16 V/(m·s⁻¹), and a signal-to-noise ratio of 61.66 dB.

3. An underwater vehicle equipped with the UTWS can accurately perform online speed estimation, with a root mean square error of approximately 0.093 in validation scenarios.
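For readers unfamiliar with the figures of merit quoted above, the sketch below shows how such quantities are commonly computed. These are standard textbook definitions; the article does not state which exact definitions the authors used, so the amplitude-ratio convention for SNR and the linear-fit definition of sensitivity are assumptions, and the function names are illustrative.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean square error between reference and estimated values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def snr_db(signal_amplitude, noise_amplitude):
    """Signal-to-noise ratio in dB, assuming an amplitude (voltage) ratio."""
    return float(20.0 * np.log10(signal_amplitude / noise_amplitude))

def sensitivity(flow_velocity, voltage):
    """Slope of a least-squares line fit of voltage against flow velocity, in V/(m/s)."""
    slope, _intercept = np.polyfit(flow_velocity, voltage, 1)
    return float(slope)

# Toy numbers for illustration only, not measurements from the paper.
print(rmse([0.0, 0.0], [3.0, 4.0]))
print(snr_db(1000.0, 1.0))
print(sensitivity([0.0, 1.0, 2.0], [0.0, 2.0, 4.0]))
```

Under the amplitude-ratio convention, every 20 dB corresponds to a tenfold ratio between signal and noise amplitude.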

Test Experiments:

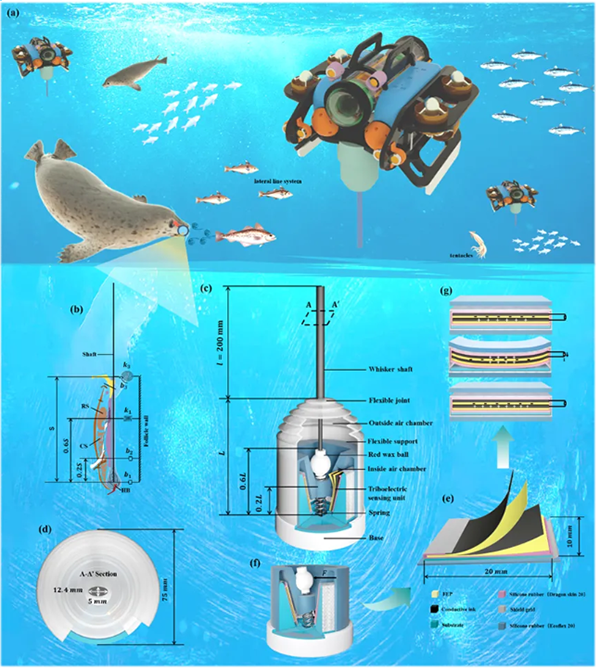

The biomimetic underwater triboelectric whisker sensor (UTWS) primarily consists of an elliptical whisker shaft with an aspect ratio of 0.403, four flexible triboelectric sensing units emulating the neural structure of follicle-sinus complexes, and a flexible corrugated joint simulating the epidermis of marine animals' cheeks.

Structure and Working Mechanism of the Underwater Triboelectric Whisker Sensor (UTWS). a. Application of UTWS in passive sensing of hydrodynamic flow fields. b. Neural structure of the follicle-sinus complex. c. Basic structure of the UTWS. d. Top view of the UTWS. e, f, g. Working mechanism of the UTWS, illustrating the deformation of the sensing units and electron flow in response to external stimuli.

In the design process, a dual-chamber shielding technology was used to minimize interference from ions in the water. The UTWS achieved a fast response time of 21 ms and a high signal-to-noise ratio of 61.66 dB. By processing the multi-channel signals with deep learning, underwater vehicles equipped with the UTWS can perform online speed estimation, with a root mean square error of approximately 0.093 in validation tests. These results indicate that the proposed deep learning-assisted sensing technology based on the UTWS has potential as an integrated tool for underwater vehicles in local navigation tasks.

Validation of UTWS in ROV Online State Estimation. a. Random turning motion data captured by NOKOV. b. Real-time speed estimation of the ROV. c. Angular velocity of the ROV along its turning motion trajectory. d. Error band of the predicted trajectory. e. Angular velocity of the ROV in circular motion trajectory. f. Acceleration based on the Inertial Measurement Unit (IMU). g. Comparison between the actual trajectory, UTWS-based predicted trajectory, and IMU-based predicted trajectory.
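The article does not describe how the predicted trajectories in the comparison were obtained. One common approach, shown here purely as an assumption, is planar dead reckoning: integrating an estimated speed together with an angular velocity over time. The function name and the forward-Euler integration scheme are illustrative choices, not the authors' method.

```python
import numpy as np

def dead_reckon(speed, yaw_rate, dt, x0=0.0, y0=0.0, heading0=0.0):
    """Integrate speed (m/s) and yaw rate (rad/s) into an (N+1, 2) planar trajectory.

    Uses forward-Euler steps: each step advances along the heading held at its start.
    """
    speed = np.asarray(speed, dtype=float)
    yaw_rate = np.asarray(yaw_rate, dtype=float)
    # Heading at the start of each step.
    heading = heading0 + np.concatenate(([0.0], np.cumsum(yaw_rate[:-1]) * dt))
    x = x0 + np.concatenate(([0.0], np.cumsum(speed * np.cos(heading)) * dt))
    y = y0 + np.concatenate(([0.0], np.cumsum(speed * np.sin(heading)) * dt))
    return np.column_stack([x, y])

# Toy example: 1 m/s straight ahead for 1 s.
straight = dead_reckon(np.ones(10), np.zeros(10), dt=0.1)
print(straight[-1])
```

Because every step accumulates the error of the previous ones, the quality of the speed estimate directly bounds how far such a trajectory drifts from the motion capture ground truth.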

Inspired by the sensitive whiskers of harbor seals, this study designs a deep learning-assisted biomimetic underwater triboelectric whisker sensor (UTWS) for passive perception of various hydrodynamic flow fields and real-time state estimation of underwater vehicles. The NOKOV motion capture system provides high-precision underwater vehicle positioning data.