Professor Wang Wei's team from the Biomedical Engineering Research Center at Chongqing University of Posts and Telecommunications recently published a paper titled “Effects of Dynamic IMU-to-Segment Misalignment Error on 3-DOF Knee Angle Estimation in Walking and Running” in the SCI-indexed journal Sensors. The paper introduces a new algorithm for dynamically aligning inertial measurement units (IMUs) with limb segments.

Background

Motion capture technology plays a crucial role in fields including rehabilitation, competitive sports, human-computer interaction, and identity recognition. Accurate joint angle measurement is a key requirement in many of these applications.

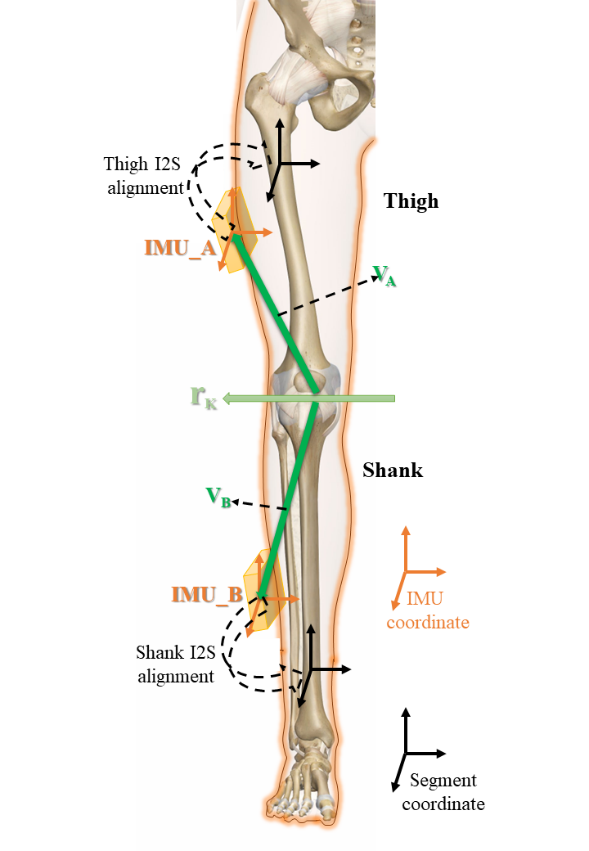

Using IMUs to acquire limb posture data involves transformations between the global coordinate system, the sensor coordinate system, and the limb coordinate system. The accuracy of the alignment between the IMU coordinate system and the limb coordinate system (IMU-to-Segment, I2S) determines how faithfully the sensor output represents the motion of the limb it is attached to. This makes calibration a crucial step in computing human joint angles.
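The chain of coordinate systems described above can be illustrated with a minimal Python sketch. It assumes orientations are unit quaternions in (w, x, y, z) order and that the I2S alignment is a fixed rotation from the limb frame to the sensor frame. The function names and the sample angles are hypothetical and are not taken from the paper.

```python
import math

def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def quat_conj(q):
    """Conjugate, which is the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def quat_from_axis_angle(axis, angle_deg):
    """Unit quaternion for a rotation of angle_deg about the given axis."""
    half = math.radians(angle_deg) / 2.0
    norm = math.sqrt(sum(c * c for c in axis))
    s = math.sin(half) / norm
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)

def segment_orientation(q_global_sensor, q_sensor_segment):
    """Limb orientation in the global frame: global<-sensor, then sensor<-limb."""
    return quat_mul(q_global_sensor, q_sensor_segment)

def angle_between_deg(q1, q2):
    """Smallest rotation angle (degrees) separating two orientations."""
    w = abs(quat_mul(quat_conj(q1), q2)[0])
    return math.degrees(2.0 * math.acos(min(1.0, w)))

# Hypothetical values: sensor orientation reported by the IMU and the I2S alignment.
q_gs = quat_from_axis_angle((0, 0, 1), 30.0)
q_sb = quat_from_axis_angle((0, 0, 1), 10.0)
q_segment = segment_orientation(q_gs, q_sb)

# An I2S alignment that is off by 5 degrees about the x axis (illustrative only).
q_sb_wrong = quat_mul(q_sb, quat_from_axis_angle((1, 0, 0), 5.0))
q_segment_wrong = segment_orientation(q_gs, q_sb_wrong)

print(angle_between_deg(q_segment, q_segment_wrong))
```

In this sketch, any error in the I2S rotation carries over directly into the estimated limb orientation, which is why the alignment step matters so much.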

Current methods for aligning IMUs with the body include manual alignment, pre-motion alignment, additional data fusion, and motion model alignment. Of these, dynamic alignment methods based on motion models require neither specialized personnel nor additional equipment, and they can correct for errors that arise during motion.

Schematic diagram of IMU and limb coordinate alignment

Research Content

Based on a motion constraint model, the research team introduced a Discrete Particle Swarm Optimization (DPSO) algorithm with a cross factor to perform dynamic alignment. Using the resulting dynamic alignment parameters, they computed three-degree-of-freedom (3-DOF) joint angles from quaternions.
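As a rough illustration of how 3-DOF joint angles can be derived from quaternions, the sketch below computes the rotation of the shank relative to the thigh and decomposes it into three angles. The ZYX Euler decomposition, the mapping of axes to anatomical angles, and all function names are assumptions made for illustration. The paper's actual angle convention and the DPSO alignment step are not reproduced here.

```python
import math

def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def quat_conj(q):
    """Conjugate, which is the inverse for a unit quaternion."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def quat_from_axis_angle(axis, angle_deg):
    """Unit quaternion for a rotation of angle_deg about the given axis."""
    half = math.radians(angle_deg) / 2.0
    norm = math.sqrt(sum(c * c for c in axis))
    s = math.sin(half) / norm
    return (math.cos(half), axis[0] * s, axis[1] * s, axis[2] * s)

def joint_angles_deg(q_thigh, q_shank):
    """Angles (about x, y, z) of the shank relative to the thigh, in degrees.

    Both inputs are limb-segment orientations in the same global frame,
    i.e. after the I2S alignment has already been applied.
    Uses a ZYX Euler decomposition (an assumed convention).
    """
    w, x, y, z = quat_mul(quat_conj(q_thigh), q_shank)
    # Clamp to guard against rounding slightly outside [-1, 1].
    sin_y = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    about_x = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    about_y = math.asin(sin_y)
    about_z = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return tuple(math.degrees(a) for a in (about_x, about_y, about_z))

# Hypothetical pose: the whole leg is turned 20 degrees about the vertical axis,
# and the shank is rotated 60 degrees about the thigh's x axis.
q_thigh = quat_from_axis_angle((0, 0, 1), 20.0)
q_shank = quat_mul(q_thigh, quat_from_axis_angle((1, 0, 0), 60.0))
print(joint_angles_deg(q_thigh, q_shank))
```

Because the joint angle depends only on the relative rotation between the two segments, the shared 20-degree turn of the whole leg cancels out in this example.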

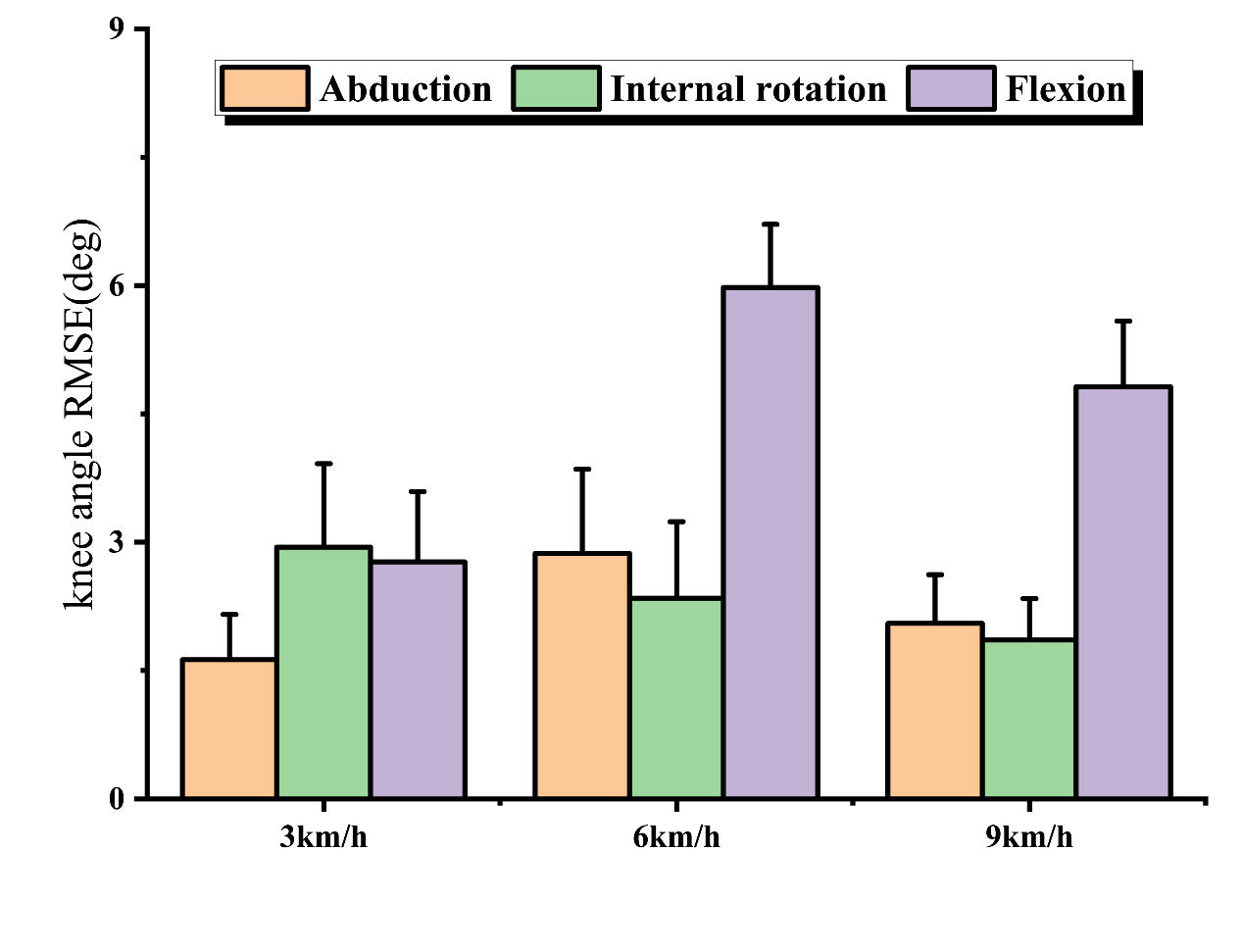

Furthermore, by intentionally introducing errors, the researchers investigated the impact of IMU misalignment on joint angle calculations during walking (3 km/h), light jogging (6 km/h), and normal running (9 km/h). They explored various kinematic differences underlying these effects.

Algorithm Validation

To validate the joint angle computation algorithm, the research team used the NOKOV sub-millimeter optical motion capture system to obtain ground-truth limb posture information. Three non-coplanar markers were placed on each subject's leg segments to define rigid bodies, which were tracked by the optical motion capture cameras. The NOKOV motion capture software recorded the rotational posture of each limb segment, and the 3-DOF joint angles were calculated from this motion capture data. These values were then compared with the joint angles derived from the IMU data.

The NOKOV motion capture system was used to obtain real limb posture information.

The results indicate that, in the three motion scenarios, the root mean square error (RMSE) of the joint angles ranges from 1.2 to 5.2 degrees. The joint angle computation algorithm shows good performance, and the misalignment errors between IMUs and limbs in different movements confirm the relevant kinematic differences in human motion.
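For reference, the RMSE between an IMU-derived joint angle series and the optical reference can be computed as in the minimal sketch below. The sample values are made up for illustration and are not data from the paper. The two series are assumed to be time-synchronized and sampled at the same instants.

```python
import math

def rmse(estimated, reference):
    """Root mean square error between two equally long angle series (degrees)."""
    if len(estimated) != len(reference) or not estimated:
        raise ValueError("series must be non-empty and of equal length")
    squared = [(e - r) ** 2 for e, r in zip(estimated, reference)]
    return math.sqrt(sum(squared) / len(squared))

# Made-up knee flexion samples in degrees (illustrative only).
imu_angles = [5.0, 20.5, 41.0, 58.5, 30.0]
optical_angles = [4.0, 22.0, 40.0, 60.0, 29.0]
print(rmse(imu_angles, optical_angles))
```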

Comparison of IMU joint angle data with optical motion capture data

Bibliography: