The team of Associate Professor Lei Xiaokang from the School of Information and Control Engineering at Xi'an University of Architecture and Technology, in collaboration with the team of Professor Peng Xingguang from the School of Marine Science and Technology at Northwestern Polytechnical University, has developed an efficient and flexible approach to human-swarm robot interaction based on virtual reality (VR) and eye-tracking technology. The related paper, "Human-Swarm Robots Interaction Approach Based on Virtual Reality and Eye Tracking," was published in Information and Control, a journal in the field of information and control science.

The NOKOV motion capture system links the real robots' motion states to their digital twins, so that the robots' motion is updated in the VR scene in real time. This enhances the operator's sense of immersion and makes it more efficient to perceive the swarm's state.

Citation

XU Mingyu, LEI Xiaokang, DUAN Zhongxing, XIANG Yalun, DUAN Mengyuan, ZHENG Zhicheng, PENG Xingguang. Human-Swarm Robots Interaction Approach Based on Virtual Reality and Eye Tracking[J]. Information and Control, 2024, 53(2): 199-210. DOI: 10.13976/j.cnki.xk.2023.2548

Background

Swarm robots offer high robustness, scalability, and parallel collaborative operation, which give them unique advantages in large-scale, multi-objective tasks. Combining swarms with human decision-making intelligence can effectively improve the reliability, adaptability, and intelligence of swarm robot systems. However, traditional Human-Swarm Interaction (HSI) methods that rely on media such as gestures and voice struggle with the large number of entities to be controlled and with the difficulty of perceiving the swarm's state, which results in low efficiency and limited flexibility. To address these problems and achieve efficient, convenient, and highly immersive HSI, the research team developed an approach based on VR and eye-tracking technology.

Contributions

1. Proposes a novel HSI method that integrates VR and eye tracking and offers strong immersion, flexibility, and convenience.

2. Uses VR-based interaction to overcome limitations such as robots operating beyond the operator's line of sight and adverse environmental conditions, while feeding back the swarm's state through a digital twin of the robot swarm.

Interaction Process

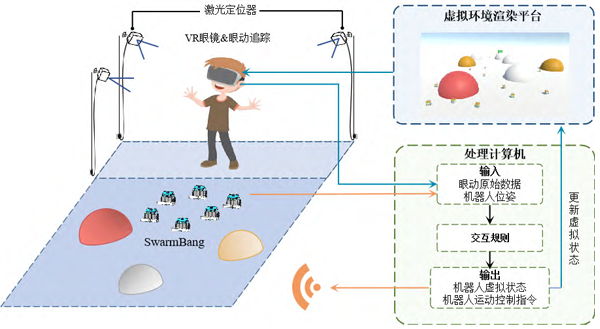

The proposed HSI method based on VR and eye tracking involves three stages: constructing the virtual scene, collecting and processing eye-tracking data, and sending and executing commands.

Constructing the Virtual Scene: A VR scene of the swarm robots and their environment is built and presented to the operator through VR glasses. The NOKOV motion capture system synchronizes the real robots' motion states with their digital twins in the virtual environment in real time.
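To make the synchronization step more concrete, here is a minimal Python sketch of how a digital-twin scene might be updated from motion capture frames. It does not model any NOKOV SDK interface; it assumes, hypothetically, that each frame arrives as a timestamp plus a dictionary mapping robot IDs to planar poses (x, y, yaw). The names `TwinScene` and `apply_frame` are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Hypothetical planar pose: (x [m], y [m], yaw [rad]) in the motion capture frame.
Pose = Tuple[float, float, float]


@dataclass
class TwinScene:
    """Illustrative store for the poses of the virtual robots shown in VR."""

    poses: Dict[str, Pose] = field(default_factory=dict)
    last_stamp: float = float("-inf")

    def apply_frame(self, stamp: float, frame: Dict[str, Pose]) -> int:
        """Copy tracked poses onto existing twins and return how many twins were updated.

        A frame that is not newer than the last applied one is ignored, so a
        delayed packet cannot move a twin back to an older pose.
        """
        if stamp <= self.last_stamp:
            return 0
        updated = 0
        for robot_id, pose in frame.items():
            if robot_id in self.poses:  # skip tracked bodies that have no twin
                self.poses[robot_id] = pose
                updated += 1
        self.last_stamp = stamp
        return updated


scene = TwinScene(poses={"r1": (0.0, 0.0, 0.0), "r2": (1.0, 0.0, 0.0)})
print(scene.apply_frame(0.01, {"r1": (0.1, 0.0, 0.0), "r9": (5.0, 5.0, 0.0)}))  # → 1
print(scene.apply_frame(0.005, {"r1": (9.0, 9.0, 0.0)}))  # → 0 (stale frame)
```

Dropping stale frames is only one possible design choice; the synchronization mechanism actually used with the motion capture system is not described in the article.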

Eye-tracking Data Collection and Processing: Eye-tracking sensors mounted on the VR glasses capture the operator's eye movements in real time and transmit the data to a processing computer, where they are preprocessed and parsed to generate motion control commands.
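The article does not specify the preprocessing steps. As one plausible example, the Python sketch below assumes the eye tracker delivers gaze as a normalized 2D offset from the view centre, with `None` marking invalid samples such as blinks. It discards invalid samples and applies a moving average over a short window to suppress jitter. `GazeSmoother` and its `window` parameter are hypothetical.

```python
from collections import deque
from typing import Deque, Optional, Tuple

# Hypothetical gaze sample: normalized offset from the view centre, each axis in [-1, 1].
Gaze = Tuple[float, float]


class GazeSmoother:
    """Moving-average filter over the most recent valid gaze samples."""

    def __init__(self, window: int = 5) -> None:
        # deque with maxlen keeps only the newest `window` samples
        self.buf: Deque[Gaze] = deque(maxlen=window)

    def push(self, sample: Optional[Gaze]) -> Optional[Gaze]:
        """Add a sample (None = invalid, e.g. a blink) and return the smoothed gaze."""
        if sample is not None:
            self.buf.append(sample)
        if not self.buf:
            return None  # no valid sample seen yet
        n = len(self.buf)
        return (sum(g[0] for g in self.buf) / n, sum(g[1] for g in self.buf) / n)


smoother = GazeSmoother(window=3)
for raw in [(0.2, 0.0), None, (0.4, 0.1), (0.6, -0.1)]:
    print(smoother.push(raw))
```

Holding the last smoothed value through a blink, rather than emitting no output, keeps the downstream command stable for brief tracking dropouts; a real system might instead time out after a longer gap.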

Command Transmission and Execution: The control commands derived from eye tracking are sent to the swarm robots, which execute the corresponding actions.

Overall scheme of the human-swarm robot interaction system

Control Rules

Swarm Roaming Interaction Rule: Maps the operator's up/down/left/right eye movements directly to the swarm's direction of motion.
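A minimal sketch of the roaming rule, assuming a smoothed, normalized gaze offset as input: the dominant axis of the offset selects one of the four directions, a central dead zone means "stop", and the direction is scaled to a fixed planar speed. The dead-zone width, the speed, and the mapping of "up" in the operator's view to +y in the arena are all assumptions, not values from the paper.

```python
from typing import Dict, Optional, Tuple

# Assumed mapping from gaze direction to a unit vector in the arena's ground plane.
DIRECTIONS: Dict[str, Tuple[float, float]] = {
    "up": (0.0, 1.0),
    "down": (0.0, -1.0),
    "left": (-1.0, 0.0),
    "right": (1.0, 0.0),
}


def roaming_command(gaze: Tuple[float, float], dead_zone: float = 0.3) -> Optional[str]:
    """Classify a normalized gaze offset as up/down/left/right, or None inside the dead zone."""
    gx, gy = gaze
    if max(abs(gx), abs(gy)) < dead_zone:
        return None  # operator is looking near the centre: hold position
    if abs(gx) >= abs(gy):
        return "right" if gx > 0 else "left"
    return "up" if gy > 0 else "down"


def roaming_velocity(gaze: Tuple[float, float], speed: float = 0.1) -> Tuple[float, float]:
    """Turn the classified direction into a velocity (m/s); this sketch applies it to every robot."""
    cmd = roaming_command(gaze)
    if cmd is None:
        return (0.0, 0.0)
    dx, dy = DIRECTIONS[cmd]
    return (dx * speed, dy * speed)


print(roaming_command((0.8, 0.2)))     # → right
print(roaming_velocity((0.05, -0.1)))  # → (0.0, 0.0)
```

The dead zone keeps small involuntary eye movements around the view centre from moving the swarm.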

Swarm Goal-oriented Tracking Interaction Rule: Locks onto a target with the gaze ray cast from the eye tracker and drives the robots toward the selected target.
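One way to implement target locking, sketched here in Python under stated assumptions, is to cast the gaze ray into the virtual scene, model each target as a sphere, and select the nearest target the ray intersects using a standard ray-sphere test. Each robot then steps toward the selected target's ground-plane position with a capped step length. The function names, the spherical target model, and the step limit are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
Vec2 = Tuple[float, float]


def ray_hits_sphere(origin: Vec3, direction: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Distance along the gaze ray to the first intersection with a sphere, or None."""
    norm = math.sqrt(sum(v * v for v in direction))
    d = [v / norm for v in direction]  # unit ray direction
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(dv * ov for dv, ov in zip(d, oc))
    k = sum(v * v for v in oc) - radius * radius
    disc = b * b - k  # discriminant of t^2 + 2bt + k = 0
    if disc < 0:
        return None
    root = math.sqrt(disc)
    t = -b - root
    if t < 0:
        t = -b + root  # nearer root is behind the origin; try the farther one
    return t if t >= 0 else None


def select_target(origin: Vec3, direction: Vec3, targets: Dict[str, Tuple[Vec3, float]]) -> Optional[str]:
    """Return the name of the nearest target hit by the gaze ray, or None."""
    best, best_t = None, math.inf
    for name, (center, radius) in targets.items():
        t = ray_hits_sphere(origin, direction, center, radius)
        if t is not None and t < best_t:
            best, best_t = name, t
    return best


def step_towards(pos: Vec2, goal: Vec2, max_step: float) -> Vec2:
    """Move a robot's ground-plane position toward the goal by at most max_step."""
    dx, dy = goal[0] - pos[0], goal[1] - pos[1]
    dist = math.hypot(dx, dy)
    if dist <= max_step:
        return goal
    return (pos[0] + dx / dist * max_step, pos[1] + dy / dist * max_step)


targets = {"1": ((-1.0, 0.0, 5.0), 0.5), "2": ((1.0, 0.0, 5.0), 0.5)}
print(select_target((0.0, 0.0, 0.0), (1.0, 0.0, 5.0), targets))  # → 2
print(step_towards((0.0, 0.0), (3.0, 4.0), max_step=1.0))  # → (0.6, 0.8)
```

In this sketch the selected target's ground-plane position would be passed as `goal` to `step_towards` for each robot on every control cycle; how the 3D scene maps onto the arena floor depends on the VR engine's coordinate convention and is left open.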

Swarm Trajectory Tracking Interaction Rule: Uses a trajectory traced by the operator's gaze as a preset path for the robot swarm to follow.
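The trajectory rule can be sketched in two steps, again under assumptions not taken from the paper: the gaze trace, already projected onto the ground plane, is thinned into waypoints spaced at least `min_spacing` apart, and each robot then heads for the current waypoint, advancing once it is within `tolerance`. `gaze_path_to_waypoints` and `WaypointFollower` are hypothetical names; in this sketch, any obstacle avoidance comes only from the operator tracing the path around obstacles.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]  # ground-plane position in metres


def gaze_path_to_waypoints(samples: Sequence[Point], min_spacing: float) -> List[Point]:
    """Thin a noisy gaze trace into waypoints at least min_spacing apart."""
    waypoints: List[Point] = []
    for p in samples:
        if not waypoints or math.dist(p, waypoints[-1]) >= min_spacing:
            waypoints.append(p)
    return waypoints


class WaypointFollower:
    """Hands out the current waypoint and advances once the robot is close enough."""

    def __init__(self, waypoints: Sequence[Point], tolerance: float = 0.05) -> None:
        self.waypoints = list(waypoints)
        self.index = 0
        self.tolerance = tolerance

    def target(self, pos: Point) -> Optional[Point]:
        """Return the waypoint to head for, or None once the path is complete."""
        while (self.index < len(self.waypoints)
               and math.dist(pos, self.waypoints[self.index]) <= self.tolerance):
            self.index += 1
        if self.index == len(self.waypoints):
            return None
        return self.waypoints[self.index]


trace = [(0.0, 0.0), (0.01, 0.0), (0.2, 0.0), (0.21, 0.01), (0.4, 0.0)]
path = gaze_path_to_waypoints(trace, min_spacing=0.1)
print(path)  # → [(0.0, 0.0), (0.2, 0.0), (0.4, 0.0)]
```

The follower only decides which point to aim for; each robot would pair it with any point-to-point motion controller.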

Experiments

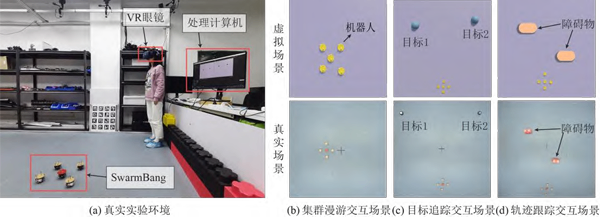

The researchers used SwarmBang robots to form a small-scale swarm and conducted real-world HSI experiments to verify the feasibility and effectiveness of the proposed VR and eye-tracking-based HSI method.

Real-world experimental environment and VR interaction scene

Swarm Roaming Experiment: Using eye movements, the operator directs the robots to move right, left, down, and up in sequence. The robots respond promptly, and their trajectories closely match the eye-movement commands.

Swarm Goal-oriented Tracking Experiment: The operator gazes at different targets in turn, and the robots quickly switch targets and move steadily toward each one. The targets are activated in the sequence 1→2→1→2→1 (left→right→left→right→left), producing clear trajectories.

Swarm Trajectory Tracking Experiment: The operator presets a trajectory with eye movements. The robots move smoothly along it, tracking it precisely and successfully avoiding obstacles.

The experimental results demonstrate that the VR and eye-tracking-based HSI method enables efficient, flexible, and highly immersive interactive control of swarm robots.

In these experiments, the NOKOV motion capture system provided real-time trajectory data of the robots in the real environment, linking the real robots' motion states to their digital twins and thereby supporting the execution of eye-tracking control commands.

Authors' Profiles

XU Mingyu, Master's graduate from the School of Information and Control Engineering, Xi'an University of Architecture and Technology. Main research areas: Human-Swarm Robot Interaction, Virtual Reality Technology.

LEI Xiaokang (Corresponding Author), Associate Professor and Master's Supervisor at the School of Information and Control Engineering, Xi'an University of Architecture and Technology. Main research areas: Swarm Robotics and Swarm Intelligence.

DUAN Zhongxing, Executive Vice Dean of the International Education College and Professor at the School of Information and Control Engineering, Xi'an University of Architecture and Technology. Main research areas: Intelligent Systems and Intelligent Information Processing, Intelligent Detection and Machine Vision, Building Environment Control and Energy Saving Optimization, Embedded Technology and Intelligent Systems.

XIANG Yalun, Master's graduate from the School of Information and Control Engineering, Xi'an University of Architecture and Technology. Main research areas: Swarm Robotics.

DUAN Mengyuan, Master's graduate from the School of Information and Control Engineering, Xi'an University of Architecture and Technology. Main research areas: Visual Localization for Swarm Robots.

ZHENG Zhicheng, Ph.D. candidate at the School of Marine Science and Technology, Northwestern Polytechnical University. Main research areas: Swarm Intelligence, Swarm Robotics.

PENG Xingguang, Professor and Doctoral Supervisor at the School of Marine Science and Technology, Northwestern Polytechnical University. Main research areas: Swarm Intelligence, Evolutionary Computation, Machine Learning and their applications in unmanned systems, especially unmanned swarms.