Abstract

In 2025, Professor Yi Zhou of Hunan University served as corresponding author on two papers published in IEEE Transactions on Robotics (TRO), a top-tier robotics journal. The works demonstrate significant progress in event-camera-based state estimation and SLAM, with motion capture data used to validate the proposed methods in real high-speed scenarios.

Paper One

Event-based Visual-Inertial State Estimation for High-Speed Maneuvers


RSS 2024 "Event-based Visual Inertial Velometer"

In 2024, Professor Yi Zhou's team published "Event-based Visual Inertial Velometer" at Robotics: Science and Systems (RSS), a top robotics conference. Over the following year, the team further refined the algorithm and added experimental validation in real high-speed scenarios, demonstrating the method's low latency and high accuracy. The extended work, "Event-Based Visual-Inertial State Estimation for High-Speed Maneuvers," was then published in IEEE TRO, a top robotics journal.

Citation

X. Lu, Y. Zhou, J. Mai, K. Dai, Y. Xu and S. Shen, "Event-Based Visual-Inertial State Estimation for High-Speed Maneuvers," in IEEE Transactions on Robotics, vol. 41, pp. 4439-4458, 2025, doi: 10.1109/TRO.2025.3584544.

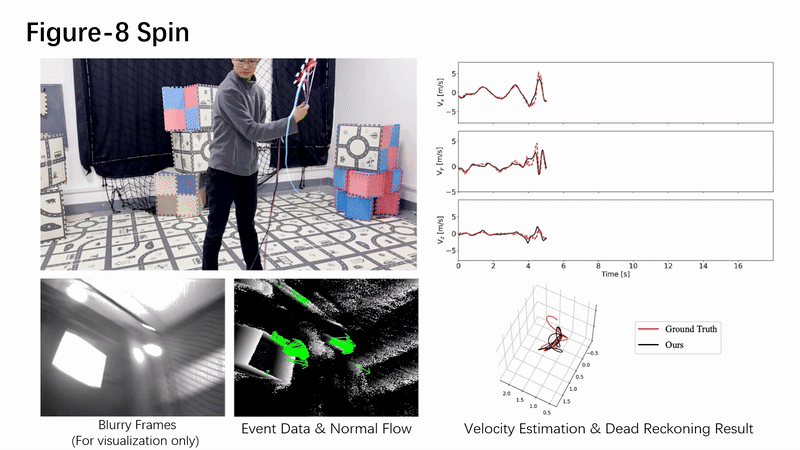

This paper proposes a high-speed motion state estimation method based on an event camera and an inertial measurement unit (IMU), achieving, for the first time, real-time linear velocity estimation that relies solely on event streams and inertial data. It offers a new approach to precise localization in dynamic scenarios such as UAVs and high-speed robots. Working from first principles, the team proposed a novel "velometer"-like design: by fusing sparse normal flow from a stereo event camera with IMU data in a continuous-time B-spline model, the method directly estimates instantaneous linear velocity, removing the dependence of traditional methods on feature matching or environmental structure. Experiments in real high-speed scenarios (such as sharp drone turns and tethered spinning) show that the method reduces velocity estimation error by more than 50% compared with existing techniques while meeting real-time computational requirements.

Fig. 1 The motion capture system provides ground-truth trajectories of the event camera for this paper, used to validate the proposed method.
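To make the velometer idea more concrete, here is a simplified sketch of how normal flow can constrain linear velocity. It is a single-instant least-squares illustration under stated assumptions, not the paper's estimator. It assumes the depth Z at each event is already known (for example, from stereo), that the gyroscope supplies a bias-free angular velocity omega, and it uses the standard pinhole motion-field model in normalized image coordinates. The continuous-time B-spline and the accelerometer fusion are omitted, and the function names (`motion_field_matrices`, `estimate_linear_velocity`) are hypothetical. Each normal-flow measurement m along a unit normal n yields one equation n·(A v / Z + B ω) = m, which is linear in the velocity v. Three or more well-spread measurements therefore determine v.

```python
import numpy as np


def motion_field_matrices(x, y):
    """Matrices A, B of the pinhole motion field u = A @ v / Z + B @ omega.

    (x, y) are normalized image coordinates; v and omega are the camera's
    linear and angular velocity expressed in the camera frame.
    """
    A = np.array([[-1.0, 0.0, x],
                  [0.0, -1.0, y]])
    B = np.array([[x * y, -(1.0 + x * x), y],
                  [1.0 + y * y, -x * y, -x]])
    return A, B


def estimate_linear_velocity(points, depths, normals, normal_flow, omega):
    """Least-squares linear velocity from normal-flow constraints (toy model).

    points: (N, 2) normalized coordinates; depths: (N,) depths Z (assumed known,
    e.g. from stereo); normals: (N, 2) unit normal directions; normal_flow: (N,)
    measured flow along each normal; omega: (3,) gyro angular velocity, assumed
    bias-free.
    """
    rows, rhs = [], []
    for (x, y), z, n, m in zip(points, depths, normals, normal_flow):
        A, B = motion_field_matrices(x, y)
        rows.append(n @ A / z)          # coefficients of v in n . u
        rhs.append(m - n @ B @ omega)   # subtract the rotational contribution
    v, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return v


# Synthetic check: generate exact normal flow from a known motion, then recover v.
rng = np.random.default_rng(0)
N = 200
points = rng.uniform(-0.5, 0.5, size=(N, 2))
depths = rng.uniform(1.0, 5.0, size=N)
angles = rng.uniform(0.0, np.pi, size=N)
normals = np.stack([np.cos(angles), np.sin(angles)], axis=1)
v_true = np.array([1.0, -0.5, 2.0])
omega = np.array([0.1, -0.2, 0.3])

normal_flow = np.empty(N)
for i, ((x, y), z, n) in enumerate(zip(points, depths, normals)):
    A, B = motion_field_matrices(x, y)
    normal_flow[i] = n @ (A @ v_true / z + B @ omega)

v_est = estimate_linear_velocity(points, depths, normals, normal_flow, omega)
print("estimated v:", v_est)
```

In this toy setting, the gyro reading removes the rotational term, leaving only the translational term. That is why flow along the normal direction alone is enough to recover v, without full optical flow or feature matching. Real event data adds noise, outliers, and time-varying motion, which the paper's continuous-time formulation is designed to handle.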

Paper Two

ESVO2: Direct Visual-Inertial Odometry With Stereo Event Cameras

Building on this earlier research, Professor Yi Zhou's team proposed a stereo event-based visual-inertial odometry system focused on improving 3D mapping efficiency and pose estimation accuracy. The work, titled "ESVO2: Direct Visual-Inertial Odometry with Stereo Event Cameras," was published in IEEE TRO, a top robotics journal.

Citation

J. Niu, S. Zhong, X. Lu, S. Shen, G. Gallego and Y. Zhou, "ESVO2: Direct Visual-Inertial Odometry With Stereo Event Cameras," in IEEE Transactions on Robotics, vol. 41, pp. 2164-2183, 2025, doi: 10.1109/TRO.2025.3548523.

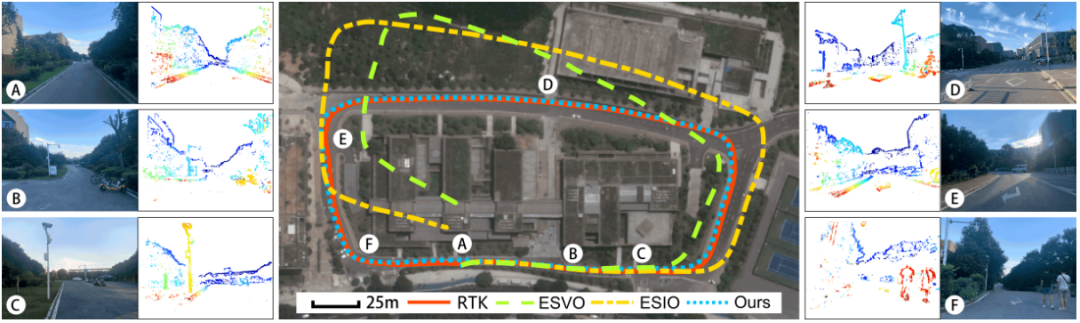

By introducing a new strategy for sampling contour points and fusing depth estimates from temporal and static stereo configurations, the study improves both the completeness and the computational efficiency of the mapping module. To address degeneracy in estimating the pitch and yaw components of the camera pose, it uses IMU measurements as motion priors and builds a compact, robust back-end optimization that estimates IMU biases and linear velocity in real time. Experiments show that, compared with existing mainstream algorithms, the proposed system achieves higher localization accuracy and better real-time performance in large-scale outdoor environments.

Fig. 2 Experimental results of localization within the Hunan University campus.

A motion capture system also provides ground-truth trajectories for this paper, used to validate the proposed method.
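The depth-fusion step in the mapping module can be illustrated with a deliberately simplified sketch. It assumes that each contour point has two inverse-depth estimates, one from temporal stereo and one from static stereo, and that each is modeled as a Gaussian with a known variance. The two estimates are combined by inverse-variance weighting after a simple consistency gate. The Gaussian model, the gate threshold `k`, the fallback policy, and the function names (`fuse_inverse_depth`, `fuse_point`) are all assumptions for illustration. They are not necessarily what ESVO2 uses.

```python
import math


def fuse_inverse_depth(rho_a, var_a, rho_b, var_b):
    """Inverse-variance weighted fusion of two Gaussian inverse-depth estimates."""
    w_a, w_b = 1.0 / var_a, 1.0 / var_b
    var = 1.0 / (w_a + w_b)
    rho = var * (w_a * rho_a + w_b * rho_b)
    return rho, var


def fuse_point(temporal, static, k=2.0):
    """Fuse temporal-stereo and static-stereo estimates (rho, variance) of one point.

    If the two estimates disagree by more than k combined standard deviations,
    they are treated as inconsistent and the more certain one is kept
    (a hypothetical policy chosen for illustration).
    """
    (rho_t, var_t), (rho_s, var_s) = temporal, static
    if abs(rho_t - rho_s) > k * math.sqrt(var_t + var_s):
        return temporal if var_t <= var_s else static
    return fuse_inverse_depth(rho_t, var_t, rho_s, var_s)


# Consistent estimates: fused variance is smaller than either input variance.
rho, var = fuse_point((0.50, 0.01), (0.40, 0.04))
print(f"fused inverse depth {rho:.3f} (depth {1.0 / rho:.3f}), variance {var:.4f}")

# Inconsistent estimates: the lower-variance (temporal) estimate is kept.
kept = fuse_point((0.50, 0.01), (2.00, 0.04))
print("kept:", kept)
```

With these example inputs, the weights are 100 and 25. The fused inverse depth is therefore 0.48, a depth of about 2.08 in whatever unit the inverse depths use, with variance 0.008. That variance is lower than either input's, which illustrates why combining the two stereo configurations can make the map both denser and more certain.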

Corresponding Author

Yi Zhou is a Professor and Doctoral Supervisor at the School of Robotics, Hunan University, and Director of the NAIL Lab at Hunan University. He received his Ph.D. from the College of Engineering and Computer Science at the Australian National University in 2018 and was selected for the national high-level young talent program. His research focuses on robot perception and navigation based on advanced visual sensors, including high-speed autonomous robotics, visual odometry/SLAM, dynamic vision sensors (event-based vision), and multi-view geometry. His main results have been published in leading robotics and computer vision journals and conferences such as IEEE T-RO, RSS, and ECCV. He currently serves as an Associate Editor for IEEE Robotics and Automation Letters (RA-L).

About the NAIL Lab: